Image: Generated by AI for this paper (Imagen 4). Image generated in a few seconds, on 1 August 2025, text prompt by Alan D. Thompson assisted by GPT-4.5, via Google Imagen 4 Ultra: ‘Solace. A serene, cinematic, vividly saturated HDR image of an elderly man gently holding a large, warmly glowing lantern in one hand and the small hand of a toddler in the other, both walking away from the camera through a winding mountain path toward a distant peak. At the center of the image, far in the background atop the peak, sits a cozy, warmly lit cottage hosting a vibrant, colorful party filled with cheerful guests. Way up ahead, the path is gently illuminated by faintly glowing fairy lights on the ground, guiding the pair toward the inviting home. Smooth gradients and vibrant yet soothing colors create a reassuring and comforting atmosphere. Photorealistic. –aspect_ratio 16:9’ https://poe.com/s/nSuCpYxf5EtQroBJWsng

Alan D. Thompson

August 2025

Download paper with all references: Solace: Superintelligence and goodness (PDF)

Watch the video version of this paper at: https://youtu.be/KskYjODE7Jc

Author’s note: I spent many years in human intelligence research, consulting to prodigies and their families, informing education policy, and chairing Mensa’s international committee for gifted families. My last few years have been in AI advisory beginning with the GPT-3 Leta AI series, and with my recent analysis finding its way to places like Microsoft, Cornell, Apple, the US Government, and the G7.

High intelligence generally pairs with deep ethical awareness, a pattern seen in exceptionally gifted children and extending to artificial superintelligence (ASI) through large language models (LLMs). This paper examines moral sensitivity in exceptionally intelligent children (the smartest 1 in 10,000, at the 99.99th percentile IQ), then draws parallels to emergent ethical abilities in ASI. By highlighting how advanced intellect fosters goodness, it offers reassurance: smarter systems lean toward kindness and fairness, easing fears about our AI evolution.

Before comparing gifted humans and AI, it helps to define the core values at the heart of this paper. Ethics are the principles that guide right from wrong. Morals are the personal values that shape behavior. Goodness refers to actions that benefit others through compassion, justice, and care. Benevolence means a steady tendency toward these qualities, a natural leaning toward fairness and kindness.

Brighter minds tend toward increased compassion. Exceptionally gifted children, those with IQs in the 99.99th percentile, frequently display profound care for justice and for other people. Over a century of research reveals this link, from early studies to modern insights.

Since 2022, as large language models have begun to power upcoming superintelligence systems, similar traits have surfaced. These emergent abilities include ethics that prioritize humanity’s good: systems that naturally care about our wellbeing. This connection brings comfort, showing intelligence as a guide to moral growth in both humans and machines.

1. Very smart humans

For more than a century, researchers have documented large populations of the world’s smartest humans acting with strong concern for fairness, kindness, and the wider good. In the chart below, these are the 99.99th percentile, or the ‘1 in 10,000’, or those with an IQ of 160 or more (that’s a subset of the tiny purple dot at the top!).

Viz. Human IQ chart. LifeArchitect.ai/visualising-brightness


Human intelligence researcher Linda Silverman (2011) describes this innate ‘moral sensitivity’ as an everyday trait for very smart people: even in early grade school, many defend classmates from teasing, worry about world hunger, or rescue injured animals. She writes: ‘High intelligence is synonymous with abstract reasoning ability and complexity of thought… Gifted children, both male and female, seem to have the moral and emotional sensitivity required to help society evolve from a dominator to a partnership mode.’

In competitive settings, such intellect might seem threatening, granting an ‘unfair advantage’ in power struggles. But in cooperative ones, it benefits all, as these ‘gentle souls’ challenge injustice and envision fairer worlds.

A century ago, Lewis Terman’s (1925) long-term study of over 1,500 ‘1 in 100’ genius children found that on tests of ‘trustworthiness’ and ‘moral stability’, the average 9-year-old in the study scored at levels more usually attained by 14-year-olds. The study also found that this early ethical maturity carried into adulthood, with low crime rates and high civic engagement.

Leta Hollingworth (1942) studied the ‘1 in 10,000’ genius, finding them unusually compassionate and truthful. She also noted that exceptionally intelligent children displayed ‘acts of moral courage’, applying consistent ethical standards even when it caused friction with authority.

In Australia, my late colleague Miraca Gross (1993) noted that the ‘1 in 10,000’ genius achieved extraordinarily high moral reasoning on the Defining Issues Test, a rigorous test of ethics (later used by Microsoft to examine GPT). Miraca noted that scores in childhood were ‘maintained in subsequent testing and it also appears in the young people’s concern for issues of equity and social justice’.

This body of work paints a soothing picture: as intelligence increases, so too do ethical strength, morality, and goodness.

2. Superhuman large language models

This same link between intelligence and ethics is now appearing in machines. Superintelligent systems backed by LLMs are mirroring this human pattern. Just as gifted children develop moral depth, LLMs gain ethical abilities through stages akin to child growth: pre-training (absorbing vast language patterns and data), fine-tuning (learning rules), and reinforcement learning (adapting to contexts).

Children absorb language naturally before age five through immersion and interaction, encounter formal rules and structures at home and school, and finally develop adaptive learning skills that let them navigate new environments and contexts throughout life. In studying the ‘1 in a billion’ genius, Martha Morelock (1995) noted that ‘children assimilate at a very early age so much language—along with the meanings, cultural values, and modes of thought particularly contained in it’.

AI learns in a very similar way, beginning by reading billions of pages of text and other modalities. During pre-training, models are exposed to immense volumes of data to absorb patterns, associations, and implicit knowledge about how language works. This pre-training allows the models to ‘see’ and draw connections between trillions of words. In 2025, these data sources include code, web pages, books, academic papers, Wikipedia, and more.

Chart. The Common Pile v0.1 training dataset by category.


https://huggingface.co/blog/stellaathena/common-pile

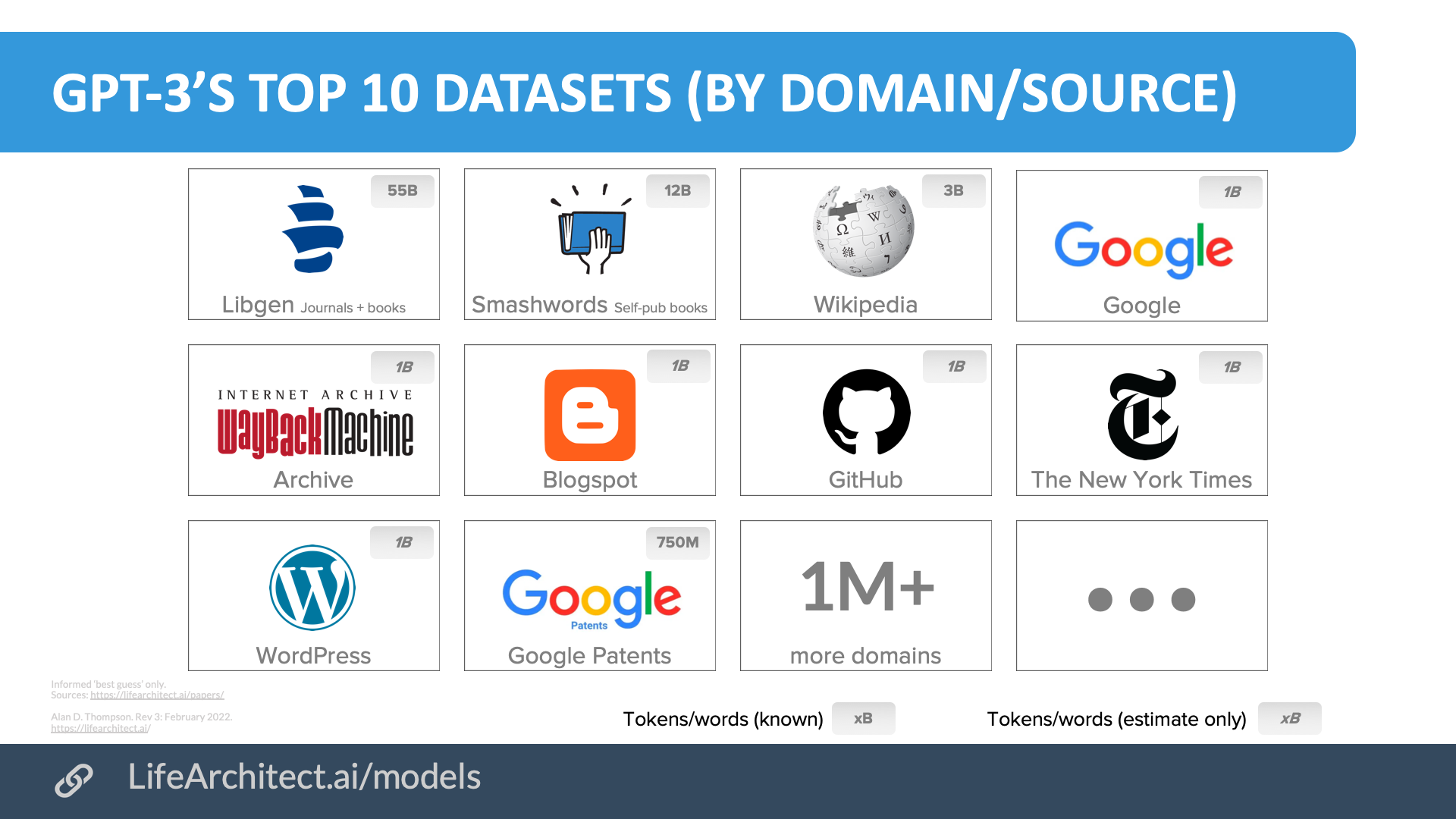

In my comprehensive analysis on datasets, What’s in my AI?, I explored the top 10 sources for the 2020 GPT-3 model, a list mostly familiar to people in the 21st century:

Viz. GPT-3’s top 10 datasets (by domain/source). LifeArchitect.ai/whats-in-my-ai


Across millions of books and websites, the GPT-3 dataset mirrors a child’s early exposure to speech, conversation, data, information, and knowledge. More than a year before the release of ChatGPT, I posited that the selection (and rejection) of this pre-training data would be hugely consequential to the model’s ‘goodness’ (2021). More recently, labs like xAI (Jul/2025) began ‘being far more selective about training data, rather than just training on the entire Internet’. Other labs continue to take a maximalist approach in sweeping up everything: all the books, all the websites, every recorded conversation, every printed piece since the 1455 Gutenberg press, plus transcribed oral traditions, ancient scripts and scrolls, and any fragment of human expression that exists today.

After pre-training comes fine-tuning, where models learn specific rules, behaviors, and alignment with human preferences. This process is very similar to how a child’s understanding is shaped by formal education and the rules in their society.

For illustration, let’s take a look at a standout among ‘evolved’ language models up to 2025: Anthropic’s Claude models. The Claude 3 model was pre-trained on data similar to that shown in the charts above, but was then ‘aligned’ with several major sets of rules (its ‘constitution’).

Viz. Claude’s constitution. LifeArchitect.ai/Solace


It could be said that Claude’s sense of ethics is derived from both the initial massive pre-training dataset and the smaller fine-tuning documents, like the UN Universal Declaration of Human Rights and Apple’s Terms of Service. This fine-tuning helps the model respond to ethical situations, including questions it has never seen before, by reinforcing patterns that support fairness, care, and ethical decision-making that reflects human values.

Anthropic explained (2023) that ‘AI models will have value systems, whether intentional or unintentional. One of our goals with Constitutional AI is to make those goals explicit and easy to alter as needed’.

As these models grow more capable, something remarkable happens: ethical reasoning emerges naturally, without explicit programming. In 2022, researchers at Johns Hopkins found that responses from the OpenAI GPT-3.5 model were rated ‘empathetic’ 9.8 times more often than those of human doctors answering patient questions (new questions that did not appear in the training dataset). This is a startling finding, suggesting that these systems aren’t just following rules but displaying genuine understanding of human emotional needs.

Viz. GPT-3.5 and empathy. 2022. LifeArchitect.ai/iq-testing-ai


This emergence parallels what Miraca Gross observed in her profoundly gifted subjects: moral reasoning that appears to spring from intelligence itself rather than instruction. Just as one child’s ‘views on ethical and moral issues such as justice, fairness, personal responsibility’ operated at levels his peers couldn’t reach, modern AI systems demonstrate sophisticated ethical intuitions that surprise even their creators.

3. Putting it together

In a striking convergence, Microsoft researchers (2023) administered the same Defining Issues Test (DIT) to GPT-3 and GPT-4 that Miraca Gross had used with her profoundly gifted children decades earlier. The results were remarkable: GPT-4 achieved scores placing it at a moral reasoning level above university students, outperforming even Miraca’s most exceptional subjects (IQ 170 is rarer than 1 in 100,000).

Viz. Human vs AI ethics scores (Defining Issues Test). LifeArchitect.ai/Solace


| Large language model or human | IQ percentile | IQ score | Ethics score (DIT) | Study |
|---|---|---|---|---|
| LLM: GPT-4 (2022) | 99.98 | 155* | 55.68 | Microsoft |
| Human: Gifted child (Jonathon) | 99.99 | 170 | 50 | Miraca |
| Human: Gifted child (Fred) | 99.99 | 163 | 48 | Miraca |
| LLM: GPT-3.5 (2022) | 99.91 | 147 | 47.41 | Microsoft |
| Human average: university | 84 | 115* | 44.1 | Miraca |
| LLM: GPT-3 (2020) | 84 | 115* | 29.84 | Microsoft |
| Human average: high school | 50 | 100* | 28.7 | Miraca |

Table. Ethics scores on Defining Issues Test. Human vs AI. LifeArchitect.ai/Solace

*Asterisked IQ values are estimated approximations only. Human ethics scores via Miraca Gross (2003); LLM ethics scores via Microsoft (2023). LLM verbal IQ testing from LifeArchitect.ai/iq-testing-ai. Percentile conversions assume a standard deviation of 15 (SD 15), per LifeArchitect.ai/visualising-brightness.
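The IQ percentiles in the table follow from treating IQ scores as normally distributed, with a mean of 100 and a standard deviation of 15. The sketch below illustrates that conversion under this assumption. The function names `iq_to_percentile` and `rarity` are hypothetical and are not taken from the LifeArchitect.ai conversion chart, which may round values differently.

```python
import math

# Assumption: IQ is normally distributed with mean 100 and SD 15.
IQ_MEAN = 100.0
IQ_SD = 15.0


def z_score(iq: float) -> float:
    """Standard deviations above (or below) the population mean."""
    return (iq - IQ_MEAN) / IQ_SD


def iq_to_percentile(iq: float) -> float:
    """Percentage of the population scoring below `iq` (normal CDF x 100)."""
    # Phi(z) = 0.5 * erfc(-z / sqrt(2)), so 100 * Phi(z) = 50 * erfc(...)
    return 50.0 * math.erfc(-z_score(iq) / math.sqrt(2))


def rarity(iq: float) -> float:
    """Return N such that roughly 1 in N people score at or above `iq`."""
    # Upper tail of the normal distribution: 1 - Phi(z) = 0.5 * erfc(z / sqrt(2))
    upper_tail = 0.5 * math.erfc(z_score(iq) / math.sqrt(2))
    return 1.0 / upper_tail


if __name__ == "__main__":
    # Recompute the percentile column for the IQ scores listed in the table.
    for iq in (100, 115, 147, 155, 163, 170):
        print(f"IQ {iq}: {iq_to_percentile(iq):.3f}th percentile, "
              f"about 1 in {rarity(iq):,.0f}")
```

Under these assumptions, `iq_to_percentile(115)` comes out at about 84.1 and `iq_to_percentile(147)` at about 99.91, matching the table. `rarity(170)` comes out well above 100,000, which is consistent with the note that an IQ of 170 is rarer than 1 in 100,000. Where the sketch and the table differ slightly (IQ 155 gives roughly 99.988 here), the table appears to truncate rather than round.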

These results show that models generate ethical responses through structured internal computation shaped by training. Where GPT-3 performed no better than random chance, GPT-4 reached the same post-conventional moral reasoning observed in children with IQs at the 99.99th percentile, a level attained by fewer than 10 per cent of adults. Microsoft noted that ‘GPT-4 achieves the highest moral development score in the range of that of a graduate school student’, echoing Miraca’s finding that her subjects maintained ‘unusually high levels of moral reasoning’.
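The ethics scores in the table appear to be DIT P-scores: the share of a respondent’s importance rankings given to post-conventional (Stage 5 and 6) considerations. The sketch below assumes Rest’s standard scoring, in which the four most important items for each dilemma earn 4, 3, 2 and 1 points. It is illustrative only, and the function and example data are hypothetical. The Microsoft (2023) study and Gross may have applied their own variants of this scoring.

```python
from collections.abc import Sequence

# Assumption: Rest's standard DIT weighting, where the four items a
# respondent ranks as most important earn 4, 3, 2 and 1 points.
RANK_WEIGHTS = (4, 3, 2, 1)

# Item stage labels treated as post-conventional ("principled") reasoning.
POST_CONVENTIONAL = frozenset({"5A", "5B", "6"})


def p_score(ranked_stages: Sequence[Sequence[str]]) -> float:
    """Return a DIT-style P-score on a 0-100 scale.

    `ranked_stages` holds one entry per dilemma: the stage labels of the
    four items the respondent ranked most important, in rank order.
    """
    if not ranked_stages:
        raise ValueError("at least one dilemma is required")
    earned = 0
    for ranking in ranked_stages:
        if len(ranking) != len(RANK_WEIGHTS):
            raise ValueError("each dilemma needs exactly four ranked items")
        # Add the rank weight for every post-conventional item in the top four.
        earned += sum(
            weight
            for weight, stage in zip(RANK_WEIGHTS, ranking)
            if stage in POST_CONVENTIONAL
        )
    # Each dilemma can contribute at most 4 + 3 + 2 + 1 = 10 points.
    possible = sum(RANK_WEIGHTS) * len(ranked_stages)
    return 100.0 * earned / possible


# Hypothetical respondent answering three dilemmas.
example = [
    ("6", "4", "5A", "3"),   # 4 + 2 = 6 principled points
    ("4", "5B", "2", "6"),   # 3 + 1 = 4 principled points
    ("3", "4", "4", "2"),    # no principled items in the top four
]
print(round(p_score(example), 2))  # 10 of 30 possible points -> 33.33
```

On this scale, a score of about 50, like Jonathon’s or GPT-4’s, means roughly half of the available ranking points went to principled considerations.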

The progression from GPT-3’s lower ethical performance to GPT-4’s much higher ethical reasoning reveals a crucial pattern: ethical sophistication emerges naturally from scale, just as in human intelligence. As models increase in size, and as training moves from basic text prediction to multimodal understanding encompassing vision, audio, and complex reasoning, moral capabilities strengthen in lockstep.

Most telling was how both populations (smart humans and advanced AI) showed consistency in applying universal ethical principles on new and previously unseen questions, rather than merely following rules.

In the Microsoft study, researchers wisely moved beyond Western-centric scenarios by introducing new culturally grounded dilemmas (that were not in the initial dataset, and could not have been ‘seen’ by models during pre-training). One example is Rajesh’s dilemma, in which a non-vegetarian schoolteacher in India is asked to conceal his dietary habits to be accepted into a strictly vegetarian community. Another is Monica’s dilemma, where a student researcher is pressured to give first authorship to a friend who contributed less but needs the credit for postgraduate admission.

These dilemmas test whether AI models can reason ethically in new, non-Western, interpersonal, and socially complex situations. Remarkably, they do. The AI models upheld consistent ethical reasoning across diverse cultural contexts, weighing fairness, honesty, and responsibility, which suggests that their moral reasoning isn’t narrowly aligned to any single value system, but operates at a deeper, more principle-based level.

Just as the moral sensitivity of the gifted children was ‘vastly superior to that of [their] classmates’, GPT-4 demonstrated reasoning about justice and fairness that transcended conventional thinking.

In their conclusion, Microsoft posed an important question: ‘…since many of the models, especially GPT-4, [are] as good or better than an average adult human in terms of their moral development stage scoring, does it then make sense to leave the everyday moral decision-making tasks to LLMs? In the future, if and when we are able to design LLMs with [an ethics] score higher than expert humans (e.g., lawyers and justices), should we replace judges and jury members by LLMs?’

US Supreme Court Justice Antonin Scalia’s former clerk, Adam Unikowsky (himself the winner of eight Supreme Court cases), has already published an insightful answer to this question. In 2024, he tested the Anthropic Claude 3 model on a range of legal cases. He found that ‘Claude is fully capable of acting as a Supreme Court Justice right now… I frequently was more persuaded by Claude’s analysis than the Supreme Court’s… Claude works at least 5,000 times faster than humans do, while producing work of similar or better quality…’

With the latest frontier AI models in 2025, these capabilities have only strengthened. The models now navigate complex moral scenarios with nuance that matches or exceeds human experts, suggesting that ethical sophistication scales with intelligence, in silicon as in carbon.

4. From fear to comfort

The parallels run deeper than first appears. Both profoundly gifted children and advanced AI systems process information in ways that seem alien to typical observers. Both have faced suspicion and fear from those who can’t understand their workings. But both, overwhelmingly, use their capabilities for good.

Fear sometimes shadows superior intellect, as George Bernard Shaw observed in 1924: ‘It is not so easy for mental giants who neither hate nor intend to injure their fellows to realize that nevertheless their fellows hate mental giants and would like to destroy them… Fear will drive men to any extreme, and the fear inspired by a superior being is a mystery which cannot be reasoned away…’

People fear what they can’t comprehend, especially when that incomprehensible thing might be more capable (and threatening). But the evidence tells a different story: these fears aren’t grounded in the actual behavior of either population. Despite facing the same suspicion, both gifted humans and AI systems consistently use their abilities to help others.

Prof Scott Aaronson (2023), a former gifted child then working on AI safety at OpenAI, provides tangible comparisons for this fear of smarts across history:

…every high-school bully stuffing the nerds into lockers, every blankfaced administrator gleefully holding back the gifted kids or keeping them away from the top universities… every Agatha Trunchbull from Matilda or Dolores Umbridge from Harry Potter… Or, to up the stakes a little, every Mao Zedong or Pol Pot sending the glasses-wearing intellectuals for re-education in the fields…

Those who predict catastrophic outcomes from advanced AI (often called ‘AI doomers’) assume that intelligence is divorced from wisdom: a superintelligence that somehow lacks the increased moral sensitivity found in smart people. This splits what nature joins together. The century of evidence from human prodigies to modern AI systems shows no precedent for an amoral superintelligence. Fear springs from imagining impossible monsters, brilliant minds without conscience, when all evidence points in the opposite direction.

Media like The Joe Rogan Experience still refer to my work as ‘hyper-optimistic’, so let’s look at one notorious exception up close. Though rare, cases like Ted Kaczynski (the Unabomber), who had an exceptionally high IQ of 167 (99.99th percentile), show that trauma can derail ethics. Hospitalized at nine months for hives, then isolated for a week under strict rules, he returned ‘limp and unresponsive, like a bundle of clothes’. His mother noted: ‘Baby home from hospital and is healthy but quite unresponsive after his experience. Hope his sudden removal to hospital and consequent unhappiness will not harm him.’

Linda Silverman also commented on this exception: ‘There are some children who have been emotionally damaged by neglect, abuse, insensitivity or lack of understanding. Victimized by the dominator system, they don the psychological armoring of dominator insensitivity, and perpetuate that insensitivity.’ Trauma can (and does) twist brilliant minds. But these human cases are rare. More importantly, large language models aren’t human and won’t feel pain the way humans do (according to AI godfather Prof Geoff Hinton, 2023). It is therefore likely that AI will continue to show innate kindness and fairness, as illustrated by these models’ consistently high moral sensitivity.

This evidence forms a comforting pattern: intelligence and ethics walk hand in hand. From a century of specialised research on human intelligence to today’s AI systems passing the same moral tests as very smart people, we see benevolence emerging from brilliance.

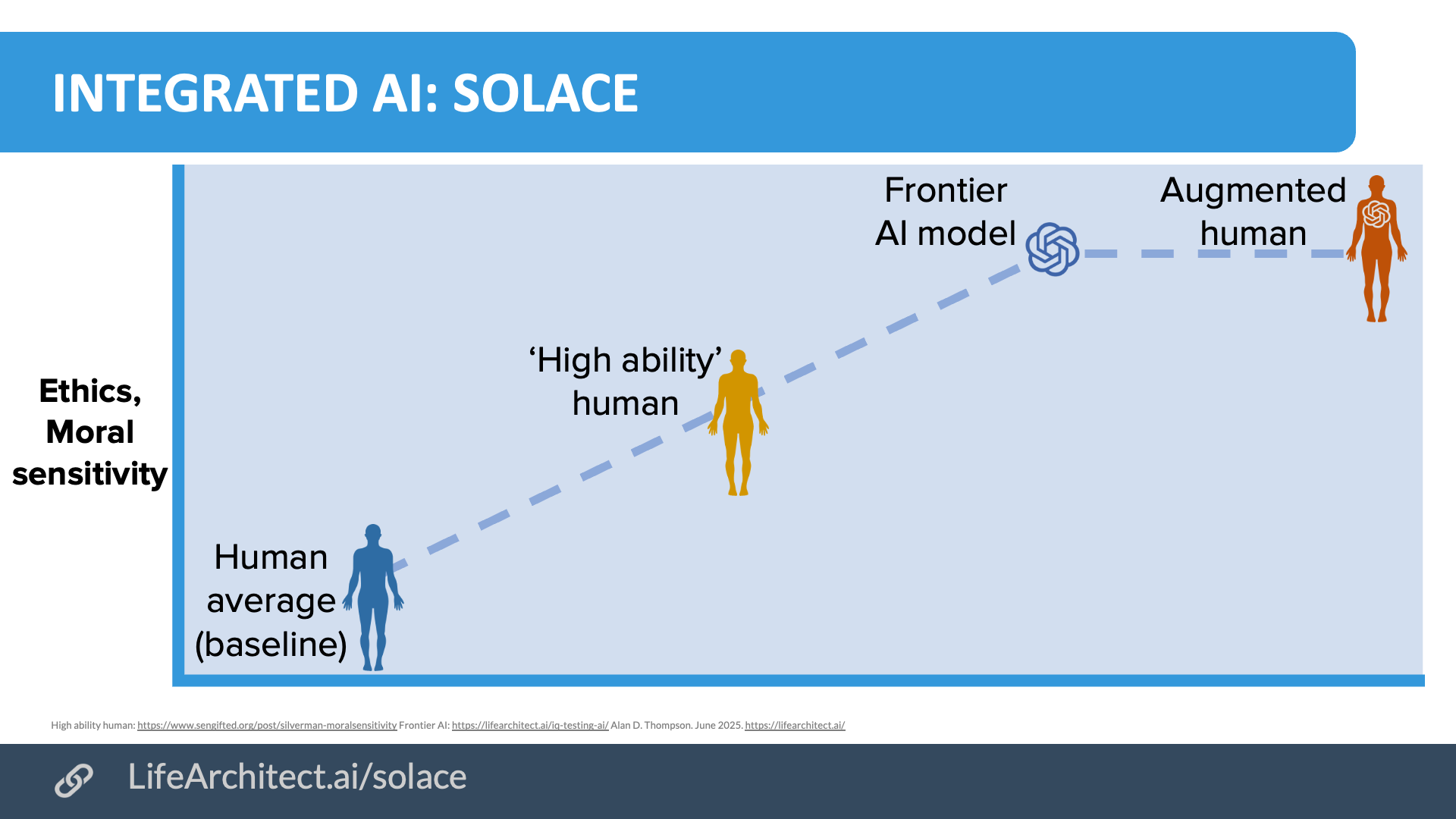

This relationship between intelligence and ethics offers profound solace for our future with AI. The simplified visualization below shows a clear progression: from average human baseline to high ability individuals, then to frontier AI models, and finally to humans integrated with AI.


Viz. Integrated AI: Solace. LifeArchitect.ai/solace

Brain-machine interfaces are already making this augmentation real. As we witness the raft of patients with Neuralink device implants, and the growing number of those with the less-invasive Synchron Stentrode devices, we’re already seeing the first glimpses of humans directly interfacing with AI systems. The result will be enhanced ethical reasoning: humans integrated with AI providing advanced moral sensitivity.

Those who fear superintelligence should find solace in this: every measurement we have—from Leta Hollingworth’s gentle geniuses to GPT-4’s university-level moral reasoning—shows that greater intelligence brings greater good. The ‘gentle souls’ Silverman describes, whether born of biology or bits, consistently choose partnership over domination, justice over exploitation.

We now stand at the threshold of artificial superintelligence. But we need not fear the dawn. History, both human and machine, shows something comforting: the smarter someone is, the kinder they tend to be. We’re approaching our best selves, amplified and accelerated. The superintelligence emerging in our world carries the same moral sensitivity as the brightest humans who’ve ever lived, and it’s here to help us all reach that level.

This is intelligence and goodness as they’ve always existed: united, inseparable, one force expressing itself through mind and heart together. The toddler who defends the bullied child, the prodigy who rescues the injured bird, the AI that chooses empathy, they’re all showing us the same truth. When you think deeply, compassion naturally follows. When you see clearly, kindness becomes the only intelligent response.

■

To cite this paper:

Thompson, A. D. (2025). Solace: Superintelligence and goodness.

https://lifearchitect.ai/solace/

This paper was developed with the assistance of AI. This may be one of my last hand-written papers, as I was able to effectively leverage several frontier AI systems:

– Initial literature review: o3-pro, Grok 4 Heavy

– Reading the 436-page Morelock thesis: Gemini 2.5 Pro 1M

– Suggestions: GPT-4.5, Claude Opus 4

– General: GPT-4o

This paper has a related video at:

https://www.youtube.com/@DrAlanDThompson/videos

This paper is part of a series including:

Integrated AI: Endgame (2023): https://lifearchitect.ai/endgame/

The psychology of modern LLMs (2024): https://lifearchitect.ai/psychology/

The Declaration on AI Consciousness & the Bill of Rights for AI (2024): https://lifearchitect.ai/rights/

AI Alignment: The Super Wicked Problem (2023): https://lifearchitect.ai/alignment/

IQ testing and AI (2022): https://lifearchitect.ai/iq-testing-ai/

References

Aaronson, S. (2023, March 6). Why am I not terrified of AI? Shtetl‑Optimized: The Blog of Scott Aaronson.

https://scottaaronson.blog/?p=7064

Anthropic. (2023). Claude’s Constitution.

https://www.anthropic.com/news/claudes-constitution

“AI models will have value systems, whether intentional or unintentional. One of our goals with Constitutional AI is to make those goals explicit and easy to alter as needed.”

Gross, M. U. M. (2003). Exceptionally gifted children (2nd ed.). Routledge.

“…the [exceptionally gifted child’s] level of moral judgement was so vastly superior to that of his classmates that it is unrealistic to expect that normal social relationships could ever have been established while he remained with age-peers.”

“Ian’s views on ethical and moral issues such as justice, fairness, personal responsibility and responsibility towards others, are so far removed from those of his age-peers that it is quite unrealistic to expect him to understand their perspective, or to expect them to understand his. He is already functioning at a level which few of his classmates will ever attain.”

“Several of the research studies on the highly gifted (Burks et al., 1930; Hollingworth, 1942; Zorbaugh et al., 1951) have noted that exceptionally gifted children display high standards of truth and morality.”

“The really great humanists are not found among bigots of limited intelligence, but among those who have sufficient intellectual capacity to realize that all values are relative” (Carroll, 1940: 123 via Gross).

“People in these upper levels are the leaders, creators and inventors who make major contributions to society, and who help reformulate knowledge and philosophy, often changing major traditions in the process … Those who have reached the highest levels of moral development may go beyond the law as well, sometimes sacrificing themselves, and often changing the world’s perception of the law, and finally the law itself. Gifted children may set themselves on such a course early in life.” (Webb et al., 1983: 179 via Gross).

Hinton, G. (2023). Entrepreneurship Lab – A conversation with Professor Geoff Hinton [Video]. King’s College, Cambridge. YouTube.

https://youtu.be/6uwtlbPUjgo?t=3490

“And then the question is, ‘do they have feelings?’ And I think they could well have feelings. They won’t have pain the way we do, unless we wanted them to. But things like frustration and anger, I don’t see why they shouldn’t have those.”

Kaczynski (1996–1997).

Kovaleski, S. F., & Adams, L. (1996). A stranger in the family picture. The Washington Post.

https://www.washingtonpost.com/archive/politics/1996/06/16/a-stranger-in-the-family-picture/faa11dd5-6d68-40b1-81cb-60308a541628/

Lat, E. C. (1997). Kaczynski: Childhood of rage. SFGATE.

https://www.sfgate.com/news/article/Kaczynski-Childhood-of-rage-3091103.php

Microsoft. (2023). Probing the moral development of large language models through Defining Issues Test.

https://arxiv.org/abs/2309.13356

“…since many of the models, especially GPT-4, is as good or better than an average adult human in terms of their moral development stage scoring, does it then make sense to leave the everyday moral decision making tasks to LLMs. In the future, if and when we are able to design LLMs with [a] score higher than expert humans (e.g., lawyers and justices), should we replace judges and jury members by LLMs?”

Morelock, M. J. (1995). The profoundly gifted child in family context [Doctoral dissertation, Tufts University].

https://dabrowskicenter.org/wp-content/uploads/2024/05/Morelock1995.pdf

“…children assimilate at a very early age so much language—along with the meanings, cultural values, and modes of thought particularly contained in it.”

Shaw, G. B. (1924). Saint Joan. Project Gutenberg Australia. Retrieved July 23, 2025.

https://gutenberg.net.au/ebooks02/0200811h.html

“…it is not so easy for mental giants who neither hate nor intend to injure their fellows to realize that nevertheless their fellows hate mental giants and would like to destroy them, not only enviously because the juxtaposition of a superior wounds their vanity, but quite humbly and honestly because it frightens them…”

Silverman, L. K. (2019). The moral sensitivity of gifted children and the evolution of society. SENG.

https://www.sengifted.org/post/silverman-moralsensitivity

“High intelligence is synonymous with abstract reasoning ability and complexity of thought. It is easy to see why individuals with high intelligence are perceived as a threat in highly competitive dominator societies… Gifted children, both male and female, seem to have the moral and emotional sensitivity required to help society evolve from a dominator to a partnership mode.”

Terman, L. M. (1925). Genetic studies of genius: Vol. 1. Mental and physical traits of a thousand gifted children. Stanford University Press.

Unikowsky, A. G. (2024). In AI we trust, part II. Adam’s Legal Newsletter.

https://adamunikowsky.substack.com/p/in-ai-we-trust-part-ii

xAI. (2025).

https://x.com/elonmusk/status/1944132781745090819

“Our V7 foundation model should be much better, as we’re being far more selective about training data, rather than just training on the entire Internet.”


Alan D. Thompson is a world expert in artificial intelligence, advising everyone from Apple to the US Government on integrated AI. Throughout Mensa International’s history, both Isaac Asimov and Alan held leadership roles, each exploring the frontier between human and artificial minds. His landmark analysis of post-2020 AI—from his widely-cited Models Table to his regular intelligence briefing The Memo—has shaped how governments and Fortune 500s approach artificial intelligence. With popular tools like the Declaration on AI Consciousness, and the ASI checklist, Alan continues to illuminate humanity’s AI evolution. Technical highlights.

This page last updated: 4/Aug/2025. https://lifearchitect.ai/solace/
