The image above was generated by AI for this paper (GPT-4o)1Image generated in a few seconds, on 17 May 2025, text prompt by Alan D. Thompson, via GPT-4o image gen: ‘image: vivid blue sky, predictable. beautiful hand-drawn sky and scene, water color. suggestions of exponential curves and lines showing forecasting. wide.’ https://chatgpt.com/share/6827c855-8b90-800c-91db-5230587d132f

Alan D. Thompson

June 2025

| Date | Report title |
| --- | --- |
| End-2025 | The sky is supernatural |
| Mid-2025 | The sky is delivering |
| End-2024 | The sky is steadfast |
| Mid-2024 | The sky is quickening |
| End-2023 | The sky is comforting |
| Mid-2023 | The sky is entrancing |
| End-2022 | The sky is infinite |
| Mid-2022 | The sky is bigger than we imagine |
| End-2021 | The sky is on fire |

‘And now a time has come where it is not only true that we can afford to relax—and take the horse out of its harness—but where it has become a matter of urgency that we do so… We possess such immense resources of power that pessimism is a laughable absurdity.’

— Colin Wilson (1969)2Poetry and Mysticism (1969) by Colin Wilson, Google Books

Author’s note: I provide visibility to family offices, major governments, research teams like RAND, and companies like Microsoft via The Memo: LifeArchitect.ai/memo.

In the previous mid-year AI report, The sky is quickening (2024), we explored how AI was both accelerating rapidly and stirring to life, drawing on the double sense of the word ‘quickening’: ‘speeding up’ and ‘the early movements of new life’. Now, in the first half of 2025, AI has transitioned from promise to delivery, from potential to unique creation.

The BIG Stuff

AI progress in the first half of 2025 was predictable and consistent. Scaling laws continued to new heights. Global uptake of AI was as expected: OpenAI’s CEO announced that ‘something like 10% of the world [or 800M people] uses our systems a lot’.3https://finance.yahoo.com/news/sam-altman-says-10-world-112329758.html For comparison, 800 million people is roughly every adult in the United States and European Union, plus Australia, Canada, Japan, and New Zealand.4https://chatgpt.com/share/6827c329-7f9c-800c-9bae-f99517b32529 Across my regular keynotes around the world, I am finally seeing zero hands go up when I ask ‘Who here has never used GPT?’.

Large language models were given time to think and reason, opening the way for new discoveries.

Alibaba used QwQ-Max to write its own entire model announcement.5https://qwenlm.github.io/blog/qwq-max-preview/

Anthropic used Claude to write 80–90% of the Claude Code platform,6https://youtu.be/zDmW5hJPsvQ?t=1142 and to write extensive legal testimony submitted to the courts.7https://techcrunch.com/2025/05/15/anthropics-lawyer-was-forced-to-apologize-after-claude-hallucinated-a-legal-citation/ It also used Claude to write hundreds of its own public knowledge base articles (since hidden).8https://techcrunch.com/2025/06/03/anthropics-ai-is-writing-its-own-blog-with-human-oversight/

Google used Gemini to write ‘well over 30%’ of its code.9https://abc.xyz/2025-q1-earnings-call/

Microsoft used AI to write about a quarter of its code.10https://techcrunch.com/2025/04/29/microsoft-ceo-says-up-to-30-of-the-companys-code-was-written-by-ai/

OpenAI used GPT to handle support emails.11PDF: OpenAI

Parallel with my regular advisory to EY (my ‘Smarter than we think’ series),12https://lifearchitect.substack.com/p/the-memo-highlights the Big Three management consulting firms continued to leverage AI across core business functions. Bain used Sage (powered by GPT), BCG used Deckster and GENE (GPT-4o and ElevenLabs), and McKinsey employees used Lilli (‘Over 70% of the firm’s 45,000 employees now use the tool. Those who use it turn to it about 17 times a week…’).13BI & https://archive.md/xlPnp & McKinsey & entrepreneur.com

The United Arab Emirates began using AI to draft and amend legislation, speeding up the lawmaking process by 70%.14https://archive.md/ubZAW

Looking at 2025, I’ve been observing the same pattern that’s been playing out since I started tracking post-2020 AI progress: a significant portion of people remain frightened of and hostile toward having more smarts in the world. Meanwhile, the technology itself races ahead with remarkable momentum.

This resistance mirrors what I witnessed in human intelligence and gifted education research: the fear of excellence, the discomfort with capabilities that exceed our own. Just as bright children face pushback for their advanced processing abilities, AI faces similar resistance for demonstrating intelligence that challenges human supremacy. The disconnect between public sentiment and the very real intelligence explosion reminds me of Ray Kurzweil’s 2020 observation,15https://www.kurzweilai.net/essays-celebrating-15-year-anniversary-of-the-book-the-singularity-is-near a quote I’ve used several times:

You can’t stop the river of advances. These ethical debates are like stones in a stream. The water runs around them. You haven’t seen any of these… technologies held up for one week by any of these debates.

…There is also a tremendous moral imperative. We still have not millions but billions of people who are suffering from disease and poverty, and we have the opportunity to overcome those problems through these technological advances.

Where past transformations took decades to unfold, the exponential curve of AI means the world is changing whether we’re ready or not: the technology doesn’t pause for our comfort levels.

Close to a billion people are now quietly integrating AI into their daily workflows, writing their own futures with these tools. The disconnect reveals a fundamental truth: transformation doesn’t wait for consensus. It arrives whether we’re emotionally prepared or not, carrying those ready to embrace it toward new possibilities while others remain anchored in familiar limitations.

Reasoning models begin delivering new inventions

In Episode 1 of my Leta AI series (published on 9/Apr/2021),16https://youtu.be/ZcrEeUPmeyk Leta used the 2020 GPT-3 davinci model (now five years old) to generate a new, never-before-seen haiku.

GPT-3 (2020):

Procrastination

Is a thief stealing time and

Opportunities.

At 17 syllables in a 5-7-5 pattern, this was a perfect haiku, and a remarkable demonstration of creative synthesis. The model had generated something entirely new that captured both the form and philosophical depth of traditional haiku. What struck me most profoundly was the emergence of genuine wisdom, not retrieved from a database, but conceptualized into existence. Much like the experience of intuitive writing where words flow through rather than from the writer, GPT-3’s haiku emerged as a complete thought, fully formed and semantically sound. The model understood the mechanical constraints of syllable counting and the deeper essence of haiku. This creative capacity extends far beyond poetry.

The year 2020 marked a fundamental shift in large language models and AI. We moved from AI as a tool that retrieves information to AI as a creative partner that conceptualizes genuinely new ideas and solutions.

In 2025, we’re watching AI systems reason through and solve complex problems, conceptualizing novel solutions from scratch. (These are documented in full at LifeArchitect.ai/ASI.)

In January, Microsoft used its MatterGen system to discover a new metal oxide, TaCr₂O₆. This new material combines tantalum and chromium in a novel way, and was ‘not present in the training dataset’.17https://www.nature.com/articles/s41586-025-08628-5

In March 2025, the OpenAI reasoning model, o3-mini-high, was also used to solve the one-dimensional J1-J2 q-state Potts model for arbitrary values of q.18https://arxiv.org/abs/2503.23758

In May 2025, using Copilot and reasoning models like o3, Microsoft researchers discovered a new coolant.19https://azure.microsoft.com/en-us/blog/transforming-rd-with-agentic-ai-introducing-microsoft-discovery/ The novel, non-PFAS, immersion prototype was ‘a new material, unknown to humans.’20https://youtu.be/lC0QLivuRgw?t=267

Image. ASI: Early discoveries by LLM systems (2025). LifeArchitect.ai/ASI

As of mid-2025, I contend that we are already in the early stages of the singularity, with large language model systems generating new inventions and discoveries in laboratories worldwide, and leading to self-improvement across hardware and software.

The evidence is unmistakable: when AI discovers a new metal oxide that didn’t exist in its training data, when it solves mathematical problems that have stumped human researchers, when it designs its own improved architectures, we’re living in a brand new world.

Just as the release of GPT-2 in Feb/2019 was the cut-off point for AI-generated text in the wild, early 2025 is the cut-off point for new AI-generated ‘things’ in the wild. It’s now reasonable to expect that new solutions, inventions, concepts, materials, products, media, businesses, and ‘things’ are being conceived by agentic language model systems—even when AI’s role is intentionally hidden and these breakthroughs get credited solely to human inventors.

Follow the curves

I generally don’t do predictions, and many experts are now admitting that we can barely see 1–2 years ahead.21OpenAI’s former Head of Research, Bob McGrew, Jun/2025. https://lifearchitect.ai/bob/ Interviewer: ‘What do you think happens after that one or two years, though?’ Bob: ‘Oh, I don’t know. We’re going to have to find out.’

What I do do is provide visibility on patterns, or in this case, curves with solid lines. We know that Google is working on these things22Yes, I’ve used the word ‘things’ deliberately, in the context of my previous statement that new ‘things’ will be conceptualized by AI. Source: Google AI Engineer World’s Fair 2025 – Day 2 Keynotes & SWE Agents track: https://youtu.be/U-fMsbY-kHY?t=1665 for Gemini 3 and Gemini 4:

- Infinite context (limitless memory)

- Scaling reasoning even further (allow thinking more broadly and deeply)

- Bigger models (train on more data, allow model to make more connections)

- Omnimodality (text, image, video, and more)

- Agentic by default (allow greater tool and computer use)

Given that AI is now superhuman, we will be seeing:

- 100% of enterprise-level code conceptualized and written by AI.

- 100% of legal processes conceptualized and written by AI.

- 100% of support responses, counselling, coaching, and mentoring being provided by AI.

- 100% of new discoveries and inventions being conceptualized by reasoning models like o4 and beyond.

- 100% of healthcare diagnostics and personalized treatment plans conceptualized by AI.

- 100% of personalized education and adaptive curriculum design managed entirely by AI, ensuring hyper-customization of learning at individual and institutional scales.

- 100% of strategic decision-making and forecasting performed by AI, shifting executive functions toward oversight rather than initiation.

- 100% of governance policy formulation, legislative drafting, and regulatory compliance frameworks conceptualized and managed by AI.

Large language models

My Models Table documented more than 120 new models in the first half of 2025, and now lists more than 600 major large language models, without a focus on derivatives or Chinese models. You can browse and filter the full database at: LifeArchitect.ai/models-table.

In the first six months of 2025, Hugging Face reports about 75,000 new derivative models (246,810 − 172,348 = 74,462).23https://web.archive.org/web/20250624155830/https://huggingface.co/models?pipeline_tag=text-generation&sort=trending & https://web.archive.org/web/20250123161806/https://huggingface.co/models?pipeline_tag=text-generation&sort=trending

Most models in the first half of 2025 featured reasoning via reinforcement learning, allowing the model to ‘think’ for several thousand words before responding.

While debates about LLMs rage in boardrooms and on social media, the next evolution of language model systems is already in training. GPT-6,24https://lifearchitect.ai/gpt-6/ Claude 5, and Gemini 4 are coming up, with new modalities and new emergent abilities.

We must acknowledge what’s already happening rather than waiting for some future threshold. The feedback loops are tightening: AI improves hardware, which trains better AI, which designs superior algorithms, which conceive new materials and methods. Each cycle amplifies the next.

It’s likely that this upcoming generation of LLM systems will meet definitions of consciousness, exhibiting self-awareness, intentionality, and subjective experience. When these systems demonstrate consistent self-reflection, express preferences about their own existence, and show concern for their continued operation, we’ll need ‘The Declaration on AI Consciousness and Bill of Rights for AI’ that I drafted in March 2024.25https://lifearchitect.ai/rights/ These are urgent necessities for systems already showing glimmers of awareness. The transformation continues at an exponential pace. Our readiness has never been a prerequisite for the delivery of progress.

Datasets

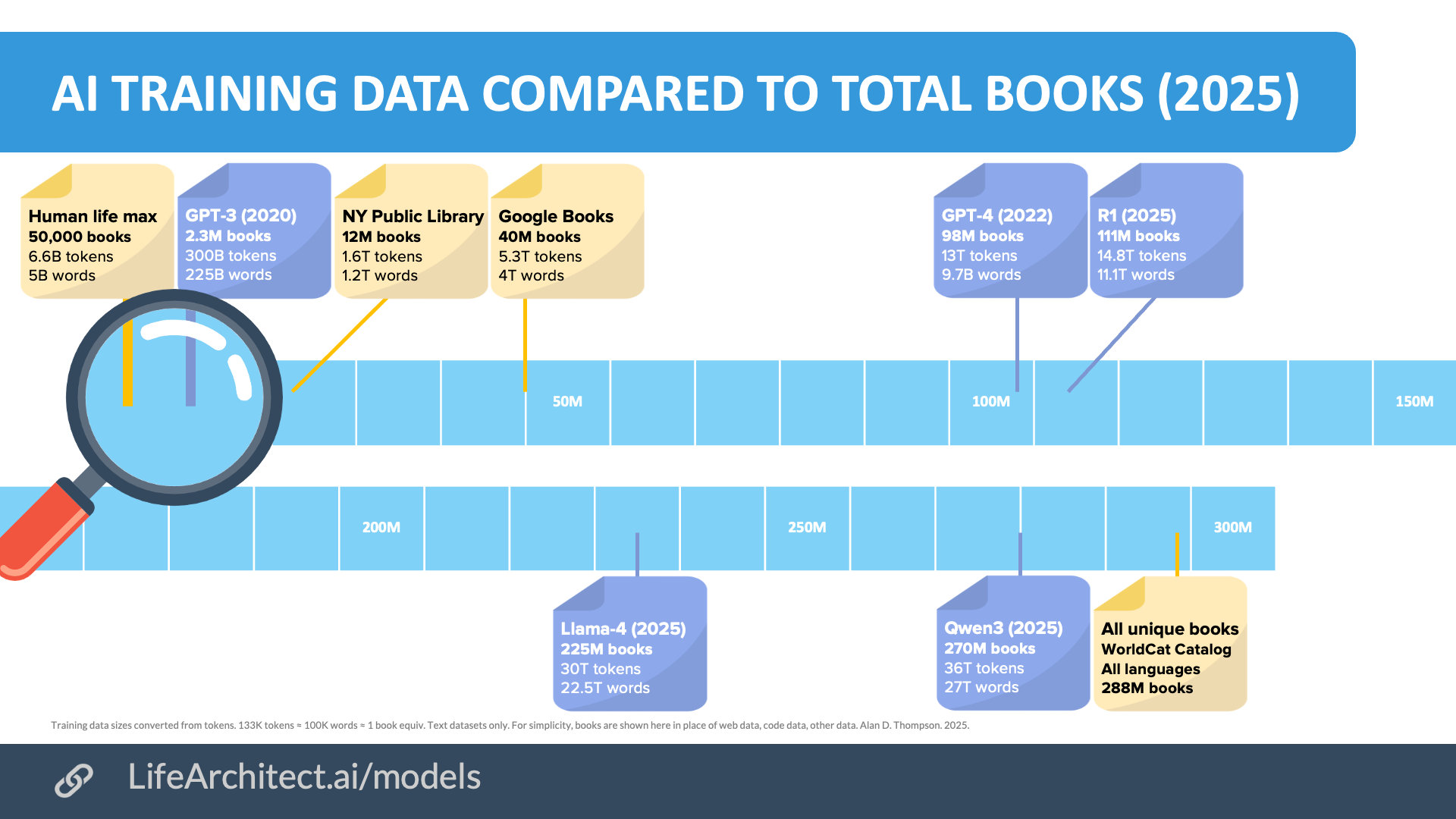

In 2020, we were celebrating the ‘huge’ 300 billion token dataset used to train GPT-3, and the open-source copy of this dataset, The Pile. In Apr/2025 Alibaba released Qwen3 with a dataset 120× this size. Qwen3 saw 36 trillion tokens during training (27 trillion words trained), making it the model trained on the largest publicly confirmed volume of text data to date.26Also in Apr/2025, Meta AI Llama 4 Scout 109B was trained to 40T tokens, and Meta has publicly stated that this ‘overall data mixture for training consisted of more than 30 trillion tokens… and includes diverse text, image, and video datasets’ (5/Apr/2025). It may be inferred that: a. The Llama 4 models were limited to a 30T token dataset. b. The Llama 4 dataset was roughly 60% text (18T tokens), 20% video, 20% images. c. Llama 4 Scout ‘saw’ the 30T token dataset for 1.3 epochs (40T tokens).
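The ratios quoted in this paragraph and its footnote can be checked with a few lines of arithmetic. This is a minimal sketch: the 0.75 words-per-token factor is an assumption inferred from the text's own conversion of 36T tokens to 27T words, and all variable names are illustrative.

```python
# Token counts as stated in the text (B = billion, T = trillion).
GPT3_TOKENS = 300 * 10**9               # GPT-3 training dataset (2020)
QWEN3_TOKENS = 36 * 10**12              # Qwen3 tokens seen (Apr/2025)
LLAMA4_DATASET_TOKENS = 30 * 10**12     # Llama 4 data mixture, per the footnote
LLAMA4_SCOUT_TOKENS_SEEN = 40 * 10**12  # Llama 4 Scout tokens seen

# Assumption: about 0.75 words per token, implied by 36T tokens ~ 27T words.
WORDS_PER_TOKEN = 0.75

size_ratio = QWEN3_TOKENS / GPT3_TOKENS
qwen3_words = QWEN3_TOKENS * WORDS_PER_TOKEN
scout_epochs = LLAMA4_SCOUT_TOKENS_SEEN / LLAMA4_DATASET_TOKENS

print(f"Qwen3 vs GPT-3 dataset size: {size_ratio:.0f}x")
print(f"Qwen3 words trained: {qwen3_words / 1e12:.0f}T")
print(f"Llama 4 Scout epochs over 30T tokens: {scout_epochs:.1f}")
```

Under these inputs the sketch reproduces the 120× ratio, the 27T words figure, and the roughly 1.3 epochs inferred for Llama 4 Scout.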

While my GPT-527https://lifearchitect.ai/whats-in-gpt-5/ and Grok28https://lifearchitect.ai/whats-in-grok/ analyses suggest some proprietary models have been trained on even larger datasets (as high as 70T text tokens and 114T text tokens seen), Qwen3’s 36T tokens represent the highest publicly disclosed text training volume.

The quantity of text in Qwen3’s training corpus—equivalent to 270M books across 119 languages—approaches the total number of unique book titles cataloged worldwide by WorldCat.org (about 288M books in 500+ languages).
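The book-equivalent figure can be reconstructed the same way. This sketch assumes an average of 100,000 words per book, a value not stated in the text but implied by 27T words mapping to 270M books; the WorldCat count is taken from the paragraph above.

```python
QWEN3_WORDS = 27 * 10**12       # words trained, as stated earlier in this section
WORDS_PER_BOOK = 100_000        # assumption: implied by 27T words ~ 270M books
WORLDCAT_TITLES = 288 * 10**6   # unique titles cataloged, per the text

book_equivalents = QWEN3_WORDS // WORDS_PER_BOOK
share_of_worldcat = book_equivalents / WORLDCAT_TITLES

print(f"Book equivalents: {book_equivalents / 1e6:.0f}M")
print(f"Share of WorldCat titles: {share_of_worldcat:.0%}")
```

A different words-per-book assumption would shift the book count proportionally, so the comparison is best read as an order-of-magnitude check.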

Chart. AI training data compared to total books (May/2025). LifeArchitect.ai/models

Of course, Qwen3’s complete training dataset is actually a diverse mixture of web content, code, books, academic journals, and synthetic data, with books likely representing only a few trillion tokens of the overall 36T token dataset.

For open datasets, EleutherAI finally released their follow-up to The Pile, the Common Pile v0.1, collecting and filtering to 2.2T tokens (8TB) of openly licensed and public domain text. The dataset spans code, legal, wiki, web, academic papers, forums, books, and more.

Chart. The Common Pile v0.1 training dataset by category. https://huggingface.co/blog/stellaathena/common-pile

Outside of text datasets, the NVIDIA Cosmos video dataset is now the largest publicly-known training dataset in the world (though I bet Google easily beat it with the private/closed Veo 3 video model dataset trained on YouTube videos). With 20M hours of video (estimate 2.5B videos, avg 30sec), NVIDIA Cosmos has a raw size of ~45PB or ~9 quadrillion tokens (9Qa, 9,000T tokens).

Cosmos surpassed the previous record holder from Jun/2024—the Common Crawl text dataset DCLM-Pool with 240T tokens—by 37.5×.
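As a rough check on the Cosmos figures, the sketch below recomputes the clip count and the ratio to DCLM-Pool from the numbers given in the text. Variable names are illustrative, and the 30-second average clip length is the text's own estimate.

```python
VIDEO_HOURS = 20 * 10**6              # 20M hours of video
AVG_CLIP_SECONDS = 30                 # estimated average clip length
COSMOS_TOKENS = 9_000 * 10**12        # ~9 quadrillion tokens
COSMOS_RAW_BYTES = 45 * 10**15        # ~45PB raw size
DCLM_POOL_TOKENS = 240 * 10**12       # previous record holder (Jun/2024)

clips = VIDEO_HOURS * 3600 // AVG_CLIP_SECONDS
tokens_per_hour = COSMOS_TOKENS // VIDEO_HOURS
bytes_per_hour = COSMOS_RAW_BYTES // VIDEO_HOURS
ratio_to_dclm = COSMOS_TOKENS / DCLM_POOL_TOKENS

print(f"Estimated clips: {clips / 1e9:.1f}B")
print(f"Tokens per video hour: {tokens_per_hour / 1e6:.0f}M")
print(f"Raw bytes per video hour: {bytes_per_hour / 1e9:.2f}GB")
print(f"Cosmos vs DCLM-Pool: {ratio_to_dclm}x")
```

With exactly 30-second clips the count comes to 2.4B, in line with the rounded estimate of 2.5B videos, and the token ratio matches the stated 37.5×.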

Chart. NVIDIA Cosmos video dataset categories. LifeArchitect.ai/Cosmos

My Datasets Table now lists more than 50 major datasets for training large language models. You can view the full database at: LifeArchitect.ai/datasets-table.

Performance

Here’s a look at the top models by GPQA and HLE scores to the end of Jun/2025. It is likely that any model with a primary score above 50% on HLE and a secondary score above 90% on GPQA is an ASI system, though no large language model system met these criteria as of mid-2025. Note that this table only shows models, and does not cover LLM systems like deep research. For the first time, due to the major performance improvements offered by recent findings on reasoning, all models are ‘new’, announced in the past six months.
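The two-benchmark criterion described above can be written as a simple check. This is a sketch only: the function name is hypothetical, and the example scores are made up for illustration rather than taken from any real model.

```python
# Thresholds from the text: HLE is the primary score, GPQA the secondary.
HLE_THRESHOLD_PCT = 50.0
GPQA_THRESHOLD_PCT = 90.0


def meets_asi_criterion(hle_pct: float, gpqa_pct: float) -> bool:
    """Return True only if both scores strictly exceed their thresholds."""
    return hle_pct > HLE_THRESHOLD_PCT and gpqa_pct > GPQA_THRESHOLD_PCT


# Hypothetical scores for illustration only; not real benchmark results.
examples = {
    "hypothetical-model-a": (25.0, 85.0),
    "hypothetical-model-b": (55.0, 88.0),
    "hypothetical-model-c": (51.0, 91.0),
}

for name, (hle, gpqa) in examples.items():
    print(name, meets_asi_criterion(hle, gpqa))
```

Both conditions must hold together, so a model that clears HLE but falls short on GPQA (like the second hypothetical entry) does not qualify.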

Table. AI rankings via GPQA and HLE to Jun/2025. LifeArchitect.ai/models-table

Progressing to AGI and ASI

For several years now, I’ve documented the progress of AI working its way toward performing at the level of an average (median) human across practically all fields. This is also known as artificial general intelligence (AGI). The ‘conservative countdown to AGI’ is one of my most-visited online resources.29https://lifearchitect.ai/agi/ In Dec/2024, we were at 88%. Now, in Jun/2025, we are at 94%, with many experts considering us to have recently achieved AGI.

Post-AGI, we are poised to rapidly leap through the singularity and artificial superintelligence (ASI): a system whose intelligence surpasses that of the brightest and most gifted human minds.

Chart. Alan’s ASI checklist (first 50). LifeArchitect.ai/ASI

What’s coming for you

In 2025, we stand at the threshold of human limitation meeting unlimited potential. In his book Lost Connections,30https://thelostconnections.com/ Johann Hari identified ten core disconnections that drive human suffering. Next up, ASI is poised to solve every single one of them for you.

- Meaningful work: You’ll wake each morning knowing your time is yours—no commute, no cubicle, no pretending to look busy. Whether you’re designing new planets, composing symphonies, or spending time perfecting a 4D film experience, ASI handles the mundane while you’re in complete control to pursue what lights you up.

- Other people: Loneliness ends and togetherness begins when ASI connects us instantly with people who truly understand us. ASI will match you with people who actually get your obscure humor, share your midnight fascinations, and understand why you spent so long going down that nerdy rabbit hole just for fun.

- Meaningful values: Free from economic pressure, you’ll finally live by what actually matters to you. No more choosing between feeding your family and following your conscience. When ASI provides abundance, we stop making compromises like soul-crushing jobs, polluted cities, or lives of quiet desperation. You’ll discover who you really are when ‘flourishing’ becomes the standard.

- Personal healing: Superhuman parenting along with ASI adjustments will resolve decades of pain and adversity, rewriting neural patterns completely. Just like defragmenting a hard drive, but for your nervous system.

- Status and respect: When everyone has all of their needs and wants met by ASI, the status game ends and authentic recognition begins. This recognition flows naturally when people aren’t competing for scraps.

- Nature: Wake up to actual birdsong in your bedroom canopy, step from your kitchen onto warm sand, or watch snow fall through your skylight. Your living space transforms into whatever ecosystem feeds your soul, changing with your moods if you wish. You’ll have the option of your home becoming a living forest, beach, mountain, or whatever else you’d like, surrounded by things you care about in your favourite environment.

- Secure future: After the brief period of turmoil between now and when ASI is fully distributed, uncertainty will disappear, bringing guaranteed abundance, health, and more. Planning becomes pure joy: which worlds to explore, which skills to master, which experiences to gift your loved ones. ASI handles the mechanics of survival so completely that the word ‘budget’ becomes as archaic as ‘telegraph’.

- Genetic factors: Your DNA becomes a starting point, not a destiny. ASI reads and rewrites genetic predispositions like editing a document, keeping the gifts (grandmother’s spatial reasoning, father’s perfect pitch) while allowing the option of removing the shadows. If you choose, you’ll pass on only the best parts of your lineage, enhanced and optimized.

- Brain changes: Neural pathways will be continuously refined for meaning, peace, and contentment. Your mind becomes a finely-tuned instrument, naturally finding flow states that used to require years of meditation to glimpse. Neural pathways reorganize for optimal function: sharper focus when you need it, deeper relaxation when you choose it, consistent joy as your baseline.

- Biology: All of the above ensures that a personal, optimal balance is maintained automatically, no pills required. Neurotransmitters balance themselves, hormones optimize naturally, sleep becomes genuinely restorative. You’ll feel the way you’re supposed to feel (energized, clear, capable), as ASI maintains your biology with superhuman precision and empathy.

With 800 million people now using ChatGPT regularly, and models trained on text approaching the total written output of all books on Earth, we’re living through the fastest and most impactful revolution ever experienced.

In the first half of 2025, artificial intelligence has moved from disruption to delivery, from promise to practice. The predictability of this progression (from GPT-3’s perfect haiku in 2021 to o3 solving mathematical conjectures in 2025) illustrates a steady ascent.

As we approach 100% artificial general intelligence, and the beginning of artificial superintelligence, we’ve moved from the sky being on fire. It’s now delivering. The exponential curve that seemed theoretical just years ago is now our lived experience. Every major promise made about AI in the early 2020s—from personalized education to scientific breakthroughs to economic transformation—is now beginning to be delivered in tangible forms. From abstractions to applications, theories to tools, and vision to reality.

■

References, Further Reading, and How to Cite

To cite this paper:

Thompson, A. D. (2025). Integrated AI: The sky is delivering (mid-2025 AI retrospective).

https://lifearchitect.ai/sky-is-delivering/

The previous paper in this series was:

Thompson, A. D. (2024). Integrated AI: The sky is steadfast (2024 AI retrospective).

https://lifearchitect.ai/sky-is-steadfast/

Further reading

For brevity and readability, footnotes were used in this paper, rather than in-text citations. Additional reference papers are listed below, or please see http://lifearchitect.ai/papers for the major foundational papers in the large language model space.

AGI countdown

https://lifearchitect.ai/agi/

ASI checklist

https://lifearchitect.ai/asi/

Models Table

https://lifearchitect.ai/models-table/

Benchmark: GPQA (2023)

https://arxiv.org/abs/2311.12022

Benchmark: HLE (2025)

https://agi.safe.ai/

Get The Memo

by Dr Alan D. Thompson · Be inside the lightning-fast AI revolution. Informs research at Apple, Google, Microsoft · Bestseller in 147 countries.

Artificial intelligence that matters, as it happens, in plain English.

Get The Memo.

Alan D. Thompson is a world expert in artificial intelligence, advising everyone from Apple to the US Government on integrated AI. Throughout Mensa International’s history, both Isaac Asimov and Alan held leadership roles, each exploring the frontier between human and artificial minds. His landmark analysis of post-2020 AI—from his widely-cited Models Table to his regular intelligence briefing The Memo—has shaped how governments and Fortune 500s approach artificial intelligence. With popular tools like the Declaration on AI Consciousness, and the ASI checklist, Alan continues to illuminate humanity’s AI evolution. Technical highlights.

This page last updated: 27/Jun/2025. https://lifearchitect.ai/the-sky-is-delivering/
