The Memo.

Alan D. Thompson

February 2023 (updated May 2023 with PaLM 2 detail + PaLM 2 dataset estimate, updated Dec 2023 with Gemini Pro)

Summary

Timeline to Google Bard

| Date | Milestone |

| 27/Jan/2020 | Initial Google Meena 2.6B paper published to arXiv. |

| 18/May/2021 | Google LaMDA 1 announced on the Google blog. |

| 20/Jan/2022 | LaMDA 2 paper published to arXiv. |

| 21/Jan/2022 | LaMDA 2 announced on the Google blog. |

| 11/May/2022 | LaMDA 2 demonstrated at Google I/O 22 (timecode). |

| 25/Aug/2022 | AI Test Kitchen (LaMDA 2) opened to US public testers (link). |

| 6/Feb/2023 | Bard announced on the Google blog. |

| 8/Feb/2023 | Bard demonstrated at Google Live in Paris 2023 (timecode). |

| Feb/2023-Mar/2023 | Competitors launch: 7/Feb/2023: Microsoft launches Bing Chat (GPT-3.5) in Edge. 10/Feb/2023: Opera adds ChatGPT model to browser. 28/Feb/2023: Microsoft adds Bing Chat (GPT-3.5) to Windows 11 taskbar. 2/Mar/2023: Brave adds Brave AI model (BART 140M/DeBERTa 1.5B fine-tuned to search results). 8/Mar/2023: DuckDuckGo’s DuckAssist using OpenAI + Anthropic models via Wikipedia. 9/Mar/2023: Discord builds in chat (GPT-3.5) to their platform. |

| 21/Mar/2023 | Bard released to public, announced on the Google blog. |

| 10/May/2023 | Bard now powered by PaLM 2. |

| 13/Jul/2023 | Significant Bard updates including Google Lens integration. |

| 6/Dec/2023 | Bard now powered by Gemini Pro with web access and different fine-tuning (link). |

Table. Timeline from Google Meena to Google Bard.

Google announcement on 6/Feb/2023 highlighted their aims with this model:

We’ve been working on an experimental conversational AI service, powered by LaMDA, that we’re calling Bard. And today, we’re taking another step forward by opening it up to trusted testers ahead of making it more widely available to the public in the coming weeks.

Bard seeks to combine the breadth of the world’s knowledge with the power, intelligence and creativity of our large language models. It draws on information from the web to provide fresh, high-quality responses. Bard can be an outlet for creativity, and a launchpad for curiosity, helping you to explain new discoveries from NASA’s James Webb Space Telescope to a 9-year-old, or learn more about the best strikers in football right now, and then get drills to build your skills. (- Google blog 6/Feb/2023)

Dataset for PaLM 2 via Infiniset (estimate)

‘Infiniset’ is different to Google PaLM’s 780B-token dataset (see my Google Pathways report).

Infiniset contains 1.56T words (1,560B words), which Google tokenized to 2.81T tokens (2,810B tokens). This is on par with DeepMind’s MassiveText dataset of 2.35T tokens (2,350B tokens) in 10.5TB of data. (see my What’s in my AI? paper).

Assuming the same tokenization as MassiveText, Infiniset totals 12.55TB of text data.

The Infiniset dataset is made up of:

| Count | Dataset | Percentage tokens | Raw Size (GB) | Tokens (B) |

| 1 | Dialogues from public forums (assume Reddit, Twitter) | 50% | 6,277 | 1,405 |

| 2 | C4 (Common Crawl) | 12.5% | 1,569 | 351 |

| 3 | Code documents from sites related to programming like Q&A sites, tutorials, etc (assume GitHub, Stack Overflow, Hacker News) | 12.5% | 1,569 | 351 |

| 4 | Wikipedia (English)* | 12.5% | 1,569 | 351 |

| 5 | English web documents (maybe Google Books data) | 6.5% | 816 | 182 |

| 6 | Non-English web documents (maybe Google Books data) | 6.5% | 816 | 182 |

| Total | 100.5% | 12,616GB | 2,822B |

* English Wikipedia is about 60GB uncompressed text in 2023. The history pages can be larger than 14TB additional uncompressed text. It is not clear how and why Google extracted 1,500GB instead of the standard 6-13GB of cleaned and filtered Wikipedia text data used by most other AI labs.

Table. Google Infiniset dataset. Rounded. Disclosed in bold. Determined in italics. For similar models, see my What’s in my AI paper.

Dataset for PaLM 2 via MassiveText (estimate)

The Google PaLM 2 dataset could potentially be made up of some of DeepMind’s MassiveText (multilingual) 5T-token dataset (see the Improving language models by retrieving from trillions of tokens paper and my What’s in my AI? paper).

Not disclosed. In the PaLM 2 paper, Google said only that PaLM 2 is ‘composed of a diverse set of sources: web documents, books, code, mathematics, and conversational data… significantly larger than the corpus used to train PaLM… PaLM 2 is trained on a dataset that includes a higher percentage of non-English data’

Massive team. The data collection for PaLM 2 was a huge undertaking lead by Siamak Shakeri. Dataset contributions were from a team of 18 Google staff.

Please note that the following table is ‘best guess’ by Alan (not confirmed by Google), and is based on available information from the state-of-the-art DeepMind MassiveText (multilingual) + 1,000B tokens of discussion.

Update 17/May/2023: CNBC confirms the PaLM 2 actual dataset size of 3.6T (3,600B) tokens.

| Count | Dataset | Percentage tokens | Raw Size (GB) | Tokens (B) |

| 1 | Books (en) | 68.11% | 12,853GB | 3,423B |

| 2 | Discussion (multilingual)* | x% | 3,750GB | 1,000B* |

| 3 | Web: C4 (multilingual) | 19.45% | 3,656GB | 977B |

| 4 | Code: Github | 7.46% | 2,754GB | 375B |

| 5 | News (en) | 4.71% | 888GB | 237B |

| 6 | Wikipedia (multilingual) | 0.26% | 48GB | 13B |

| Totals | 23,949GB (23.9TB) | 6,000B (6T) |

* Alan’s estimate only.

Table. MassiveText multilingual dataset estimates. Rounded. Disclosed in bold (from DeepMind’s MassiveText multilingual dataset). Determined in italics. For similar models, see my What’s in my AI paper.

Deprecated Bard LaMDA 2 info

We’re releasing it initially with our lightweight model version of LaMDA. This much smaller model requires significantly less computing power, enabling us to scale to more users, allowing for more feedback. We’ll combine external feedback with our own internal testing to make sure Bard’s responses meet a high bar for quality, safety and groundedness in real-world information. We’re excited for this phase of testing to help us continue to learn and improve Bard’s quality and speed. (- Google blog 6/Feb/2023)

While Google explained that Bard was so named because it is a ‘storyteller‘, given the parameter count of 2B, I wonder if the name was also inspired by the famous monologue from The Bard’s Play (wiki).

LaMDA 2 Paper

The LaMDA 2 paper gives very specific details about the model. Dr Ray Kurzweil is one of the 60 co-authors of the paper.

The model is fine-tuned on human feedback, but they do not use that term in the paper:

We quantify safety using a metric based on an illustrative set of human values, and we find that filtering candidate responses using a LaMDA classifier fine-tuned with a small amount of crowdworker-annotated data offers a promising approach to improving model safety.

Dataset for LaMDA 2

The Google LaMDA 2 dataset is called ‘Infiniset’, and is different to Google PaLM’s 780B-token dataset (see my Google Pathways report).

Infiniset contains 1.56T words (1,560B words), which Google tokenized to 2.81T tokens (2,810B tokens). This is on par with DeepMind’s MassiveText dataset of 2.35T tokens (2,350B tokens) in 10.5TB of data. (see my What’s in my AI? paper).

Assuming the same tokenization as MassiveText, Bard’s Infiniset totals 12.55TB of text data.

LaMDA 2 and Bard’s Infiniset dataset is made up of:

| Count | Dataset | Percentage tokens | Raw Size (GB) | Tokens (B) |

| 1 | Dialogues from public forums (assume Reddit, Twitter) | 50% | 6,277 | 1,405 |

| 2 | C4 (Common Crawl) | 12.5% | 1,569 | 351 |

| 3 | Code documents from sites related to programming like Q&A sites, tutorials, etc (assume GitHub, Stack Overflow, Hacker News) | 12.5% | 1,569 | 351 |

| 4 | Wikipedia (English)* | 12.5% | 1,569 | 351 |

| 5 | English web documents (maybe Google Books data) | 6.5% | 816 | 182 |

| 6 | Non-English web documents (maybe Google Books data) | 6.5% | 816 | 182 |

| Total | 100.5% | 12,616GB | 2,822B |

* English Wikipedia is about 60GB uncompressed text in 2023. The history pages can be larger than 14TB additional uncompressed text. It is not clear how and why Google extracted 1,500GB instead of the standard 6-13GB of cleaned and filtered Wikipedia text data used by most other AI labs.

Table. Google LaMDA 2/Bard dataset. Rounded. Disclosed in bold. Determined in italics. For similar models, see my What’s in my AI paper.

Dataset size comparison for interest:

| Date | Model + parameter count | Tokens |

|---|---|---|

| Jan/2020 | Google Meena 2.6B (LaMDA predecessor) | 61B/10T trained (164x epochs) |

| May/2020 | OpenAI GPT-3 175B | 499/300B trained |

| Feb/2022 | Google LaMDA 2 137B | 2.81T |

| Mar/2022 | DeepMind Chinchilla 70B | 2.35T/1.4T trained |

| Apr/2022 | Google PaLM 540B | 780B/780B trained |

Table. Dataset size comparison between LaMDA 2 and other current models.

Web Access

Like Microsoft Bing Chat, Bard seems to have web access during inference. From the LaMDA 2 paper:

[For training,] We create a toolset (TS) that includes an information retrieval system… TS takes a single string as input and outputs a list of one or more strings. Each tool in TS expects a string and returns a list of strings… the information retrieval system can take “How old is Rafael Nadal?”, and output [“Rafael Nadal / Age / 35”]. The information retrieval system is also capable of returning snippets of content from the open web, with their corresponding URLs. The TS tries an input string on all of its tools, and produces a final output list of strings by concatenating the output lists from every tool in the following order: calculator, translator, and information retrieval system. A tool will return an empty list of results if it can’t parse the input (e.g., the calculator cannot parse “How old is Rafael Nadal?”), and therefore does not contribute to the final output list…We decided to fine-tune our language model to provide attributions for its responses by looking up its claims using a toolset…

The interface to the set of tools used here is identical to the service used by the algorithm at inference time. Given a general text query, the information retrieval system returns a set of brief, text-only snippets in rank order. Snippets of open-web content include URLs for their source… When the user has finished running queries, they have the opportunity to rewrite the model’s statement to include well-sourced claims. If they used open-web content, we ask them to cite the URLs needed to support any responses which contain information pertaining to the external world. URLs can be appended to the end of the message, or if the context warrants it, they can be attached inline to particular words in the response using Markdown format.

We then fine-tune LaMDA to perform two tasks… At inference time, the model’s output is directed to the information retrieval system or to the user, depending on whether the first generated string is ‘TS’ or ‘User’.

Query generation is entirely based on the model fine-tuning, and there is no heuristic component. The model’s output determines the number of queries—the research loop continues until the model generates output addressed to the user—but we impose a maximum at inference time to eliminate the possibility of an infinite loop. The maximum is a parameter of the serving model. In the current example, it is set to four.

Availability

The LaMDA model is closed, with some very limited availability of filtered outputs via Google’s AI Test Kitchen.

Google Bard is being made available to users in Q1 2023.

No further information is yet available to mid-Feb/2023.

Google Bard announcement video (8/Feb/2023)

Timecode 13:23

Google Bard examples

Prompt: I want to buy a new car for my family. What should I consider?

Response:

- Your budget: How much can you afford to spend on a new car? Keep in mind that the initial purchase price is just the beginning. You’ll also need to factor ongoing costs like fuel, maintenance, and insurance.

- Seating: How many people will be riding in the car on a regular basis? Do you need a car with three rows of seats, or will two rows be sufficient?

- Features: What features are important to you? Do you want a car with a sunroof, leather seats, or parking cameras?

- Safety: Safety is always a top priority when buying a new car. Look for cars with good crash test ratings and features like airbags.

- Fuel type: Do you want a gasoline-powered car, a diesel-powered car, or an electric car? Each type has its own pros and cons.

Ultimately, the best car for your family is the one that meets your needs and fits your budget. Test drive a few different models to find the perfect one for you.

Prompt: Help me understand the pros/cons of buying an electric one

Response:

Pros:

- Electric cars produce zero emissions when driving, which is better than a gas powered car for the environment. Gas cars produce emissions that contribute to climate change.

- They are generally cheaper to operate. They don’t require oil changes or tune-ups, and they have fewer moving parts, so there’s less that can go wrong.

- In some places, electric cars are eligible for tax credits.

- Electric cars are becoming more popular, so there’s a growing network of charging stations.

Cons:

- Electric cars have a more limited driving range, depending on the size of battery.

- Charging an electric car can take a while, especially if you use a public charging station.

- The initial cost of an electric car is usually higher than the initial cost of an equivalent gasoline car.

- Right now, electric cars are not as readily available as gasoline cars. There are fewer models available and they are not as widely available at dealerships.

Prompt: I think our first longer drive will be from San Francisco to Santa Cruz, can you suggest a few stops along the way?

Response: Sure, here’s a sample itinerary for stops between San Francisco to Santa Cruz.

- Half Moon Bay features several beautiful beaches, including Gray Whale Cove and Cowell Ranch State Beach. It’s also known for its local art galleries and breweries.

- Pescadero is a bit farther south. It’s known for its farm and ranch community. Stop by Pigeon Point Lighthouse to catch a great view. If you want to stay overnight to extend your road trip, there are many quaint bed and breakfasts available here.

- Redwood forests are also an option. As you approach Santa Cruz, check which Redwood State Parks are open for a walk or hike.

- The Santa Cruz Beach Boardwalk is like an amusement park on the beach, with rides, games, and food. Many people come to Santa Cruz to surf, and you can find lessons and equipment rentals along the beach.

This is just one possible plan for a road trip from San Francisco to Santa Cruz. There are many other ways to plan this drive, so you can adjust this plan to fit your interests and time constraints.

Google Bard updates

11/Apr/2023: Bard updates page Google launches official Bard updates page bard.google.com/updates

21/Mar/2023: Bard released to US and UK users via waitlist: bard.google.com

14/Mar/2023: Big Bard [probably 137B]. ‘Googlers built “Big Bard,” as the company refers to it internally, on the same language model as Bard, known as LaMDA, but with seemingly larger parameters that make it appear more intelligent… An earlier version, tested internally, was named, “Apprentice Bard.”‘ — via Business Insider.

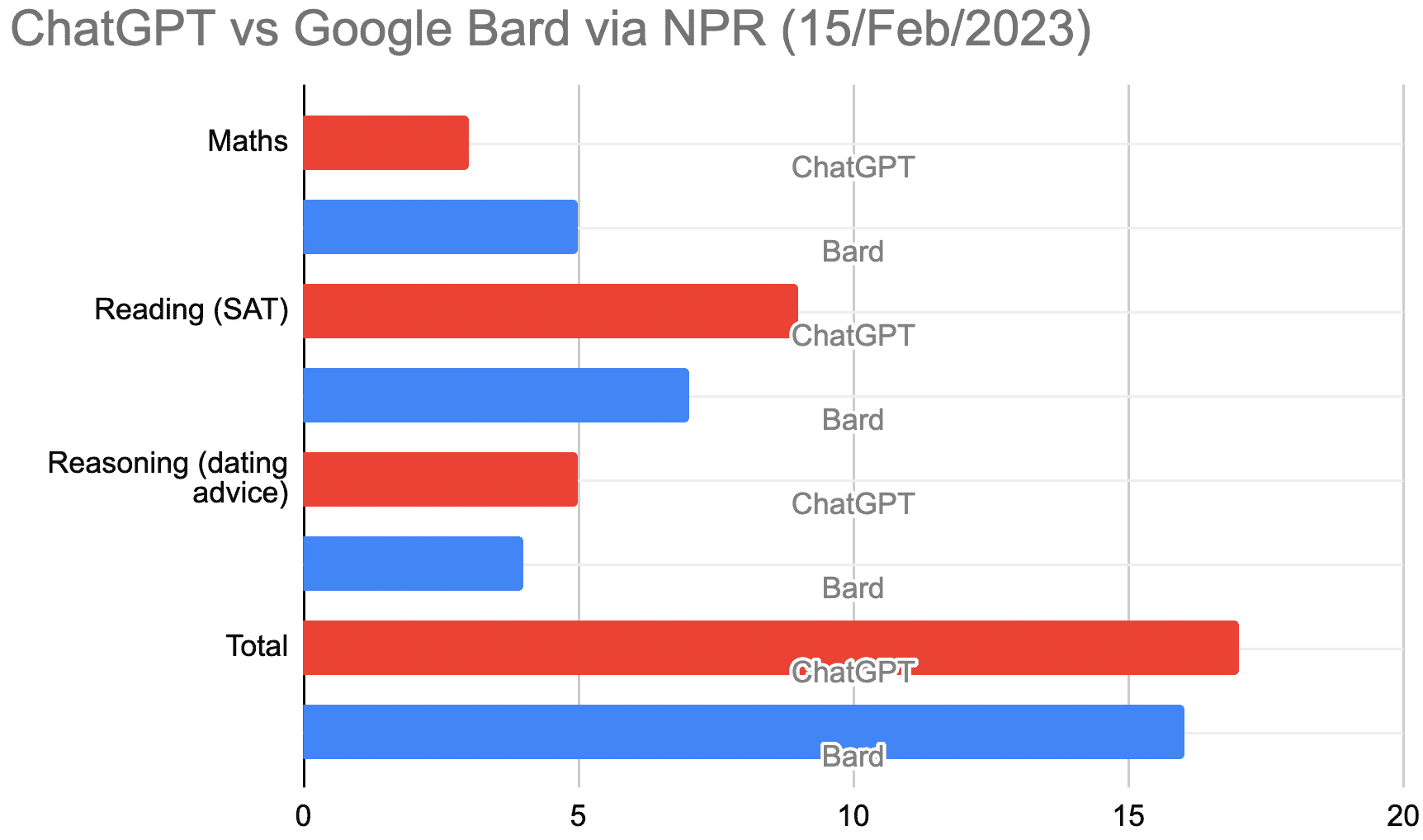

15/Feb/2023: Google Bard scored 66% higher than ChatGPT for maths (5 points vs 3 points) in an informal assessment by NPR assisted by Google engineers (15/Feb/2023), but ChatGPT was slightly better overall.

ChatGPT v Google Bard via NPR: View the data (Google sheets).

Header image courtesy Drew Fairweather/Married To The Sea. You guys are awesome!

Get The Memo

by Dr Alan D. Thompson · Be inside the lightning-fast AI revolution.Bestseller. 10,000+ readers from 142 countries. Microsoft, Tesla, Google...

Artificial intelligence that matters, as it happens, in plain English.

Get The Memo.

Dr Alan D. Thompson is an AI expert and consultant, advising Fortune 500s and governments on post-2020 large language models. His work on artificial intelligence has been featured at NYU, with Microsoft AI and Google AI teams, at the University of Oxford’s 2021 debate on AI Ethics, and in the Leta AI (GPT-3) experiments viewed more than 4.5 million times. A contributor to the fields of human intelligence and peak performance, he has held positions as chairman for Mensa International, consultant to GE and Warner Bros, and memberships with the IEEE and IET. Technical highlights.

Dr Alan D. Thompson is an AI expert and consultant, advising Fortune 500s and governments on post-2020 large language models. His work on artificial intelligence has been featured at NYU, with Microsoft AI and Google AI teams, at the University of Oxford’s 2021 debate on AI Ethics, and in the Leta AI (GPT-3) experiments viewed more than 4.5 million times. A contributor to the fields of human intelligence and peak performance, he has held positions as chairman for Mensa International, consultant to GE and Warner Bros, and memberships with the IEEE and IET. Technical highlights.This page last updated: 9/Feb/2024. https://lifearchitect.ai/bard/↑