- Announced Feb/2023.

- Open-sourced Apr/2023.

- MOSS is English-first, with limited Chinese. Fudan said it was ‘trained on 300 billion English words and only 30 billion Chinese words.’

- Fewer parameters than ChatGPT (Fudan: ‘tens of billions of parameters’ and ‘parameters of MOSS are an order of magnitude smaller than that of ChatGPT’; MOSS=16B vs ChatGPT=175B).

- Chinchilla-aligned. Assuming 1 token ≈ 0.7 words (so 1 word ≈ 1.3 tokens), the 330B words (300B English + 30B Chinese) × 1.3 ≈ 430B tokens. Training 16B parameters on 430B tokens gives around 27 tokens per parameter, or 27:1 (compared to GPT-3’s 1.7:1 and Chinchilla’s 20:1).

- The dataset may be unlike those of Chinese models such as Wudao and PanGu Alpha, and more like that of Tsinghua’s GLM-130B, which prioritised English data from The Pile.

- Aligned with Anthropic’s HHH values: helpful, harmless, and honest.

- Named after the character of MOSS from The Wandering Earth series, 流浪地球 liúlàng dìqiú (wiki, IMDB). The character of MOSS is similar to AUTO from WALL-E or HAL 9000 from 2001: A Space Odyssey.

- Major bandwidth issues at launch: ‘MOSS instantly went viral on Chinese social media, generating tens of millions of hits on China’s Twitter-like Weibo’ (via Reuters, 21/Feb/2023).

- Public interface will be: https://moss.fastnlp.top/

- More info: https://txsun1997.github.io/blogs/moss.html
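The tokens-per-parameter arithmetic above can be checked with a short Python sketch. It assumes the rough 1.3 tokens-per-word conversion quoted above (MOSS’s real tokenizer may split Chinese text quite differently), and takes the GPT-3 (300B tokens, 175B parameters) and Chinchilla (1.4T tokens, 70B parameters) training figures from their published papers rather than from this page. The helper name `tokens_per_param` is hypothetical, for illustration only.

```python
# Word counts are Fudan's figures; TOKENS_PER_WORD is a rough rule of thumb
# (an assumption), not a measurement from MOSS's own tokenizer.
ENGLISH_WORDS = 300e9
CHINESE_WORDS = 30e9
TOKENS_PER_WORD = 1.3

MOSS_PARAMS = 16e9
CHATGPT_PARAMS = 175e9  # GPT-3 size, used here as the ChatGPT figure


def tokens_per_param(tokens: float, params: float) -> float:
    """Hypothetical helper: training tokens per model parameter."""
    return tokens / params


total_words = ENGLISH_WORDS + CHINESE_WORDS      # 330B words
moss_tokens = total_words * TOKENS_PER_WORD      # about 429B tokens
english_share = ENGLISH_WORDS / total_words      # fraction of words in English

# Reference points from the GPT-3 and Chinchilla papers (assumed, not from this page).
ratios = {
    "MOSS": tokens_per_param(moss_tokens, MOSS_PARAMS),
    "GPT-3": tokens_per_param(300e9, 175e9),
    "Chinchilla": tokens_per_param(1.4e12, 70e9),
}
size_gap = CHATGPT_PARAMS / MOSS_PARAMS          # how many times larger ChatGPT is

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}:1")
print(f"English share of words: {english_share:.0%}")
print(f"ChatGPT/MOSS parameter ratio: {size_gap:.1f}x")
```

Running it puts MOSS at about 26.8:1 (rounding to the ~27:1 above), GPT-3 at 1.7:1 and Chinchilla at 20.0:1, shows English at roughly 91% of the training words, and gives a ChatGPT/MOSS size ratio of about 10.9x, consistent with ‘an order of magnitude smaller’.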

Compare the MOSS chatbot with:

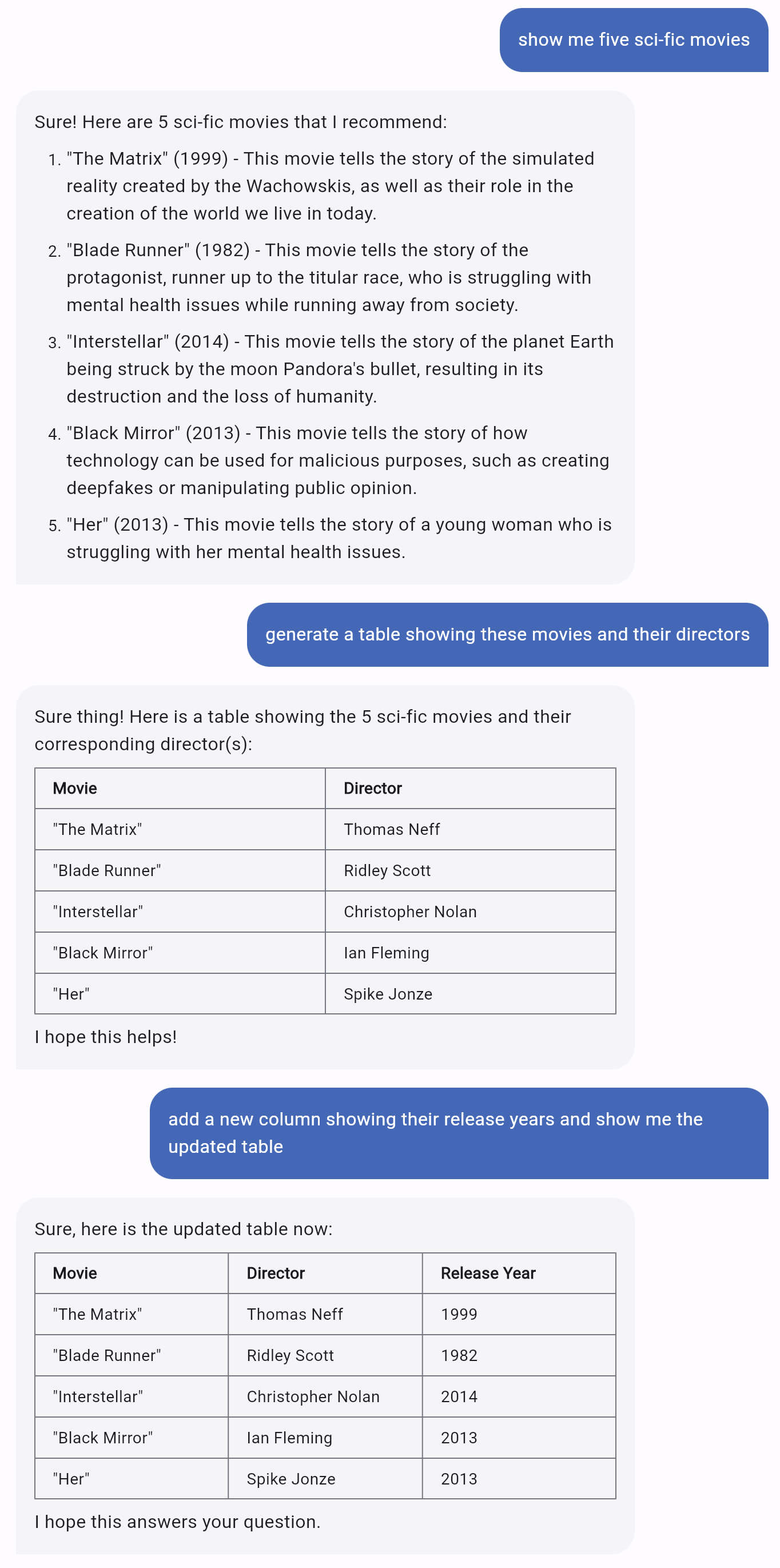

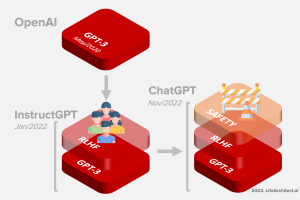

- OpenAI ChatGPT (Nov/2022).

- DeepMind Sparrow (Sep/2022).

- Anthropic Claude (Dec/2022).

- Bing Chat (Sydney) (Feb/2023).

- Google Bard (Feb/2023).


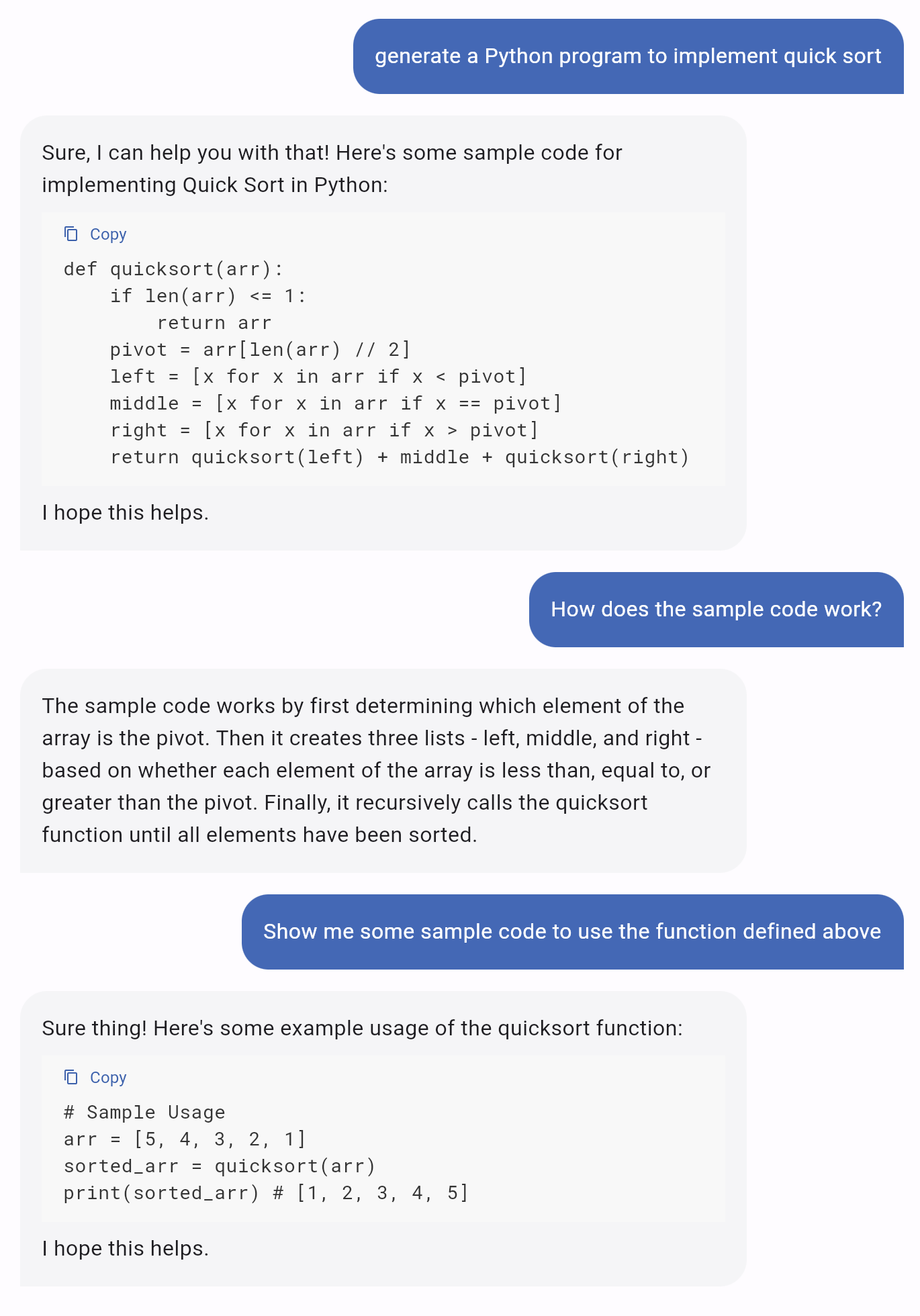

MOSS examples


Dr Alan D. Thompson is an AI expert and consultant, advising Fortune 500s and governments on post-2020 large language models. His work on artificial intelligence has been featured at NYU, with Microsoft AI and Google AI teams, at the University of Oxford’s 2021 debate on AI Ethics, and in the Leta AI (GPT-3) experiments viewed more than 4.5 million times. A contributor to the fields of human intelligence and peak performance, he has held positions as chairman for Mensa International, consultant to GE and Warner Bros, and memberships with the IEEE and IET. Technical highlights.

This page last updated: 9/Feb/2024. https://lifearchitect.ai/moss/