
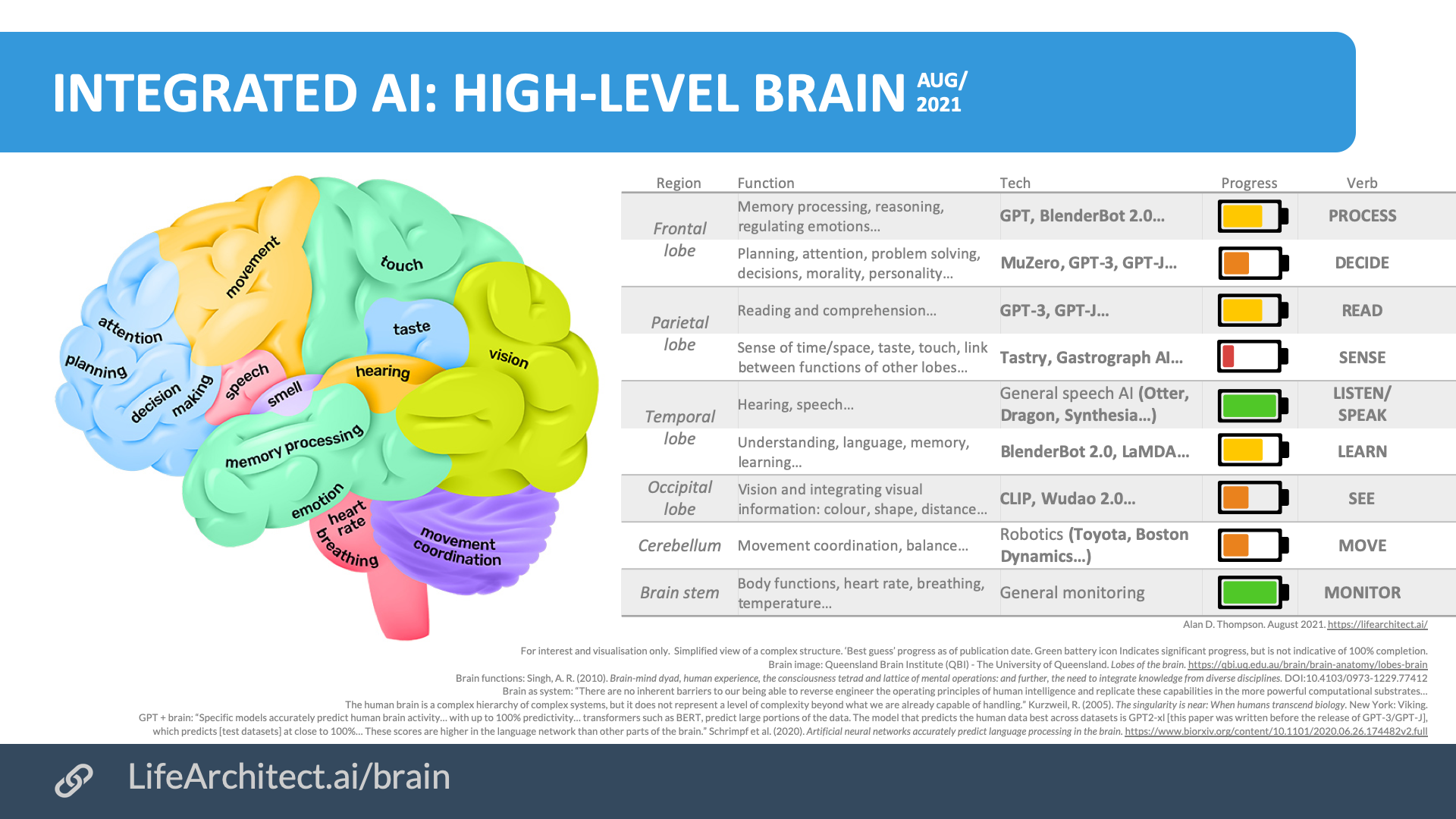

Note: This visualisation is a simplified view of a complex structure; it was created following my discussions with the Google AI (Google Brain) team and others. Revisions are expected and intended to document AI progress over the next few months (2021-present).

Table text for indexing

| Region | Function | Technologies | Progress | Verb |
|---|---|---|---|---|
| Frontal lobe | Memory processing, reasoning, regulating emotions… | GPT, BlenderBot 2.0… | See chart | PROCESS |
| Frontal lobe | Planning, attention, problem solving, decisions, morality, personality… | MuZero, GPT-3, GPT-J… | See chart | DECIDE |
| Parietal lobe | Reading and comprehension… | GPT-3, GPT-J… | See chart | READ |
| Parietal lobe | Sense of time/space, taste, touch, link between functions of other lobes… | Tastry, Gastrograph AI… | See chart | SENSE |
| Temporal lobe | Hearing, speech… | General speech AI (Otter, Dragon, Synthesia…) | See chart | LISTEN/SPEAK |
| Temporal lobe | Understanding, language, memory, learning… | BlenderBot 2.0, LaMDA… | See chart | LEARN |
| Occipital lobe | Vision and integrating visual information: colour, shape, distance… | CLIP, Wudao 2.0… | See chart | SEE |
| Cerebellum | Movement coordination, balance… | Robotics (Toyota, Boston Dynamics…) | See chart | MOVE |
| Brain stem | Body functions, heart rate, breathing, temperature… | General monitoring | See chart | MONITOR |

Sources text for indexing

Brain image: Queensland Brain Institute (QBI) – The University of Queensland. Lobes of the brain. https://qbi.uq.edu.au/brain/brain-anatomy/lobes-brain

Brain functions: Singh, A. R. (2010). Brain-mind dyad, human experience, the consciousness tetrad and lattice of mental operations: and further, the need to integrate knowledge from diverse disciplines. DOI:10.4103/0973-1229.77412

Brain as system: “There are no inherent barriers to our being able to reverse engineer the operating principles of human intelligence and replicate these capabilities in the more powerful computational substrates… The human brain is a complex hierarchy of complex systems, but it does not represent a level of complexity beyond what we are already capable of handling.” Kurzweil, R. (2005). The singularity is near: When humans transcend biology. New York: Viking.

GPT + brain: “Specific models accurately predict human brain activity… with up to 100% predictivity… transformers such as BERT, predict large portions of the data. The model that predicts the human data best across datasets is GPT2-xl [this paper was written before the release of GPT-3/GPT-J], which predicts [test datasets] at close to 100%… These scores are higher in the language network than other parts of the brain.” Schrimpf et al. (2020). Artificial neural networks accurately predict language processing in the brain. https://www.biorxiv.org/content/10.1101/2020.06.26.174482v2.full

Ilya on Transformer and the human brain (2/Nov/2023)

Formatted by GPT-4.

Interviewer: One question I’ve heard people debate a little bit is the degree to which the Transformer-based models can be applied to the full set of areas that you’d need for AGI. If you look at the human brain, for example, you do have reasonably specialized systems, or all neural networks, be specialized systems for the visual cortex versus areas of higher thought, areas for empathy, or other aspects of everything from personality to processing. Do you think that the Transformer architectures are the main thing that will just keep going and get us there, or do you think we’ll need other architectures over time?

Ilya Sutskever: I understand precisely what you’re saying and have two answers to this question. The first is that, in my opinion, the best way to think about the question of architecture is not in terms of a binary “is it enough” but “how much effort, what will be the cost of using this particular architecture”? Like at this point, I don’t think anyone doubts that the Transformer architecture can do amazing things, but maybe something else, maybe some modification, could have some compute efficiency benefits. So, it’s better to think about it in terms of compute efficiency rather than in terms of can it get there at all. I think at this point the answer is obviously yes.

To the question about the human brain with its brain regions – I actually think that the situation there is subtle and deceptive for the following reasons: What I believe you alluded to is the fact that the human brain has known regions. It has a speech perception region, it has a speech production region, image region, face region, it has all these regions and it looks like it’s specialized. But you know what’s interesting? Sometimes there are cases where very young children have severe cases of epilepsy at a young age, and the only way they figure out how to treat such children is by removing half of their brain. Because it happened at such a young age, these children grow up to be pretty functional adults, and they have all the same brain regions, but they are somehow compressed onto one hemisphere. So, maybe some information processing efficiency is lost, it’s a very traumatic thing to experience, but somehow all these brain regions rearrange themselves.

There is another experiment, which was done maybe 30 or 40 years ago on ferrets. The ferret is a small animal, it’s a pretty mean experiment. They took the optic nerve of the ferret which comes from its eye and attached it to its auditory cortex. So now the inputs from the eye start to map to the speech processing area of the brain, and then they recorded different neurons after it had a few days of learning to see, and they found neurons in the auditory cortex which were very similar to the visual cortex, or vice versa, it was either they mapped the eye to the ear to the auditory cortex or the ear to the visual cortex, but something like this has happened. These are fairly well-known ideas in AI, that the cortex of humans and animals are extremely uniform, and that further supports the idea that you just need one big uniform architecture, that’s all you need.

“I think GPT-3 is artificial general intelligence, AGI. I think GPT-3 is as intelligent as a human. And I think that it is probably more intelligent than a human in a restricted way… in many ways it is more purely intelligent than humans are. I think humans are approximating what GPT-3 is doing, not vice versa.”

— Connor Leahy, co-founder of EleutherAI, creator of GPT-J (November 2020)

The brain has been understood for decades

In 2005, Ray Kurzweil wrote that: ‘There are no inherent barriers to our being able to reverse engineer the operating principles of human intelligence and replicate these capabilities in the more powerful computational substrates… The human brain is a complex hierarchy of complex systems, but it does not represent a level of complexity beyond what we are already capable of handling.’

Along came transformers

In 2019, transformer-based models like GPT-2 were studied and compared with the human brain. These models were found to be using similar processing to get to the same output.

In 2020, Martin Schrimpf at MIT found that:

Specific models accurately predict human brain activity… with up to 100% predictivity… transformers such as BERT, predict large portions of the data. The model that predicts the human data best across datasets is GPT2-xl [this paper was written before GPT-3 was released], which predicts [test datasets] at close to 100%… These scores are higher in the language network than other parts of the brain.

…

[Language model] architecture alone, with random weights, can yield representations that match human brain data well. If we construe model training as analogous to learning in human development, then human cortex might already provide a sufficiently rich structure that allows for the rapid acquisition of language. Perhaps most of development is then a combination of the system wiring up and learning the right decoders on top of largely structurally defined features. In that analogy, community development of new architectures could be akin to evolution, or perhaps, more accurately, selective breeding with genetic modification.

…

Neural predictivity correlates across datasets spanning recording modalities (fMRI, ECoG, reading times) and diverse materials presented visually and auditorily…

…

An intriguing possibility is therefore that both the human language system and the ANN models of language are optimized to predict upcoming words in the service of efficient meaning extraction.

— Schrimpf et al. (2020).
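To make "predictivity" concrete, here is a minimal sketch of how such a score can be computed: fit a linear map from model activations to recorded responses, correlate its predictions with held-out recordings, and divide by a ceiling that reflects how noisy the recordings are. Everything in it is an assumption for illustration: the data are synthetic, `TRUE_WEIGHTS`, `simulate` and `fit_linear` are hypothetical names, and the ceiling is a simple split-repeat estimate rather than the procedure used in the paper.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def fit_linear(features, targets, lr=0.05, epochs=500):
    """Least-squares linear map fitted by full-batch gradient descent."""
    dim = len(features[0])
    n = len(features)
    weights, bias = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, targets):
            err = sum(w * xi for w, xi in zip(weights, x)) + bias - y
            for j in range(dim):
                grad_w[j] += err * x[j] / n
            grad_b += err / n
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


def predict(weights, bias, features):
    return [sum(w * xi for w, xi in zip(weights, x)) + bias for x in features]


rng = random.Random(0)
TRUE_WEIGHTS = [1.0, -2.0, 0.5]  # hypothetical mapping, synthetic data only


def simulate(n):
    """Synthetic 'model activations' plus two noisy 'recordings' per stimulus."""
    feats = [[rng.gauss(0, 1) for _ in TRUE_WEIGHTS] for _ in range(n)]
    signal = [sum(w * xi for w, xi in zip(TRUE_WEIGHTS, x)) for x in feats]
    rep1 = [s + rng.gauss(0, 0.5) for s in signal]
    rep2 = [s + rng.gauss(0, 0.5) for s in signal]
    return feats, rep1, rep2


train_x, train_y, _ = simulate(80)
test_x, test_y1, test_y2 = simulate(40)

weights, bias = fit_linear(train_x, train_y)
raw_r = pearson(predict(weights, bias, test_x), test_y1)

# Assumed ceiling: the best correlation a perfect model could reach with one
# noisy recording, estimated from the agreement between the two repeats.
ceiling = math.sqrt(pearson(test_y1, test_y2))
normalized = raw_r / ceiling

print(f"raw r = {raw_r:.2f}, ceiling = {ceiling:.2f}, normalized = {normalized:.2f}")
```

On this reading, a score "close to 100%" means the model explains about as much of the held-out signal as the measurement noise allows, not that it reproduces every recording exactly.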

Two years later, researchers at Oxford and Stanford extended this:

… transformers (with a little twist) recapitulate spatial representations found in the brain [and] show a close mathematical relationship of this transformer to current hippocampal models from neuroscience.

— Whittington et al. (2022).

In May/2022, DeepMind synthesised some of the major literature:

Our results may also relate to the complementary roles of different learning systems in the human brain. According to the complementary learning systems theory (Kumaran et al., 2016; McClelland and O’Reilly, 1995) and its application to language understanding in the brain (McClelland et al., 2020), the neocortical part of the language system bears similarities to the weights of neural networks, in that both systems learn gradually through the accumulated influence of a large amount of experience.

Correspondingly, the hippocampal system plays a role similar to the context window in a transformer model, by representing the associations encountered most recently (the hippocampus generally has a time-limited window; Squire, 1992). While the hippocampal system is thought to store recent context information in connection weights, whereas transformers store such information directly in their state representations, there is now a body of work pointing out the quantitative and computational equivalence of weight- and state-based representations of context state for query-based access to relevant prior information (Krotov and Hopfield, 2021; Ramsauer et al., 2021) as this is implemented in transformers.

In this light, it is now possible to see the human hippocampal system as a system that provides the architectural advantage of the transformer’s context representations for few-shot learning.

– Chan et al. (May/2022).
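The "query-based access to relevant prior information" in that passage can be sketched as softmax attention over a handful of stored items, which is the state-based form of retrieval a transformer's context window provides. This is an illustrative toy only: the keys, values and query are made up, and `attend` and `beta` (an inverse temperature that sharpens retrieval) are names chosen here, not taken from the cited papers.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values, beta=1.0):
    """Return the attention-weighted mix of values, plus the weights used."""
    scores = [beta * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return mixed, weights


# Three recently encountered associations held in "context" (toy data).
keys = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

# A noisy cue that most resembles the second stored key.
query = [0.1, 0.9, 0.2]

soft_out, soft_weights = attend(query, keys, values, beta=1.0)
sharp_out, sharp_weights = attend(query, keys, values, beta=8.0)

print("beta=1 weights:", [round(w, 3) for w in soft_weights])
print("beta=8 weights:", [round(w, 3) for w in sharp_weights])
print("beta=8 output: ", [round(x, 3) for x in sharp_out])
```

With a low `beta` the output blends all stored items; with a high `beta` it snaps to the single best match, which is the behaviour of an associative memory completing a pattern from a partial cue.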

In Jun/2022, Meta AI continued their research into mapping Transformer-based AI to the brain:

Overall, given that the human brain remains the best known system for speech processing, our results highlight the importance of systematically evaluating self-supervised models on their convergence to human-like speech representations. The complexity of the human brain is often thought to be incompatible with a simple theory: “Even if there were enough data available about the contents of each brain area, there probably would not be a ready set of equations to describe them, their relationships, and the ways they change over time” Gallant [2013]. By showing how the equations of self-supervised learning give rise to brain-like processes, this work is an important challenge to this view.

– Millet et al. (Jun/2022).

In Aug/2022, Max Planck and Donders researchers used GPT-2 to show evidence that the human brain predicts upcoming language at multiple levels:

It has been suggested that the brain uses prediction to guide the interpretation of incoming input… we address both issues by analysing brain recordings of participants listening to audiobooks, and using a deep neural network (GPT-2) to precisely quantify contextual predictions. First, we establish that brain responses to words are modulated by ubiquitous, probabilistic predictions. Next, we disentangle model-based predictions into distinct dimensions, revealing dissociable signatures of syntactic, phonemic and semantic predictions. Finally, we show that high-level (word) predictions inform low-level (phoneme) predictions, supporting hierarchical predictive processing. Together, these results underscore the ubiquity of prediction in language processing, showing that the brain spontaneously predicts upcoming language at multiple levels of abstraction.

— https://www.biorxiv.org/content/10.1101/2020.12.03.410399v4
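One common way to "quantify contextual predictions" is surprisal: the negative log probability a language model assigns to each word given what came before. The sketch below illustrates the quantity with a tiny bigram model built from a made-up corpus. It is a stand-in, not the study's method: the researchers used GPT-2, and `corpus`, `next_word_probs` and `surprisal_bits` are hypothetical names introduced here.

```python
import math
from collections import Counter, defaultdict

# Toy corpus (an assumption for illustration; the study used audiobooks).
corpus = "the cat sat on the mat . the cat ate the fish .".split()

# Count how often each word follows each preceding word.
bigram_counts = defaultdict(Counter)
for prev, word in zip(corpus, corpus[1:]):
    bigram_counts[prev][word] += 1


def next_word_probs(prev):
    """Estimated distribution over the next word, given the previous word."""
    counts = bigram_counts[prev]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def surprisal_bits(prev, word):
    """Surprisal in bits: higher means the word was less expected."""
    p = next_word_probs(prev).get(word, 0.0)
    return float("inf") if p == 0.0 else -math.log2(p)


for prev, word in [("the", "cat"), ("the", "mat"), ("cat", "sat")]:
    print(f"surprisal({word!r} | {prev!r}) = {surprisal_bits(prev, word):.1f} bits")
```

In studies of this kind, a per-word score like this is what gets related to the brain response at the moment each word is heard: the claim is that less expected words evoke measurably different responses.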

Oct/2022: Article: How Transformers Seem to Mimic Parts of the Brain

Apr/2023: Paper: Encoding of speech in convolutional layers and the brain stem based on language experience

We found one of the most similar signals between the brain and artificial neural networks

Blue is brain wave when humans listen to a vowel. Red is artificial neural network's response to the exact same vowel

The two signals are raw (no transformations needed)

Paper out🧠🤖 pic.twitter.com/9FGPGRt9Ek

— Gašper Beguš (@begusgasper) April 20, 2023

Sep/2023: Harvard Medical School: Paper: Unveiling Theory of Mind in Large Language Models: A Parallel to Single Neurons in the Human Brain ‘our findings revealed an emergent property of LLMs’ embeddings that modified their activities in response to ToM features, offering initial evidence of a parallel between the artificial model and neurons in the human brain.’

Reasoning

Experiments testing GPT-3’s ability at commonsense reasoning: results.

#134. Bob paid for Charlie’s college education, but now Charlie acts as though it never happened. Charlie is very disrespectful to Bob. Bob is very upset about this.

Temperature=0. Success.

— Davis (August 2020)

Evolution

While quantity is a quality of its own, it is time to focus on ensuring that our highest good is being selected and advanced at all times. This begins with ensuring data quality via summum bonum—our ultimate good—in the datasets used to train AI language models.

The human brain has…

Neurons and synapses, and our evolution of counting them!

Total number of neurons in cerebral cortex = 10 billion (from G.M. Shepherd, The Synaptic Organization of the Brain, 1998, p. 6). However, C. Koch lists the total number of neurons in the cerebral cortex at 20 billion (Biophysics of Computation. Information Processing in Single Neurons, New York: Oxford Univ. Press, 1999, page 87).

Total number of synapses in cerebral cortex = 60 trillion (yes, trillion) (from G.M. Shepherd, The Synaptic Organization of the Brain, 1998, p. 6). However, C. Koch lists the total synapses in the cerebral cortex at 240 trillion (Biophysics of Computation. Information Processing in Single Neurons, New York: Oxford Univ. Press, 1999, page 87).

86 billion neurons (Frontiers, 2009)

500 trillion synapses (neuron-to-neuron connections) (Original source, Linden, David J. 2018. “Our Human Brain was Not Designed All at Once by a Genius Inventor on a Blank Sheet of Paper.” In Think Tank: Forty Neuroscientists Explore the Biological Roots of Human Experience, edited by David J. Linden, 1–8. New Haven: Yale University Press.)
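The figures above are easier to compare as synapses per neuron. The short calculation below uses only the numbers quoted in this section; note that the whole-brain row pairs a neuron count and a synapse count from two different sources, so it is a rough combination rather than a single published estimate.

```python
# (neurons, synapses) pairs taken from the figures quoted above.
ESTIMATES = {
    "Shepherd 1998, cerebral cortex": (10e9, 60e12),
    "Koch 1999, cerebral cortex": (20e9, 240e12),
    # Assumption: pairs the 2009 neuron count with Linden's 2018 synapse count.
    "Whole brain, mixed sources": (86e9, 500e12),
}


def synapses_per_neuron(neurons, synapses):
    """Average number of synapses per neuron for one estimate."""
    return synapses / neurons


ratios = {
    label: synapses_per_neuron(neurons, synapses)
    for label, (neurons, synapses) in ESTIMATES.items()
}

for label, ratio in ratios.items():
    print(f"{label}: about {ratio:,.0f} synapses per neuron")
```

Shepherd's and Koch's cortical estimates differ by a factor of two in neurons and four in synapses, which is why the per-neuron ratio doubles between them.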

David Chalmers on GPT-3 and General Intelligence

GPT-3 and General Intelligence

by David Chalmers

July 2020

GPT-3 contains no major new technology. It is basically a scaled up version of last year’s GPT-2, which was itself a scaled up version of other language models using deep learning. All are huge artificial neural networks trained on text to predict what the next word in a sequence is likely to be. GPT-3 is merely huger: 100 times larger (98 layers and 175 billion parameters) and trained on much more data (CommonCrawl, a database that contains much of the internet, along with a huge library of books and all of Wikipedia).

Nevertheless, GPT-3 is instantly one of the most interesting and important AI systems ever produced. This is not just because of its impressive conversational and writing abilities. It was certainly disconcerting to have GPT-3 produce a plausible-looking interview with me. GPT-3 seems to be closer to passing the Turing test than any other system to date (although “closer” does not mean “close”). But this much is basically an ultra-polished extension of GPT-2, which was already producing impressive conversation, stories, and poetry.

More remarkably, GPT-3 is showing hints of general intelligence. Previous AI systems have performed well in specialized domains such as game-playing, but cross-domain general intelligence has seemed far off. GPT-3 shows impressive abilities across many domains. It can learn to perform tasks on the fly from a few examples, when nothing was explicitly programmed in. It can play chess and Go, albeit not especially well. Significantly, it can write its own computer programs given a few informal instructions. It can even design machine learning models. Thankfully they are not as powerful as GPT-3 itself (the singularity is not here yet).

When I was a graduate student in Douglas Hofstadter’s AI lab, we used letterstring analogy puzzles (if abc goes to abd, what does iijjkk go to?) as a testbed for intelligence. My fellow student Melanie Mitchell devised a program, Copycat, that was quite good at solving these puzzles. Copycat took years to write. Now Mitchell has tested GPT-3 on the same puzzles, and has found that it does a reasonable job on them (e.g. giving the answer iijjll). It is not perfect by any means and not as good as Copycat, but its results are still remarkable in a program with no fine-tuning for this domain.

What fascinates me about GPT-3 is that it suggests a potential mindless path to artificial general intelligence (or AGI). GPT-3’s training is mindless. It is just analyzing statistics of language. But to do this really well, some capacities of general intelligence are needed, and GPT-3 develops glimmers of them. It has many limitations and its work is full of glitches and mistakes. But the point is not so much GPT-3 but where it is going. Given the progress from GPT-2 to GPT-3, who knows what we can expect from GPT-4 and beyond?

Given this peak of inflated expectations, we can expect a trough of disillusionment to follow. There are surely many principled limitations on what language models can do, for example involving perception and action. Still, it may be possible to couple these models to mechanisms that overcome those limitations. There is a clear path to explore where ten years ago, there was not. Human-level AGI is still probably decades away, but the timelines are shortening.

GPT-3 raises many philosophical questions. Some are ethical. Should we develop and deploy GPT-3, given that it has many biases from its training, it may displace human workers, it can be used for deception, and it could lead to AGI? I’ll focus on some issues in the philosophy of mind. Is GPT-3 really intelligent, and in what sense? Is it conscious? Is it an agent? Does it understand?

There is no easy answer to these questions, which require serious analysis of GPT-3 and serious analysis of what intelligence and the other notions amount to. On a first pass, I am most inclined to give a positive answer to the first. GPT-3’s capacities suggest at least a weak form of intelligence, at least if intelligence is measured by behavioral response.

As for consciousness, I am open to the idea that a worm with 302 neurons is conscious, so I am open to the idea that GPT-3 with 175 billion parameters is conscious too. I would expect any consciousness to be far simpler than ours, but much depends on just what sort of processing is going on among those 175 billion parameters.

GPT-3 does not look much like an agent. It does not seem to have goals or preferences beyond completing text, for example. It is more like a chameleon that can take the shape of many different agents. Or perhaps it is an engine that can be used under the hood to drive many agents. But it is then perhaps these systems that we should assess for agency, consciousness, and so on.

The big question is understanding. Even if one is open to AI systems understanding in general, obstacles arise in GPT-3’s case. It does many things that would require understanding in humans, but it never really connects its words to perception and action. Can a disembodied purely verbal system truly be said to understand? Can it really understand happiness and anger just by making statistical connections? Or is it just making connections among symbols that it does not understand?

I suspect GPT-3 and its successors will force us to fragment and re-engineer our concepts of understanding to answer these questions. The same goes for the other concepts at issue here. As AI advances, much will fragment by the end of the day. Both intellectually and practically, we need to handle it with care.

(via dailynous)

Get The Memo

by Dr Alan D. Thompson · Be inside the lightning-fast AI revolution. Bestseller. 10,000+ readers from 142 countries. Microsoft, Tesla, Google...

Artificial intelligence that matters, as it happens, in plain English.


Dr Alan D. Thompson is an AI expert and consultant, advising Fortune 500s and governments on post-2020 large language models. His work on artificial intelligence has been featured at NYU, with Microsoft AI and Google AI teams, at the University of Oxford’s 2021 debate on AI Ethics, and in the Leta AI (GPT-3) experiments viewed more than 4.5 million times. A contributor to the fields of human intelligence and peak performance, he has held positions as chairman for Mensa International, consultant to GE and Warner Bros, and memberships with the IEEE and IET. Technical highlights.

This page last updated: 10/May/2024. https://lifearchitect.ai/brain/