Get The Memo.

Summary

| Organization | Amazon |
|---|---|
| Model name | Olympus |
| Internal/project name | – |
| Model type | LLM |
| Parameter count | 2T |
| Dataset size (tokens) | Alan expects: 40T (around 80TB). See also: Gemini |
| Training data end date | Alan expects: Oct/2023 |
| Training start date | Alan expects: Nov/2023 |
| Training end/convergence date | Alan expects: May/2024 |
| Training time (total) | See working, with sources. |
| Release date (public) | Alan expects: Aug/2024 |
| Paper | – |
| Playground | – |
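
The Summary figures can be cross-checked with some back-of-envelope arithmetic. The sketch below is a minimal illustration under stated assumptions, not Alan's own working: it treats "80TB" as decimal terabytes, takes the first day of each expected month as the training window, and uses the common 6·N·D approximation for dense-transformer training compute. It shows that 40T tokens for 2T parameters is 20 tokens per parameter (in line with the Chinchilla rule of thumb), and that 80TB for 40T tokens implies about 2 bytes of raw data per token.

```python
from datetime import date

# Figures from the Summary table (Alan's expectations, not confirmed by Amazon).
PARAMS = 2e12            # 2T parameters
TOKENS = 40e12           # 40T training tokens
DATASET_BYTES = 80e12    # "around 80TB", read as decimal terabytes (assumption)

# Assumed window: first day of the expected start and end months (assumption).
TRAIN_START = date(2023, 11, 1)
TRAIN_END = date(2024, 5, 1)


def tokens_per_param(tokens: float, params: float) -> float:
    """Ratio of training tokens to parameters (Chinchilla rule of thumb is ~20)."""
    return tokens / params


def bytes_per_token(dataset_bytes: float, tokens: float) -> float:
    """Average bytes of raw data per token implied by the two figures."""
    return dataset_bytes / tokens


def training_flops(params: float, tokens: float) -> float:
    """Approximate dense-transformer training compute, C ~= 6 * N * D."""
    return 6 * params * tokens


if __name__ == "__main__":
    ratio = tokens_per_param(TOKENS, PARAMS)
    bpt = bytes_per_token(DATASET_BYTES, TOKENS)
    flops = training_flops(PARAMS, TOKENS)
    days = (TRAIN_END - TRAIN_START).days
    sustained = flops / (days * 86_400)  # FLOP/s needed to finish inside the window
    print(f"tokens per parameter: {ratio:.0f}")        # 20
    print(f"bytes per token:      {bpt:.1f}")          # 2.0
    print(f"training compute:     {flops:.1e} FLOPs")  # 4.8e+26
    print(f"training window:      {days} days")        # 182
    print(f"sustained throughput: {sustained:.2e} FLOP/s")
```

By the same 6·N·D estimate, the 200B-parameter, 4T-token Amazon run described in the updates below comes to 4.8e24 FLOPs, so the expected Olympus run would be roughly 100 times larger in compute.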

Mythology

2nd gen: Titan: the Titans were second-generation deities who lived on Mount Othrys. The Titans were gigantic compared to the Olympians.

3rd gen: Olympus: the Olympians were third-generation gods who occupied Mount Olympus. The Olympians outnumbered the Titans, which led to the Olympians' victory. (source)

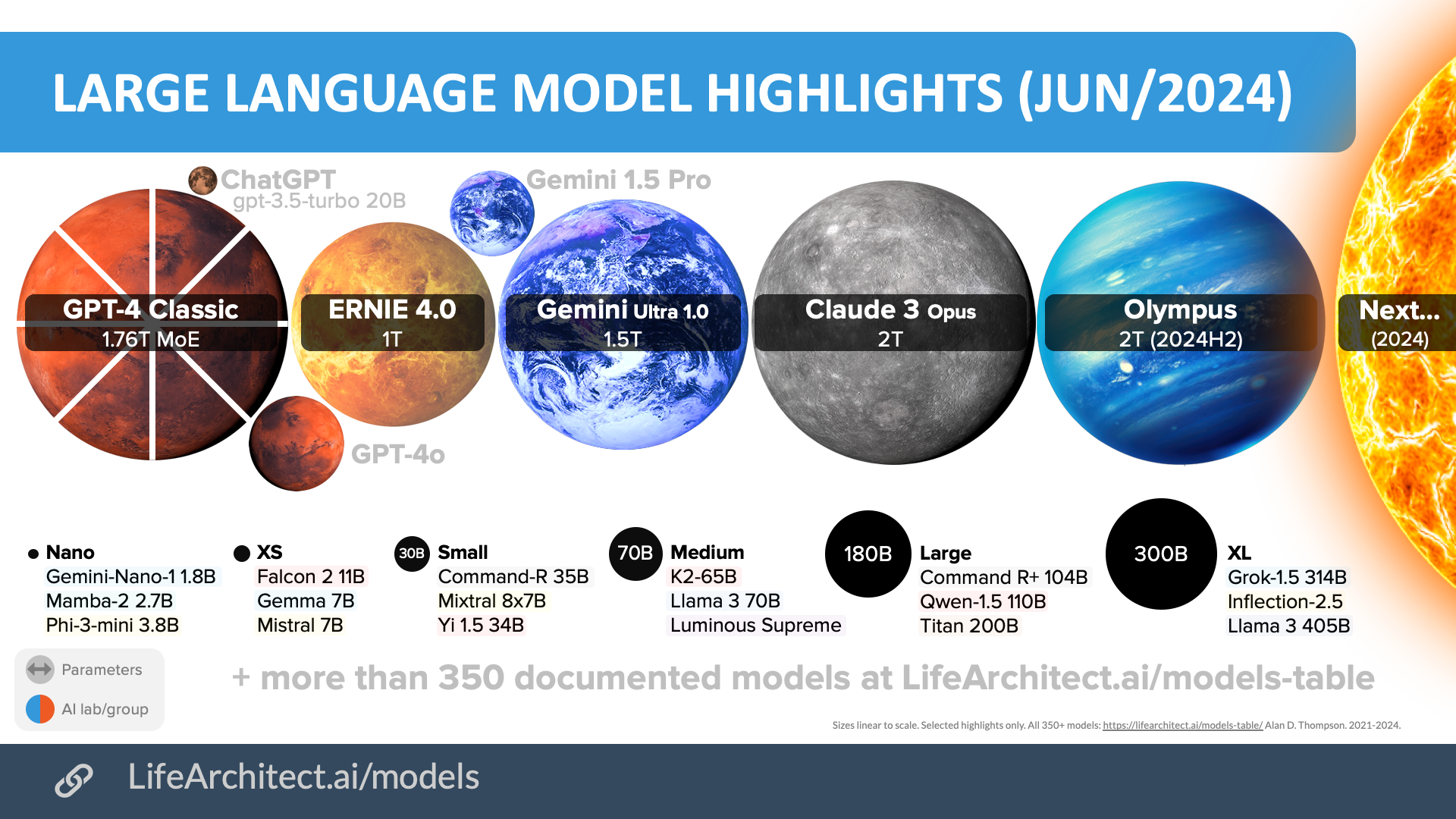

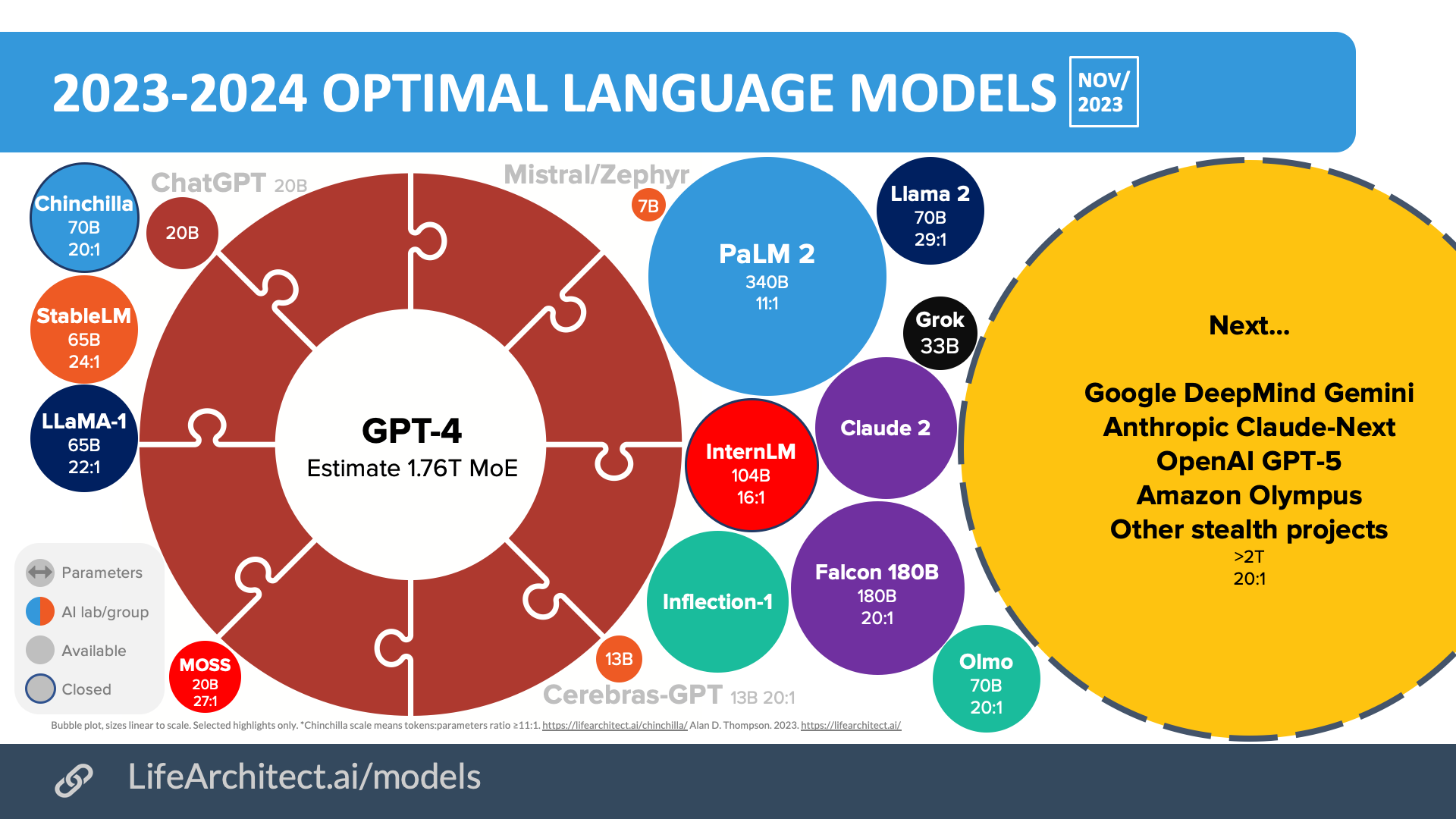

2024 optimal LLM highlights

Download source (PDF)

Permissions: Yes, you can use these visualizations anywhere. Please leave the citation intact.

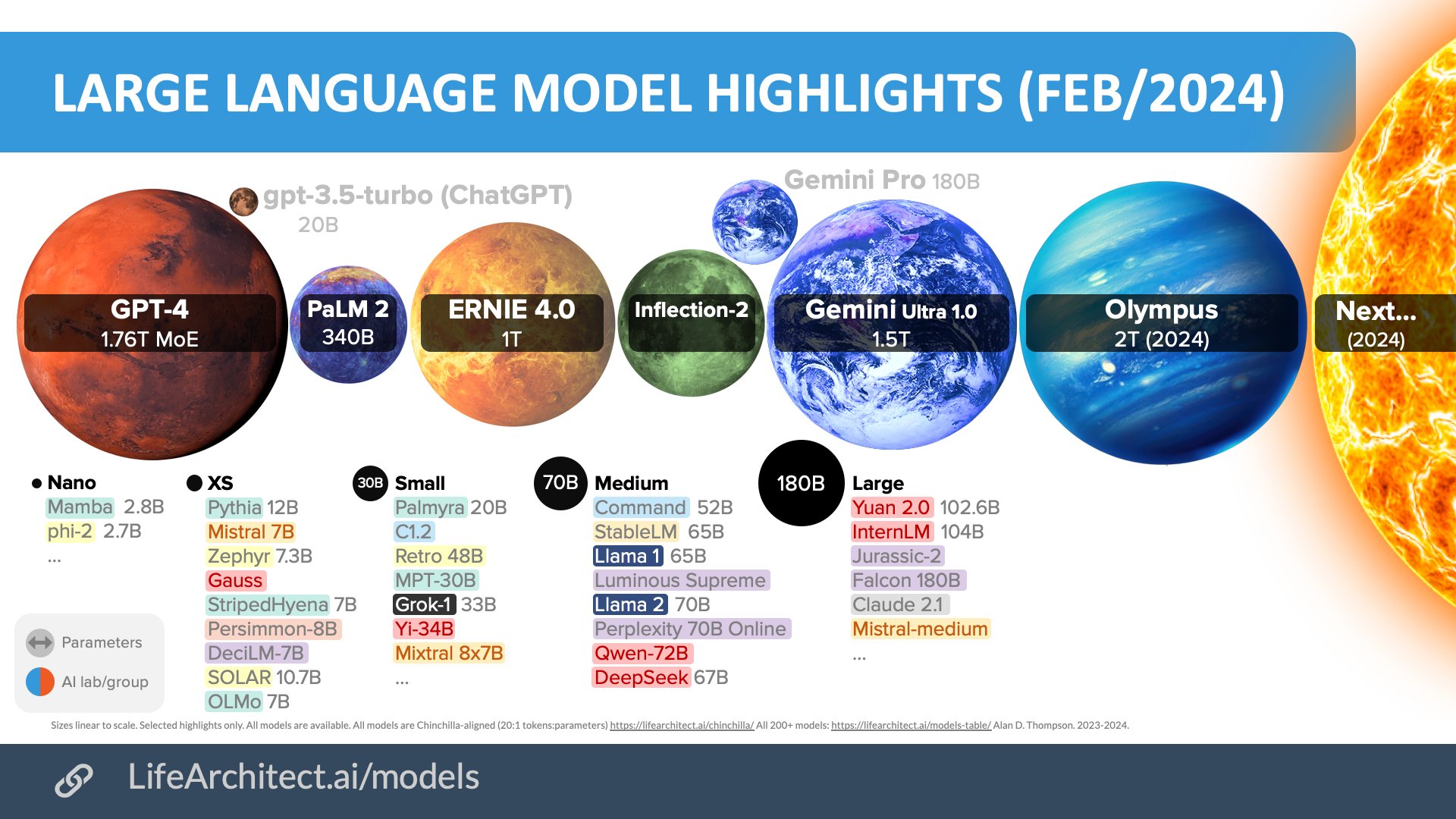

Older bubbles viz

Feb/2024

Nov/2023

Download source (PDF)

Permissions: Yes, you can use these visualizations anywhere. Please leave the citation intact.

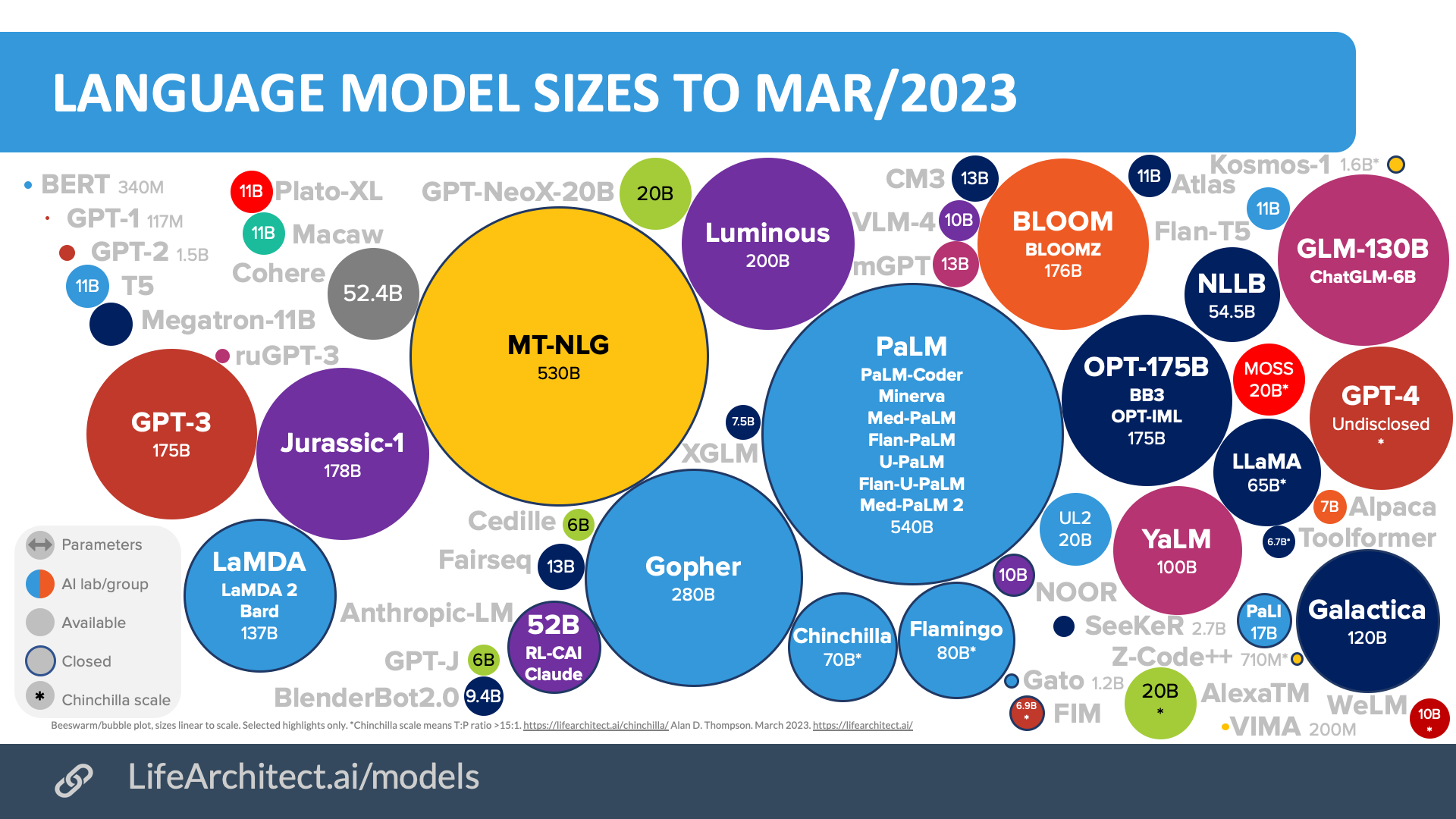

Mar/2023

Download source (PDF)

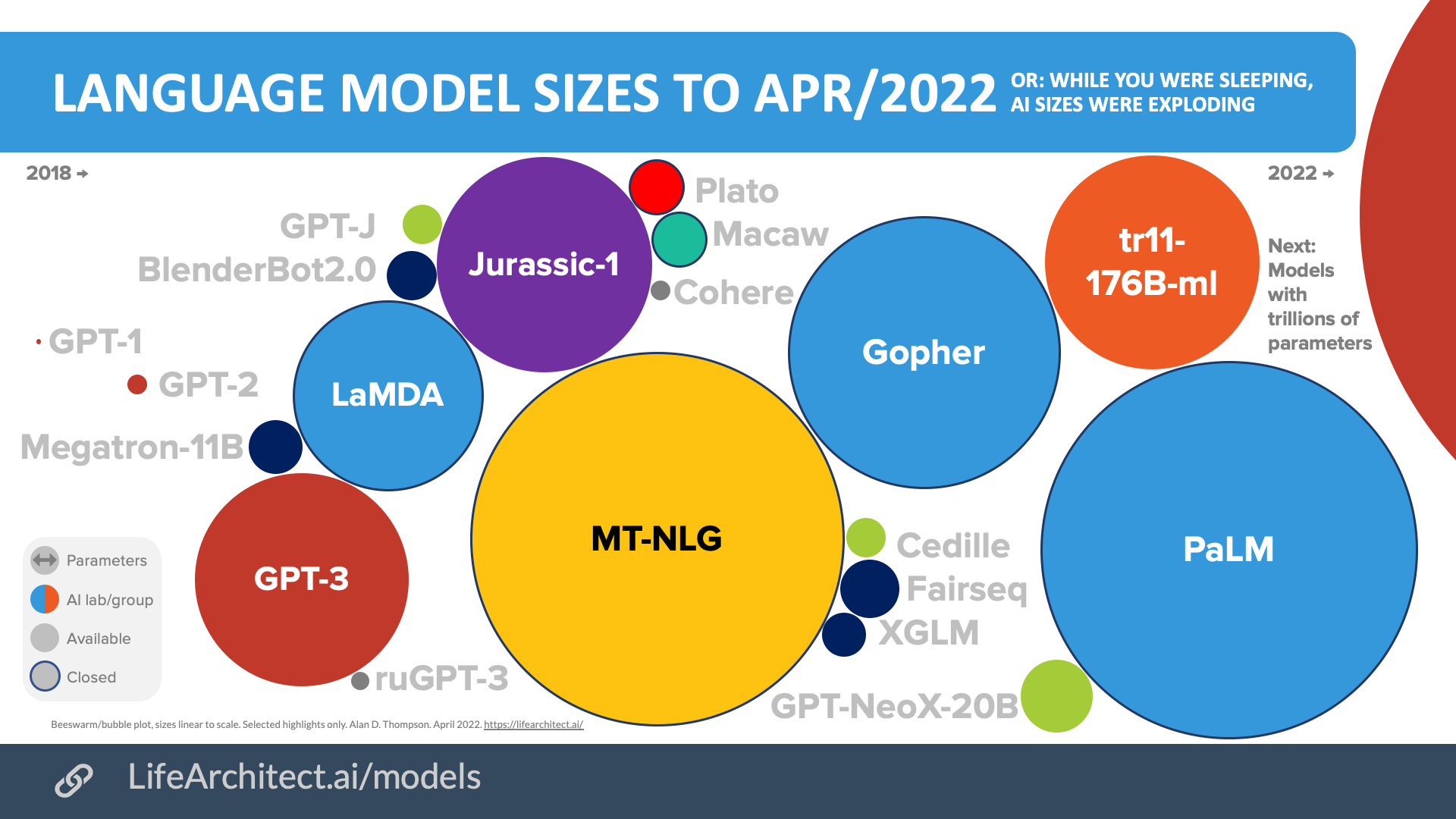

Apr/2022

Download source (PDF)

Models Table

Summary of current models: View the full data (Google sheets)

Olympus Updates

24/Jun/2024:

The [chatbot’s] secret internal project is code-named “Metis,” likely in reference to the Greek goddess of wisdom. The new service is designed to be accessed from a web browser, similar to how other AI assistants work… Metis is powered by an internal Amazon AI model called Olympus, another name inspired by Greek mythology. This is a more powerful version of the company’s publicly available Titan model, the people familiar said. At the most basic level, Metis gives text- and image-based answers in a smart, conversational manner, according to the internal document. It’s also able to share links to the source of its responses, suggest follow-up queries, and generate images. (24/Jun/2024, BI)

18/Mar/2024:

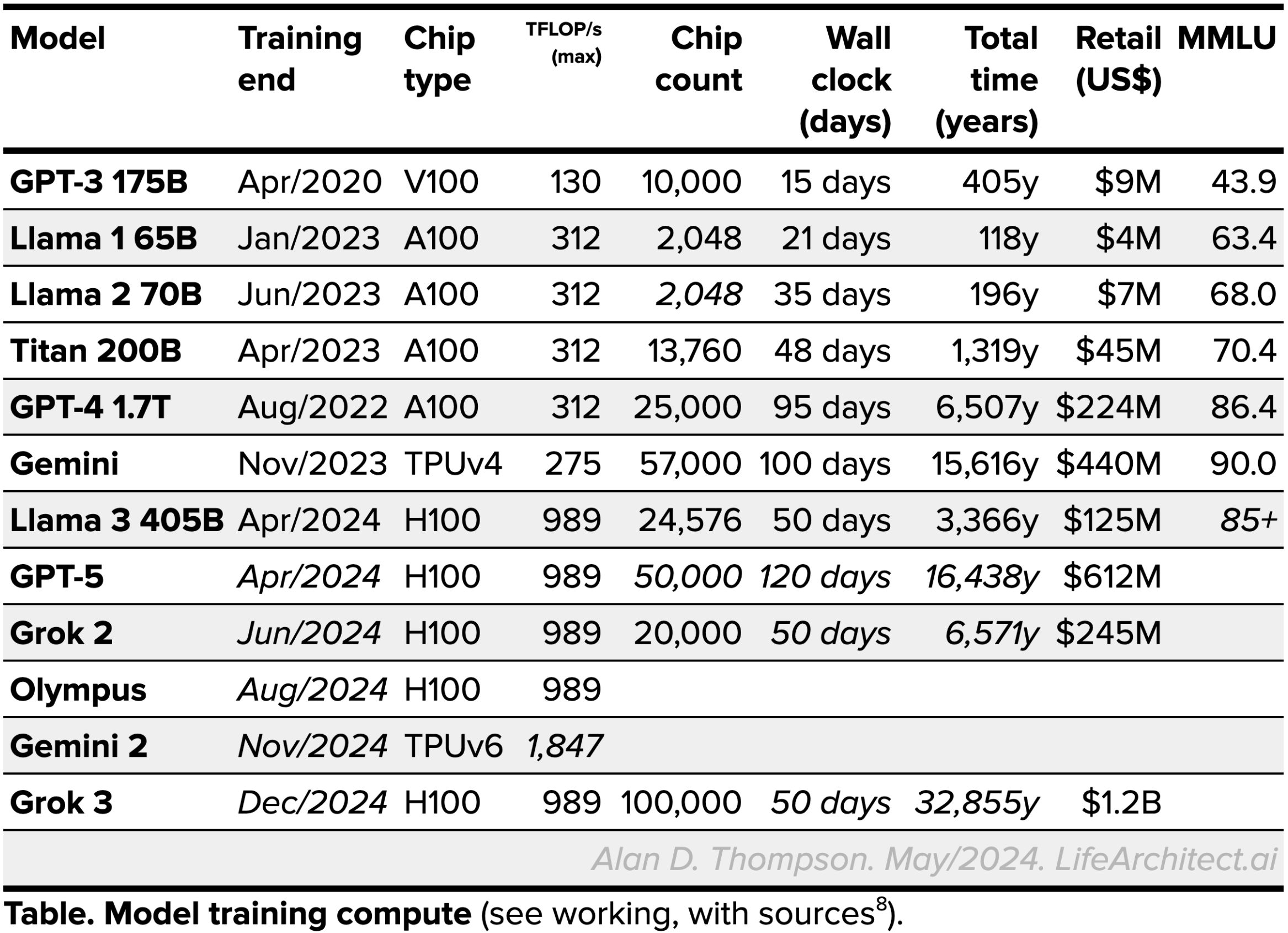

Amazon can see $1 billion training runs on the horizon:

…Technical talk from a longtime AWS person sheds light on frontier AI training…

James Hamilton, a distinguished engineer at Amazon, said at a talk this year that within the last year Amazon carried out a $65m training run. Specifically, they trained a 200B dense model on 4T tokens of data across 13,760 NVIDIA A100 chips (using 1,720 P4d nodes). It took 48 days to train. Hamilton described this training run as “1 gen old” so we can assume Amazon has moved on to larger runs since then. Looking ahead, Hamilton said “training runs soon to cross $1b”. (18/Mar/2024, via Jack Clark)
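
The figures in Hamilton's talk are internally consistent and support a few derived numbers. The sketch below assumes 8 A100 GPUs per P4d node (the p4d.24xlarge configuration), a dense BF16 peak of 312 TFLOP/s per A100, and the 6·N·D compute approximation; it also treats the $65m figure as covering GPU time only, which the source does not state. Under those assumptions it reproduces the 13,760-GPU count and gives an implied price of about $4.10 per A100-hour and a model FLOPs utilization (MFU) of roughly 27%.

```python
"""Back-of-envelope check of the 200B-parameter Amazon training run (figures via Jack Clark)."""

# Figures quoted from the talk.
NODES = 1_720            # P4d nodes
TRAIN_DAYS = 48
COST_USD = 65e6          # "$65m training run"
PARAMS = 200e9           # 200B dense model
TOKENS = 4e12            # 4T tokens

# Assumptions not stated in the source.
GPUS_PER_NODE = 8        # p4d.24xlarge carries 8 NVIDIA A100 GPUs
A100_PEAK_BF16 = 312e12  # dense BF16 Tensor Core peak per A100, FLOP/s


def gpu_count(nodes: int, gpus_per_node: int) -> int:
    """Total accelerators across all nodes."""
    return nodes * gpus_per_node


def gpu_hours(gpus: int, days: float) -> float:
    """Accelerator-hours consumed if every GPU ran for the whole period."""
    return gpus * days * 24


def model_flops_utilization(params: float, tokens: float, gpus: int,
                            days: float, peak_flops: float) -> float:
    """Useful training FLOPs (6 * N * D) divided by total peak FLOPs available."""
    useful = 6 * params * tokens
    available = gpus * peak_flops * days * 86_400
    return useful / available


if __name__ == "__main__":
    gpus = gpu_count(NODES, GPUS_PER_NODE)
    hours = gpu_hours(gpus, TRAIN_DAYS)
    mfu = model_flops_utilization(PARAMS, TOKENS, gpus, TRAIN_DAYS, A100_PEAK_BF16)
    print(f"GPUs:           {gpus:,}")                # 13,760
    print(f"GPU-hours:      {hours:,.0f}")            # 15,851,520
    print(f"$ per GPU-hour: {COST_USD / hours:.2f}")  # 4.10
    print(f"Estimated MFU:  {mfu:.0%}")               # 27%
```

An MFU in the high 20s is plausible for a large A100 cluster, which suggests the quoted node count, duration, and model size fit together; the dollar figure per GPU-hour depends entirely on what the $65m actually covers.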

1/Feb/2024: CEO Andy Jassy has said the company plans to incorporate generative AI across all of its businesses. (CNBC)

Dataset

Timeline to Olympus

| Date | Milestone |
|---|---|
| 28/Sep/2023 | Titan embeddings released. |
| 8/Nov/2023 | Olympus plans leaked. |
| Next… | Olympus… |

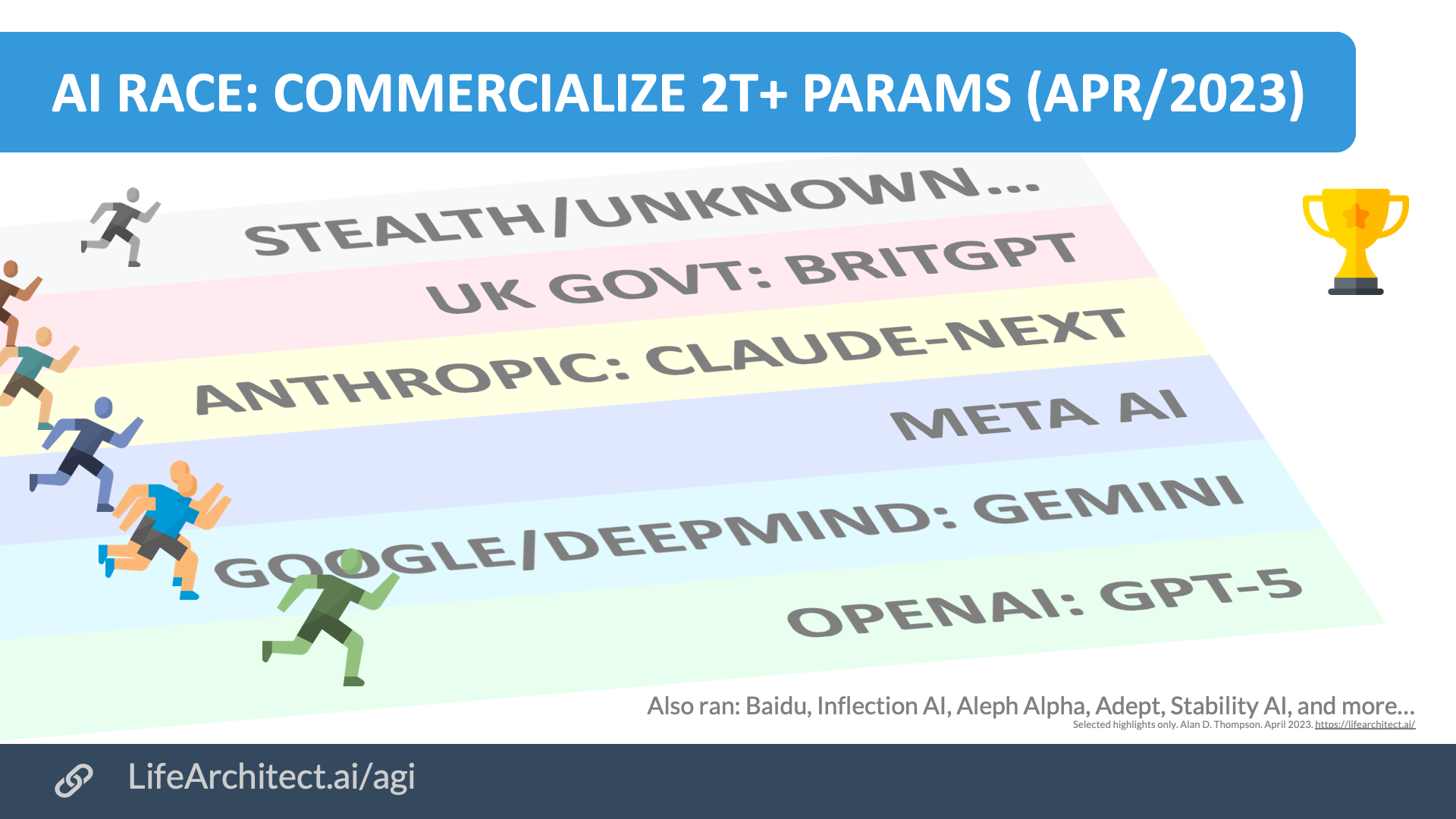

AI Race

Download source (PDF)

Permissions: Yes, you can use these visualizations anywhere. Please leave the citation intact.

AGI

Read more about Alan’s conservative countdown to AGI…

Get The Memo

by Dr Alan D. Thompson · Be inside the lightning-fast AI revolution. Bestseller. 10,000+ readers from 142 countries. Microsoft, Tesla, Google...

Artificial intelligence that matters, as it happens, in plain English.


Dr Alan D. Thompson is an AI expert and consultant, advising Fortune 500s and governments on post-2020 large language models. His work on artificial intelligence has been featured at NYU, with Microsoft AI and Google AI teams, at the University of Oxford’s 2021 debate on AI Ethics, and in the Leta AI (GPT-3) experiments viewed more than 4.5 million times. A contributor to the fields of human intelligence and peak performance, he has held positions as chairman for Mensa International, consultant to GE and Warner Bros, and memberships with the IEEE and IET. Technical highlights.

This page last updated: 26/Jun/2024. https://lifearchitect.ai/olympus/