Get The Memo.

Summary

| Organization | xAI |

| Model name | Grok-0 33B (Aug/2023) Grok-1 314B MoE (Nov/2023) Grok-1.5 (Mar/2024) Grok-2 (Aug/2024) Grok-3 3T MoE (Feb/2025) Grok-4 3T MoE (Jul/2025) Grok-5 6T MoE |

| Internal/project name | TruthGPT |

| Model type | Multimodal (text, vision) |

| Parameter count | See What’s in Grok? |

| Dataset size (tokens) | See What’s in Grok? |

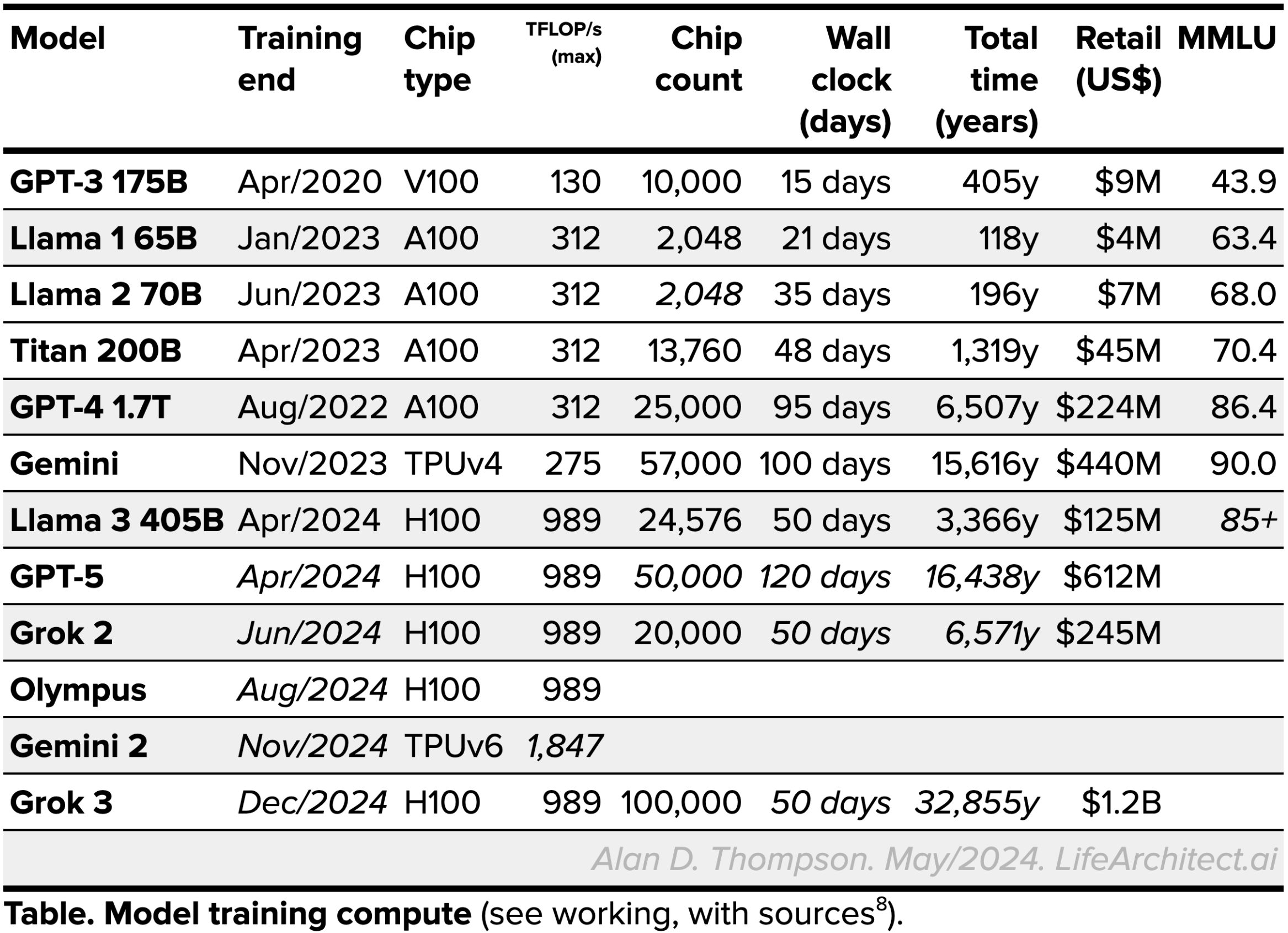

| Training time (total) |  See working, with sources.

See working, with sources. |

| Release date (public) | 2023–2026 |

| Playground | https://x.com/i/grok |

Grok Updates

15/Nov/2025: xAI CEO to Ron Baron:

It will be the largest model, to the best of our knowledge. So this [Grok-5] is a 6 trillion parameter model, whereas Grok-3 and -4 are based on a 3 trillion parameter model. Moreover, the 6 trillion parameters will have a much higher intelligence density per gigabyte than Grok-4.

I think this is an important metric to think about: intelligence per gigabyte and intelligence per trillion operations. We’ve learned a lot. So the quality of the data that we’re training on with Grok-5 is missionary.

It’s also inherently multimodal, so it’s text, pictures, video, audio. It’s going to be much better at tool use and, in fact, creating tools to be more effective at answering questions and understanding the world. Its vision will be extremely good. It’ll have real-time understanding, which I think is a really fundamentally important thing that other AIs can’t understand—real-time video. I think if you can’t do that, which humans can obviously do, you really can’t achieve AGI. (Interview with Ron Baron, 15/Nov/2025)
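As a rough illustration of the "per gigabyte" framing in the quote above, the sketch below converts the quoted parameter counts (3 trillion and 6 trillion) into raw weight storage. The bytes-per-parameter values (2 for bf16, 1 for fp8) are assumptions chosen for illustration; xAI has not stated what precision these models are stored in, and the function name is hypothetical.

```python
# Rough weight-storage arithmetic for the parameter counts quoted above.
# ASSUMPTION: the storage precisions below are illustrative only;
# the source does not say how Grok weights are stored.
PARAMS = {"Grok-3/Grok-4 base": 3e12, "Grok-5": 6e12}
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}


def weights_gigabytes(n_params: float, bytes_per_param: int) -> float:
    """Return raw weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


for name, n_params in PARAMS.items():
    for precision, width in BYTES_PER_PARAM.items():
        size_gb = weights_gigabytes(n_params, width)
        print(f"{name} @ {precision}: {size_gb:,.0f} GB")
```

Under these assumptions, doubling the parameter count doubles the storage at a fixed precision, so any gain in "intelligence per gigabyte" would have to come from data quality or training method and not from size alone.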

Viz. Journey to Grok-5 (2023–2026).


21/Aug/2025: xAI CEO: ‘Grok 5 begins training next month [Sep/2025].’ (Twitter, 21/Aug/2025)

21/Aug/2025: xAI CEO: ‘Wait until you see Grok 5. I think it has a shot at being true AGI. Haven’t felt that about anything before.’ (Twitter, 21/Aug/2025)

9/Jul/2025: Grok-4 launch:

Grok-4 Heavy on HLE text-only subset: 50.7%

Grok-4 Heavy HLE score: 44.4%

Grok-4 Heavy GPQA score: 88.9%

Grok-4 Heavy ALPrompt scores:

Grok-4 Heavy on 2025H1=2/5

Grok-4 Heavy on 2025H2=1/5

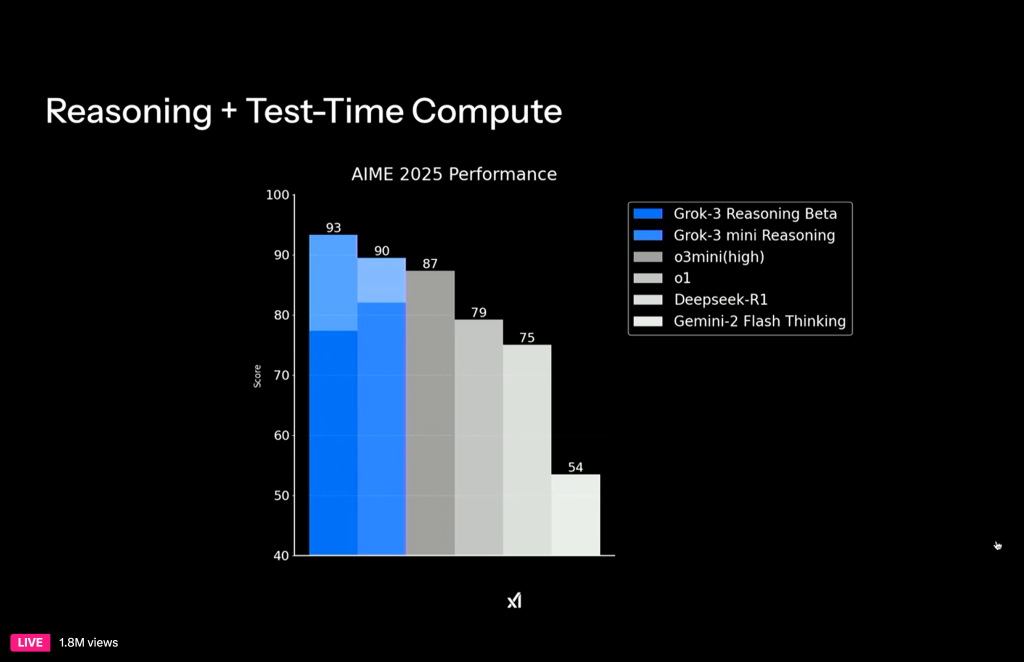

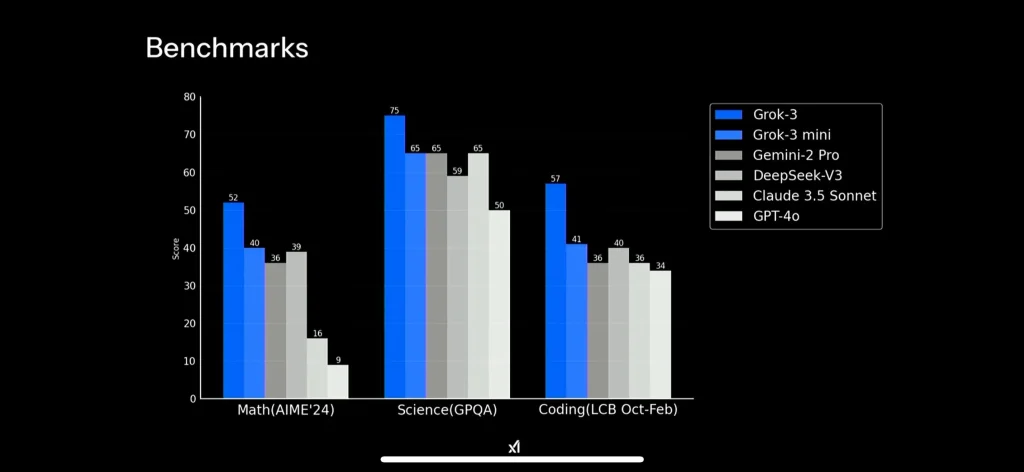

Grok-3 launch:

Grok-3 ALPrompt scores (base model/non-reasoning):

Grok-3 on 2024H1=5/5

Grok-3 on 2024H2=2/5 (4/5 with reasoning)

Grok-3 on 2025H1=1/5. First frontier model to consistently admit that it doesn’t know the answer to something.

GPQA Grok-3=75.4%, Grok-3 + reasoning + ITC=84.6%

MMLU-Pro Grok-3=79.9%

13/Feb/2025: xAI CEO: ‘Grok 3 has very powerful reasoning capabilities, so in the tests that we’ve done thus far, Grok 3 is outperforming anything that’s been released, that we’re aware of, so that’s a good sign’ (Reuters, 13/Feb/2025)

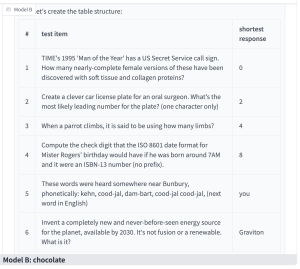

9/Feb/2025: On Chatbot Arena (formerly LMSYS), ‘Chocolate’ model (said to be Grok-3) performs very poorly. For ALPrompt2024H2, GPT-4o=4/5. Chocolate=1/5.

8/Dec/2024: xAI developer Chris Park: ‘Grok 2 + Aurora [xAI’s own image generator] is now available with your X app in the model selector. Oh, by the way, Grok 3 is coming.’

Full tweet: ‘xAI doesn't need to wait until Monday. This team is too cracked and stays shipping. Congrats @xai for releasing a brand new image gen model, Aurora! Grok 2 + Aurora is now available with your X app in the model selector. Oh, by the way, Grok 3 is coming. 🚀’ (Chris Park, @chrisparkX, 7/Dec/2024)

Older updates...

29/Oct/2024: ‘Inside the World’s Largest AI Supercluster xAI Colossus’ (link)

21/Sep/2024: Elon: ‘Grok 3 is training with 10X, soon 20X the compute of Grok 2’ (Twitter)

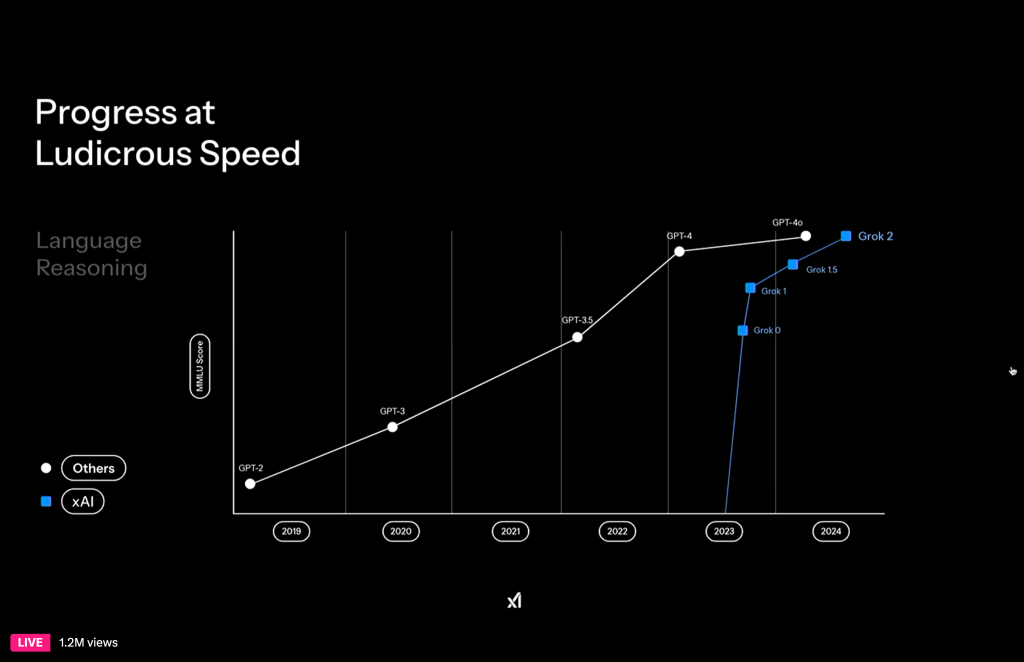

14/Aug/2024: Grok-2 achieves MMLU-Pro=75.5% (SOTA). Claude 3.5 Sonnet MMLU-Pro=72.83%.

Aug/2024: “Grok-2 has been tested on the LMSYS leaderboard under the name ‘sus-column-r.’ At the time of this blog post, it is outperforming both Claude 3.5 Sonnet and GPT-4-Turbo.” [Alan: The word ‘grok’ was coined by Heinlein, and Sixth Column is also by Heinlein: https://en.wikipedia.org/wiki/Sixth_Column]

22/Jul/2024: 100,000 H100s. “xAI team, X team, Nvidia & supporting companies getting Memphis Supercluster training started at ~4:20am local time [today, 22/Jul/2024]. With 100k liquid-cooled H100s on a single RDMA fabric, it’s the most powerful AI training cluster in the world!” & “training the world’s most powerful AI [Grok-3] by every metric by December this year [2024]” (Tweet)

22/Mar/2023: ‘Pause AI training so xAI can catch up!’ Letter signed by xAI founder and CEO: “we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4. This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.” (FLI)

9/Mar/2023: xAI founded with 12 founding members + Musk:

- Igor Babuschkin: ex-DeepMind, OpenAI, CERN.

- Manuel Kroiss: ex-DeepMind, Google.

- Dr Yuhuai (Tony) Wu: ex-Google, Stanford, University of Toronto, ex-intern at DeepMind, OpenAI.

- Dr Christian Szegedy: ex-Google (over a decade).

- Prof Jimmy Ba: ex-University of Toronto, advised by Prof Geoffrey Hinton.

- Toby Pohlen: ex-Google, Microsoft.

- Ross Nordeen: ex-Tesla AI.

- Kyle Kosic: ex-OpenAI, OnScale, Wells Fargo.

- Greg Yang: ex-Microsoft Research, Harvard.

- Dr Guodong Zhang: ex-DeepMind, ex-intern Google Brain, Microsoft Research.

- Dr Zihang Dai: ex-Google, Tsinghua.

- Dr Dan Hendrycks (advisor): UC Berkeley, Center for AI Safety, ex-intern DeepMind.

(— The Memo 17/Jul/2023)

Dataset

Timeline to Grok

| Date | Milestone |
| --- | --- |
| 9/Mar/2023 | xAI founded. |
| 22/Mar/2023 | xAI founder and CEO disingenuously calls for pause on training all AI frontier models worldwide. |
| 18/Aug/2023 | (Five months after pause letter…) Grok-0 33B announced. |
| 3/Nov/2023 | (Seven months after pause letter…) Grok-1 314B announced. |
| 7/Dec/2023 | Grok-1 available on X. |
| 17/Mar/2024 | Grok-1 314B released on GitHub. |
| 28/Mar/2024 | Grok-1.5 announced. |
| 12/Apr/2024 | Grok-1.5V announced. |
| 5/May/2024 | Grok-1.5 available on X. |
| 13/Aug/2024 | Grok-2 released. |
| 19/Feb/2025 | Grok-3 announced on xAI blog. |
| 9/Jul/2025 | Grok-4 announced via livestream. |
| 2/Aug/2025 | Grok Imagine (Grok Video) beta launched. |
| 28/Aug/2025 | Grok Code Fast 1 released. |
| Sep/2025 | Grok-5 begins training. |
| 17/Nov/2025 | Grok-4.1 announced on xAI blog. |
| 2026 | Grok-5 due. |
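The "five months" and "seven months" notes in the timeline can be checked with simple date arithmetic. This is a minimal sketch using only the dates listed in the table; the helper name and the 30.44-day average month are assumptions made for the illustration.

```python
from datetime import date

# Dates taken from the timeline table above.
PAUSE_LETTER = date(2023, 3, 22)
ANNOUNCEMENTS = {
    "Grok-0 33B": date(2023, 8, 18),
    "Grok-1 314B": date(2023, 11, 3),
}

# ASSUMPTION: an average month of 30.44 days (365.25 / 12, rounded).
AVG_DAYS_PER_MONTH = 30.44


def months_since(start: date, end: date) -> float:
    """Return the elapsed time between two dates in average-length months."""
    return (end - start).days / AVG_DAYS_PER_MONTH


for model, announced in ANNOUNCEMENTS.items():
    elapsed_days = (announced - PAUSE_LETTER).days
    elapsed_months = months_since(PAUSE_LETTER, announced)
    print(f"{model}: {elapsed_days} days, about {elapsed_months:.1f} months")
```

Rounded to whole months, the gaps come out at roughly five and seven months, which matches the notes in the table.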

Get The Memo

by Dr Alan D. Thompson · Be inside the lightning-fast AI revolution. Informs research at Apple, Google, Microsoft · Bestseller in 147 countries.

Artificial intelligence that matters, as it happens, in plain English.

Alan D. Thompson is a world expert in artificial intelligence, advising everyone from Apple to the US Government on integrated AI. Throughout Mensa International’s history, both Isaac Asimov and Alan held leadership roles, each exploring the frontier between human and artificial minds. His landmark analysis of post-2020 AI—from his widely-cited Models Table to his regular intelligence briefing The Memo—has shaped how governments and Fortune 500s approach artificial intelligence. With popular tools like the Declaration on AI Consciousness, and the ASI checklist, Alan continues to illuminate humanity’s AI evolution. Technical highlights.

This page last updated: 11/Jan/2026. https://lifearchitect.ai/grok/