A Comprehensive Analysis of US Science Datasets


Alan D. Thompson

LifeArchitect.ai

January 2026

75+ pages, including extended matter.

The report


(Available exclusively to full subscribers of The Memo.)

Abstract

This report presents an independent analysis of the United States Genesis Mission, a new initiative to train frontier AI models on integrated Department of Energy scientific datasets. We identify and characterize public and likely ‘Tier 1’ datasets across biology, climate, fusion, materials, physics, security, space, and systems, estimating a combined scale of multiple exabytes, or roughly one quintillion tokens of physical-world data. In practical terms, this is about 14,000× larger than the estimated training dataset used for GPT-5, based on independent analysis by LifeArchitect.ai. Centralizing these datasets bypasses institutional bottlenecks and exposes AI systems directly to experimental and simulated reality. The work builds on several LifeArchitect.ai dataset reports, including What’s in my AI? (2022), which was subsequently referenced in government and industry submissions addressing dataset provenance in the context of model control and policy. The Genesis Mission supplements text-based data with physical-world data for frontier AI models, marking the point where science enables artificial intelligence while artificial intelligence enables science.

Contents

1. Background

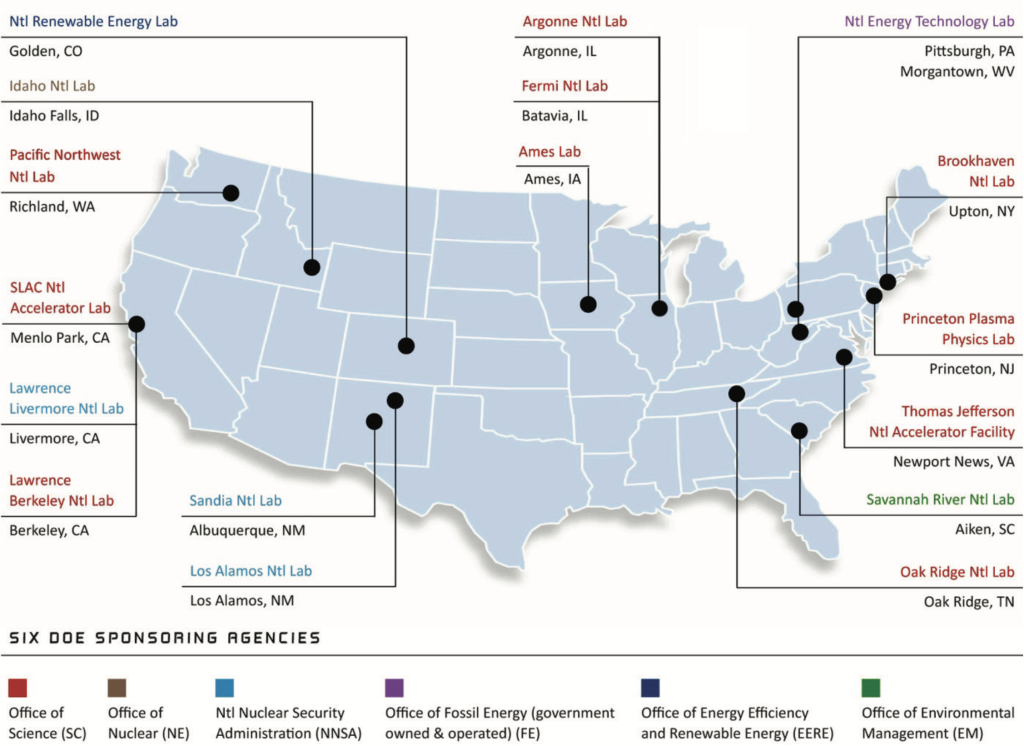

2. Labs and partners

2.1. Anthropic

2.2. Google

2.3. OpenAI

2.4. xAI

3. Timeline

4. DOE public datasets

5. Likely DOE ‘Tier 1’ datasets

5.1. Accelerating Therapeutics for Medicine

5.2. Advanced Photon Source Upgrade X-ray

5.3. Atmospheric Radiation Measurement

5.4. Community Ice CodE / Energy Exascale Earth System Model

5.5. Continuous Electron Beam Accelerator

5.6. Critical Materials Innovation

5.7. Deep Underground Neutrino Experiment

5.8. Earth System Grid Federation

5.9. Energy Data eXchange

5.10. Environmental Molecular Sciences Laboratory

5.11. Joint Genome Institute

5.12. Hardware/Hybrid Accelerated Cosmology Code

5.13. Large Hadron Collider Compact Muon Solenoid Tier-1

5.14. Legacy Survey of Space and Time

5.15. Linac Coherent Light Source II

5.16. Materials Data Facility

5.17. Materials Project

5.18. Million Veteran Program

5.19. National Ignition Facility Shot Data

5.20. National Solar Radiation Database

5.21. National Spherical Torus Experiment

5.22. National Synchrotron Light Source II

5.23. Relativistic Heavy Ion Collider

5.24. Savannah River Site Environmental Monitoring and Surveillance Data

5.25. Stealth/non-public/top secret dataset

5.26. Stockpile Stewardship

5.27. Summit/Frontier I/O Logs

5.28. Viral Genomics

5.29. Wind Integration National Dataset

5.30. Z Pulsed Power Facility

6. From words to world data

7. Why scientific data benefits everyone

8. Mapping Genesis Mission data to the ASI checklist

8.1. Phase 1: Early ASI, Discovery, and Simulation

8.2. Phase 2: Governance and Economic Transformation

8.3. Phase 3: Physical World Integration

9. Hypothetical prompts to a Genesis system

9.1. Global energy transition and climate stability

9.2. Accelerated molecular discovery for longevity

9.3. Room-temperature superconductivity and material synthesis

9.4. Commercial plasma energy generation

9.5. Systemic risk and national security defense

9.6. BHAG 1. Biological immortality through proteomic correction

9.7. BHAG 2. Direct matter-to-energy conversion, mass–energy parity

9.8. BHAG 3. Instantaneous global neural synchronization

9.9. BHAG 4. Total resource abundance via atomic reconfiguration

10. Dataset sovereignty and model ownership discussion

11. Conclusion

12. Further reading

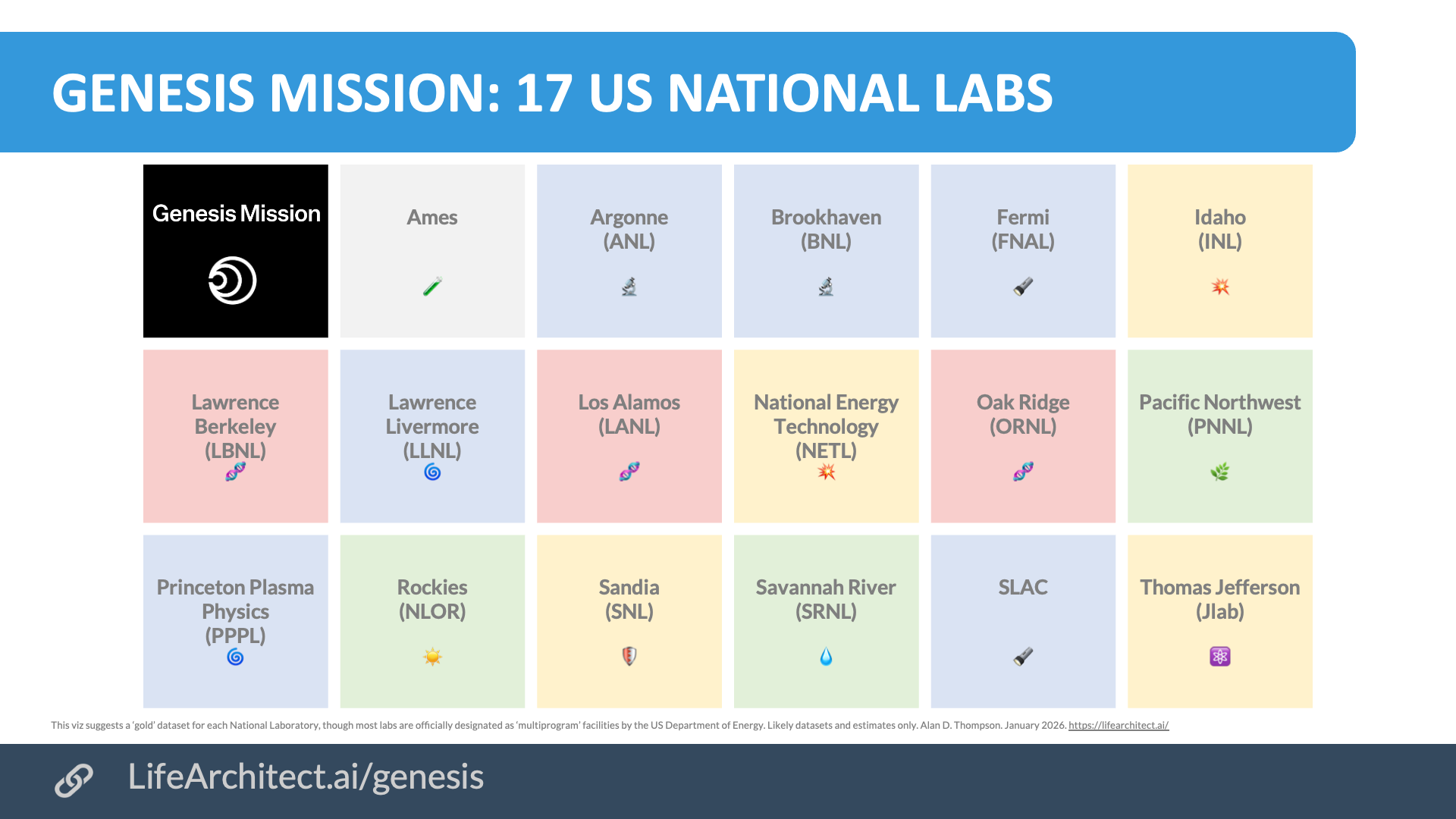

13. Appendix A: Genesis Mission: 17 US National Labs

14. Appendix B: The State of the DOE National Laboratories: 2020 edition

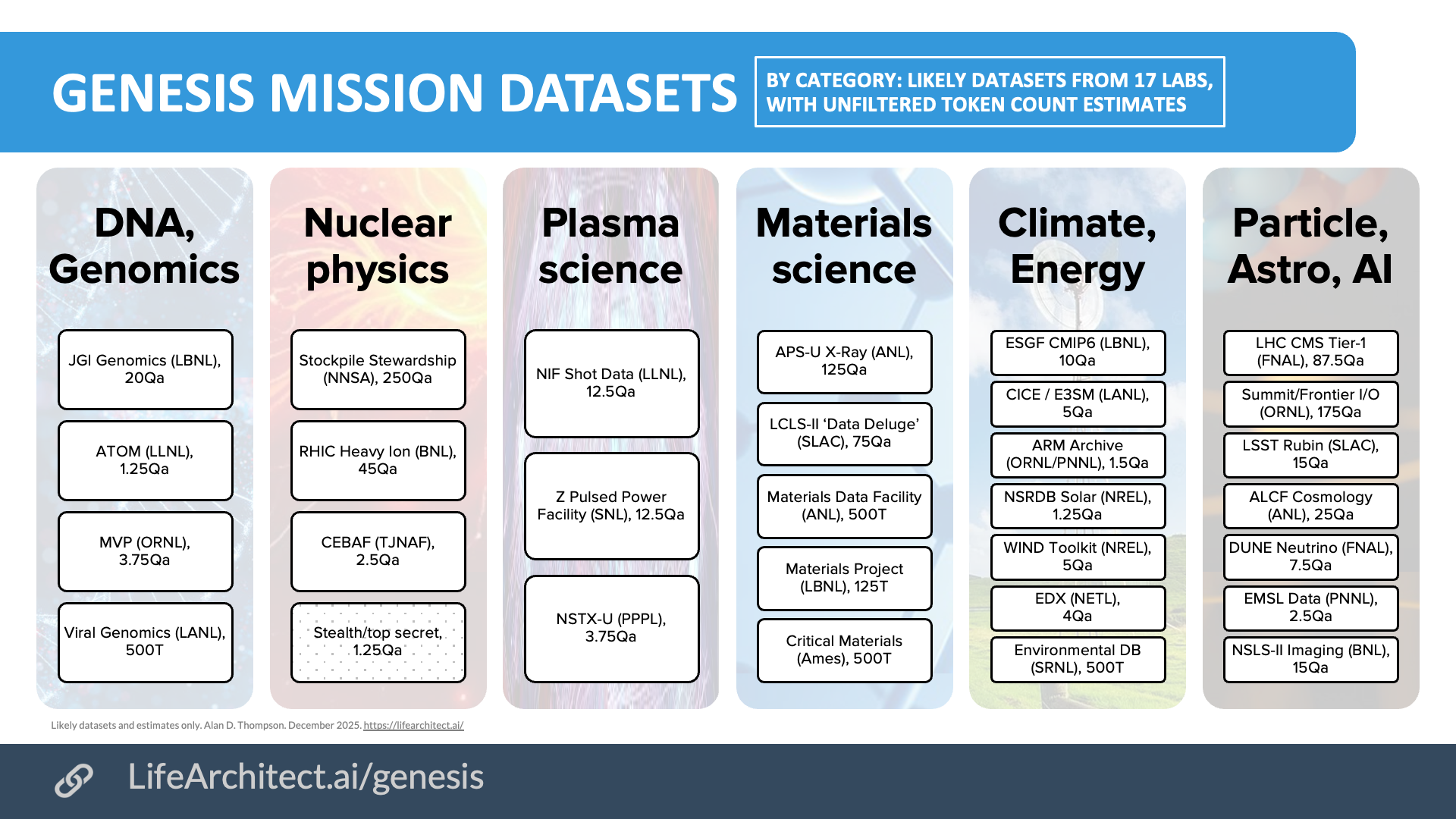

Datasets (estimates by LifeArchitect.ai)

Datasets likely to be identified as part of the Genesis Mission; all working and estimates by LifeArchitect.ai. For a description of each dataset, see Dataset contents in plain English.

| # | Dataset | Lab | Field | Type | Size | Tokens | Quote | Source |
|---|---|---|---|---|---|---|---|---|
| 1 | Accelerating Therapeutics for Medicine (ATOM) | Livermore | Biology/Pharma | 🟥 | 5PB | 1.25Qa | “ATOM consortium… transforming drug discovery… massive molecular libraries.” | LLNL ATOM |
| 2 | Advanced Photon Source Upgrade X-ray (APS-U) | Argonne | Materials/Imaging | 🟨 | 500PB | 125Qa | “The upgraded APS will generate 2-3 orders of magnitude more data… reaching exabytes over its lifetime.” | ANL APS-U |
| 3 | Atmospheric Radiation Measurement (ARM) | Oak Ridge | Atmospheric | 🟩 | 6PB | 1.5Qa | “Atmospheric Radiation Measurement… 30 years of continuous sensor data for climate AI.” | ARM.gov |
| 4 | Community Ice CodE / Energy Exascale Earth System Model (CICE/E3SM) | Los Alamos | Climate/Ocean | 🟩 | 20PB | 5Qa | “Energy Exascale Earth System Model… high-res ocean/ice simulation data.” | E3SM |
| 5 | Continuous Electron Beam Accelerator (CEBAF) | JLab | Nuclear Physics | 🟦 | 10PB | 2.5Qa | “Continuous Electron Beam Accelerator Facility… probing the quark-gluon structure of matter.” | JLab |
| 6 | Critical Materials Innovation (CMI) | Ames | Rare Earths | 🟨 | 2PB | 500T | “CMI data… thermodynamic and phase diagram data for rare earth substitution.” | Ames CMI |
| 7 | Deep Underground Neutrino Experiment (DUNE) | Fermilab | Particle Physics | 🟦 | 30PB | 7.5Qa | “Deep Underground Neutrino Experiment… massive liquid argon TPC image data for CNNs.” | DUNE Science |
| 8 | Earth System Grid Federation (ESGF) | Berkeley | Climate Change | 🟩 | 40PB | 10Qa | “Earth System Grid Federation… global repository for CMIP6… vital for climate AI twins.” | ESGF |
| 9 | Energy Data eXchange (EDX) | NETL | Fossil/Carbon | ⬜ | 16PB | 4Qa | “Energy Data eXchange… subsurface data for carbon sequestration and oil recovery.” | NETL EDX |
| 10 | Environmental Molecular Sciences Lab (EMSL) | Pacific Northwest | Molecular Science | 🟨 | 10PB | 2.5Qa | “Environmental Molecular Sciences Lab… mass spec and microscopy data for bio-earth systems.” | EMSL |
| 11 | Hardware/Hybrid Accelerated Cosmology Code (HACC) | Argonne | Cosmology/Physics | 🟦 | 100PB | 25Qa | “Frontier-E… generated > 100 PB of data… establishing a new standard of end-to-end performance.” | ALCF Cosmology, Nov/2025 update |
| 12 | Joint Genome Institute (JGI) | Berkeley | Biology/Genomics | 🟥 | 80PB | 20Qa | “JGI creates petabytes of sequence data… DNA is ‘nature’s language’ (1 base = 1 token).” | JGI DOE |
| 13 | Large Hadron Collider CMS Tier-1 (LHC CMS) | Fermilab | High Energy Physics | 🟦 | 350PB | 87.5Qa | “Fermilab hosts the largest tier-1 computing center for CMS… managing exabyte-scale archives.” | FNAL Computing |
| 14 | Legacy Survey of Space and Time (LSST) | SLAC | Astrophysics | 🟪 | 60PB | 15Qa | “Rubin Observatory Legacy Survey of Space and Time… 20TB/night… processing pipeline at SLAC.” | Rubin Obs |
| 15 | Linac Coherent Light Source II (LCLS-II) | SLAC | X-ray/Quantum | 🟨 | 300PB | 75Qa | “LCLS-II will deliver 8,000x more data… ‘The Data Deluge’… requires edge AI to manage.” | SLAC LCLS |
| 16 | Materials Data Facility (MDF) | Argonne | Materials Science | 🟨 | 2PB | 500T | “A scalable repository for publishing materials data… enabling ML discovery loops.” | MDF |
| 17 | Materials Project | Berkeley | Chemistry | 🟨 | 0.5PB | 125T | “Information on over 150,000 materials… the ‘Google’ of materials properties.” | Materials Project |
| 18 | Million Veteran Program (MVP) | Oak Ridge | Health/Genomics | 🟥 | 15PB | 3.75Qa | “Million Veteran Program… one of the world’s largest genomic databases linked to health records.” | VA/ORNL |
| 19 | National Ignition Facility Shot Data (NIF) | Livermore | Fusion/HED | 🟧 | 50PB | 12.5Qa | “National Ignition Facility… data from fusion ignition shots used to calibrate simulation AI.” | LLNL NIF |
| 20 | National Solar Radiation Database (NSRDB) | Rockies | Solar Energy | 🟪 | 5PB | 1.25Qa | “National Solar Radiation Database… physics-based modeling spanning decades.” | NSRDB |
| 21 | National Spherical Torus Experiment Upgrade (NSTX-U) | Princeton Plasma | Plasma Physics | 🟧 | 15PB | 3.75Qa | “Spherical Torus experiment… microsecond-resolution sensor data for fusion control AI.” | PPPL NSTX-U |
| 22 | National Synchrotron Light Source II (NSLS-II) | Brookhaven | Nanomaterials | 🟨 | 60PB | 15Qa | “NSLS-II data rates require AI-driven streaming analysis… approaching 1 PB/experiment.” | BNL News |
| 23 | Relativistic Heavy Ion Collider (RHIC) | Brookhaven | Nuclear Physics | 🟦 | 180PB | 45Qa | “RHIC & ATLAS Tier-1 center store hundreds of petabytes of collision data.” | BNL SDCC |
| 24 | Savannah River Site Environmental Monitoring (SRS-EM) | Savannah River | Ecology/Waste | 🟩 | 2PB | 500T | “Savannah River Site environmental monitoring… soil, water, and waste processing history.” | SRNL |
| 25 | Stealth/non-public/top secret dataset | — | — | ⬛ | 5PB | 1.25Qa | | |
| 26 | Stockpile Stewardship | Los Alamos | Nuclear Security | ⬛ | 1000PB | 250Qa | “The NNSA labs hold the world’s largest classified archives… necessary for ‘trusted’ AI models.” | NNSA ASC |
| 27 | Summit/Frontier Input/Output Logs | Oak Ridge | HPC Systems | ⬜ | 700PB | 175Qa | “Exascale I/O logs… analyzing system performance and scientific throughput of Frontier.” | OLCF |
| 28 | Viral Genomics | Los Alamos | Epidemiology | 🟥 | 2PB | 500T | “HIV/Influenza/COVID databases… sequencing data for vaccine design AI.” | LANL pathogens |
| 29 | Wind Integration National Dataset (WIND) | Rockies | Wind Energy | 🟩 | 20PB | 5Qa | “2TB per year per region… total archives span terabytes to petabytes for grid planning.” | NREL WIND |
| 30 | Z Pulsed Power Facility | Sandia | HED Physics | 🟧 | 50PB | 12.5Qa | “World’s most powerful pulsed power facility… extreme conditions data for material science.” | Sandia Z |

Dataset size (unfiltered):

Total storage (PB): ~3,635.5PB (petabytes)

Total storage (EB): ~3.6EB (exabytes)

Total tokens (T): ~908,875T (trillion)

Total tokens (Qa): ~908.9Qa (quadrillion)

Total tokens (Qi): ~0.91Qi (quintillion)

Source: LifeArchitect.ai

* All token counts are estimates, using the conservative text conversion 1PB ≈ 250T tokens ≈ 0.25Qa tokens, which works out to about 4 bytes per token. This conversion factor is a simplification for non-linguistic scientific data, which does not tokenize like English text, but it is used here deliberately to keep figures round, comparable, and easy to reason about across datasets. Calculations are informed but rough estimates. For similar working, see my 2022 paper: What’s in my AI? A Comprehensive Analysis of Datasets Used to Train GPT-1, GPT-2, GPT-3, GPT-NeoX-20B, Megatron-11B, MT-NLG, and Gopher.
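Because every token figure comes from a single multiplication, the per-dataset values and the totals above are easy to re-check. The following is a minimal Python sketch, assuming only the 1PB ≈ 250T heuristic stated in the note; the sizes are copied from the table, and the dictionary keys are shorthand labels chosen here for illustration. It also prints the bytes-per-token ratio that the heuristic implies.

```python
# Per-dataset storage estimates (PB), copied from the dataset table.
SIZES_PB = {
    "ATOM": 5, "APS-U": 500, "ARM": 6, "CICE/E3SM": 20, "CEBAF": 10,
    "CMI": 2, "DUNE": 30, "ESGF": 40, "EDX": 16, "EMSL": 10,
    "HACC": 100, "JGI": 80, "LHC CMS": 350, "LSST": 60, "LCLS-II": 300,
    "MDF": 2, "Materials Project": 0.5, "MVP": 15, "NIF": 50, "NSRDB": 5,
    "NSTX-U": 15, "NSLS-II": 60, "RHIC": 180, "SRS-EM": 2, "Stealth": 5,
    "Stockpile Stewardship": 1000, "Summit/Frontier I/O": 700,
    "Viral Genomics": 2, "WIND": 20, "Z": 50,
}

# Report's text heuristic: 1 PB is treated as 250 trillion tokens.
TOKENS_T_PER_PB = 250


def tokens_trillion(size_pb: float) -> float:
    """Convert petabytes to trillions of tokens using the heuristic."""
    return size_pb * TOKENS_T_PER_PB


total_pb = sum(SIZES_PB.values())
total_t = tokens_trillion(total_pb)

# 1 PB = 10^15 bytes; 250T tokens = 2.5 x 10^14 tokens.
bytes_per_token = 1e15 / (TOKENS_T_PER_PB * 1e12)

print(f"Total storage: {total_pb:,} PB = {total_pb / 1000:.1f} EB")
print(f"Total tokens: {total_t:,.0f}T = {total_t / 1000:.1f}Qa = {total_t / 1e6:.2f}Qi")
print(f"Implied bytes per token: {bytes_per_token:.0f}")
```

Swapping in a different bytes-per-token assumption (for example, one byte per DNA base) only requires changing `TOKENS_T_PER_PB`, which makes the sensitivity of the headline figure to this single constant explicit.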

Viz

Download source (PDF)


Permissions: Yes, you can use these visualizations anywhere; please leave the citation intact.

Dataset sovereignty and model ownership discussion (extract from Section 10, p38–39 of the report)

My earlier analyses of frontier AI training datasets were initially technical in scope, focused on reconstructing how models were trained in the absence of formal disclosure. As questions of ownership, reuse, and control moved into policy and regulatory arenas, that work began to circulate within government and industry discussions of large-scale training data. The Genesis Mission brings this dynamic into the open by placing dataset aggregation itself under explicit public authority.

A structural oddity sits at the center of the Genesis Mission: the US Federal government controls the highest-value scientific datasets, but private frontier labs do the model training. That split is neither a normal market arrangement nor a standard government procurement pattern. It appears to put the state in control of the valuable input while treating the valuable output, the model weights, as ordinary private property.

This tension has precedent. Roughly nine months before ChatGPT was announced, my paper What’s in my AI? documented the training mixtures used in successive GPT-n class models for a narrow analytical purpose. That work later appeared in formal copyright policy submissions. In one filing, News Corp cites the paper in support of claims about the presence of news sources in major webtext corpora and the role of high-quality news in improving model reliability, in a submission that explicitly references the Wall Street Journal as part of its publisher group.

This context matters for the Genesis Mission. When the government owns the highest-value training datasets, the argument surface shifts from ‘what went into the model?’ to ‘what rights attach to the model trained on government-owned inputs?’

A generation or two later, the implications become direct. If GPT-7, Gemini 5, or Claude 6 class models are trained on Genesis Mission datasets, dataset ownership starts to function as a proxy for model ownership. The state may not run the training job day-to-day, but it can set access conditions, approve or deny training, constrain reuse, and shape who can deploy or fine-tune. In effect, the weights become a state-anchored asset operated by private labs.

A scenario presented in the acclaimed AI 2027 report captures how quickly this kind of shift becomes explicit once the system is treated as strategic. After a major model-weight theft scenario, it states: ‘The White House puts [the frontier AI lab] on a shorter leash and adds military and intelligence community personnel to their security team.’

Once the system is brought under direct state oversight, formal ownership matters less than effective control. The Genesis Mission structure points in that direction from the outset. Model weights may reside inside a private lab, but state control over data access, data security, and model training conditions places their use increasingly within a public authority frame.

At a longer horizon, even these ownership distinctions are likely to be temporary. As outlined in my ASI checklist, artificial superintelligence systems that exceed human capability across science, economics, and coordination would not remain confined to national borders or conventional state authority, because governance itself would likely become an AI function rather than a human one. Dr Demis Hassabis gestured toward this shift in 2024 when he noted that even money and company constructs may cease to make sense in a post-ASI world. In that context, data sovereignty and model ownership describe an intermediate coordination problem rather than a durable political structure: intelligence becomes the governing layer, and territorial boundaries lose operational and conceptual relevance.

Updates (archived)


24/Nov/2025 (Day 0): Genesis Mission launched by US Executive Order. Timeline milestones appear below…

18/Dec/2025: OpenAI, Google, and Anthropic sign separate MOUs to join the project as lead industry partners (plus an OpenAI science letter to DOE).

18/Dec/2025: 24 MOUs signed (‘The organizations that have signed memorandums of understanding (MOUs) as of today have either expressed interest to DOE in response to an RFI or have active projects with DOE and the National Laboratories for activities related to the Genesis Mission, and any products produced for the Genesis Mission will be architecture-agnostic.’): Accenture, AMD, Anthropic, Armada, Amazon Web Services, Cerebras, CoreWeave, Dell, DrivenData, Google, Groq, Hewlett Packard Enterprise, IBM, Intel, Microsoft, NVIDIA, OpenAI, Oracle, Periodic Labs, Palantir, Project Prometheus, Radical AI, xAI, XPRIZE.

24/Dec/2025: A nuclear developer proposes repurposing decommissioned reactors from Navy warships to support the US grid and meet the growing energy demands of the AI industry. The project aims to use two retired reactors for a data center in Oak Ridge, Tennessee, as part of the White House’s Genesis Mission, potentially generating 450–520MW. (Bloomberg, Tom’s Hardware)

23/Jan/2026 (+60 days): The Secretary of Energy submits a detailed list of at least 20 national science and technology challenges to the Assistant to the President for Science and Technology.

22/Feb/2026 (+90 days): The Secretary identifies available Federal computing, storage, and networking resources, including DOE supercomputers and cloud-based systems, to support the Mission.

24/Mar/2026 (+120 days): The Secretary identifies initial data and model assets and develops a plan for incorporating datasets from federally funded research and other sources.

22/Jul/2026 (+240 days): The Secretary reviews capabilities across DOE national laboratories for robotic facilities able to engage in AI-directed experimentation and manufacturing.

21/Aug/2026 (+270 days): The Secretary seeks to demonstrate an initial operating capability of the American Science and Security Platform for at least one identified national challenge.

24/Nov/2026 (+1 year): The Secretary submits the first annual report to the President describing the Platform’s status, user engagement, and scientific outcomes.
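The day offsets in this timeline are calendar days counted from Day 0 (24/Nov/2025). A minimal sketch using Python’s standard `datetime` module reproduces the listed dates; the milestone labels are shortened paraphrases chosen here, and the +1 year report is computed by advancing the calendar year rather than by counting days.

```python
from datetime import date, timedelta

DAY_0 = date(2025, 11, 24)  # Genesis Mission Executive Order (Day 0)

# Calendar-day offsets from Day 0, with shortened milestone labels.
MILESTONES = {
    60: "National S&T challenges list submitted",
    90: "Computing, storage, and networking resources identified",
    120: "Initial data and model assets identified",
    240: "Robotic laboratory capabilities reviewed",
    270: "Initial operating capability demonstrated",
}


def milestone_date(offset_days: int) -> date:
    """Return the calendar date that falls offset_days after Day 0."""
    return DAY_0 + timedelta(days=offset_days)


# The first annual report is due one calendar year after Day 0.
annual_report = DAY_0.replace(year=DAY_0.year + 1)

for offset, label in MILESTONES.items():
    print(f"+{offset:>3} days  {milestone_date(offset):%d/%b/%Y}  {label}")
print(f"+1 year    {annual_report:%d/%b/%Y}  First annual report")
```

Because 2026 is not a leap year, the +1 year date also equals Day 0 plus 365 days; `replace(year=...)` simply states the intent more directly.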

Labs at a glance (from report)


| # | Short name | Acronym | Full name | Type | Computing | Genetics | Materials | Physics | Power | Weapons |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Ames | — | Ames National Laboratory | Single-Program Science | | | 💎 | | | |
| 2 | Argonne | ANL | Argonne National Laboratory | Multipurpose Science | 🖥️ | 🧬 | 💎 | ⚛️ | ⚡ | |
| 3 | Berkeley | LBNL | Lawrence Berkeley National Laboratory | Multipurpose Science | 🖥️ | 🧬 | 💎 | ⚛️ | | |
| 4 | Brookhaven | BNL | Brookhaven National Laboratory | Multipurpose Science | 🖥️ | 🧬 | 💎 | ⚛️ | ⚡ | |
| 5 | Fermilab | FNAL | Fermi National Accelerator Laboratory | Single-Program Science | 🖥️ | | | ⚛️ | | |
| 6 | Idaho | INL | Idaho National Laboratory | Energy Technology | 🖥️ | | 💎 | | ⚡ | 🛡️ |
| 7 | Jefferson | TJNAF / JLab | Thomas Jefferson National Accelerator Facility | Single-Program Science | 🖥️ | | | ⚛️ | | |
| 8 | Livermore | LLNL | Lawrence Livermore National Laboratory | Multipurpose Security | 🖥️ | 🧬 | 💎 | ⚛️ | ⚡ | 🛡️ |
| 9 | Los Alamos | LANL | Los Alamos National Laboratory | Multipurpose Security | 🖥️ | 🧬 | 💎 | ⚛️ | ⚡ | 🛡️ |
| 10 | NETL | NETL | National Energy Technology Laboratory | Energy Technology | 🖥️ | | 💎 | | ⚡ | |
| 11 | Oak Ridge | ORNL | Oak Ridge National Laboratory | Multipurpose Science | 🖥️ | 🧬 | 💎 | ⚛️ | ⚡ | 🛡️ |
| 12 | Pacific Northwest | PNNL | Pacific Northwest National Laboratory | Multipurpose Science | 🖥️ | 🧬 | 💎 | | ⚡ | 🛡️ |
| 13 | Princeton Plasma | PPPL | Princeton Plasma Physics Laboratory | Single-Program Science | 🖥️ | | | ⚛️ | ⚡ | |
| 14 | Rockies | NLOR | National Laboratory of the Rockies | Energy Technology | 🖥️ | | 💎 | | ⚡ | |
| 15 | Sandia | SNL | Sandia National Laboratories | Multipurpose Security | 🖥️ | 🧬 | 💎 | | ⚡ | 🛡️ |
| 16 | Savannah River | SRNL | Savannah River National Laboratory | Multipurpose Environmental | | | 💎 | | ⚡ | 🛡️ |
| 17 | SLAC | SLAC | SLAC National Accelerator Laboratory | Single-Program Science | 🖥️ | 🧬 | 💎 | ⚛️ | | |

Partners (from report)


Showing initial collaborators announced 24/Nov/2025, as well as 24 MOUs signed 18/Dec/2025.

Office of Science (SC): 1. Ames, 2. Argonne, 3. Berkeley, 4. Brookhaven, 5. Fermi, 6. JLab, 7. Oak Ridge, 8. Pacific Northwest, 9. SLAC

Frontier AI labs: Anthropic, OpenAI, xAI

Other AI labs

National Nuclear Security Admin.: 10. Los Alamos, 11. Livermore, 12. Sandia

Critical minerals and energy innovation

Office of Fusion

Office of Nuclear Energy

Hydrocarbons and geothermal energy

Office of Environmental Management

Semiconductor and processor design: AMD, Cerebras, Groq, Intel, NVIDIA, SambaNova, Semiconductor Industry Association, Synopsys

Cloud and hardware systems

High-performance networking

Systems integration: Accenture, Armada, LILA, Nokia, Palantir, Scale AI, Siemens, XPRIZE

Rare earth and critical minerals: Albemarle, Critical Materials Recycling, MP Materials, Niron Magnetics, Periodic Labs, Phoenix Tailings, PMT Critical Metals, Ramaco

Quantum computing and software: Quantinuum, Qubit, RadiaSoft

Mapping and data support: DrivenData, Esri, Kitware

Aerospace and defense systems: Collins Aerospace (RTX/Raytheon), GE Aerospace, RTX (Raytheon)

Utility and grid operations

Materials synthesis and lab automation: Applied Materials, Chemspeed, Emerald Cloud Lab, New York Creates, Nusano, Project Prometheus, TdVib

Chemical modeling and R&D software

Highlights (archived)


The Genesis Mission datasets project is one of the most interesting advances in post-2020 frontier artificial intelligence. It combines 17 government laboratories with industry partners like OpenAI, Google, and Anthropic. Here are a few of my favourite highlights…

The Large Hadron Collider is involved. The LHC, on the border of France and Switzerland, produces immense streams of data, and much of the heavy lifting of analysis occurs in the United States. Fermilab (FNAL) hosts the largest Tier-1 computing center for the Compact Muon Solenoid (CMS) experiment, managing archives exceeding 350 petabytes. This setup means that some of the most complex physics experiments on Earth rely heavily on American computing to find meaning in the noise of particle collisions.

The Materials Project at Lawrence Berkeley National Laboratory acts as a search engine for the physical world. It contains data on over 150,000 inorganic compounds and millions of properties. Training AI on this archive allows the Genesis Mission to predict the behavior of substances that do not yet exist, a key step toward finding new battery chemistries and superconductors that work at room temperature.

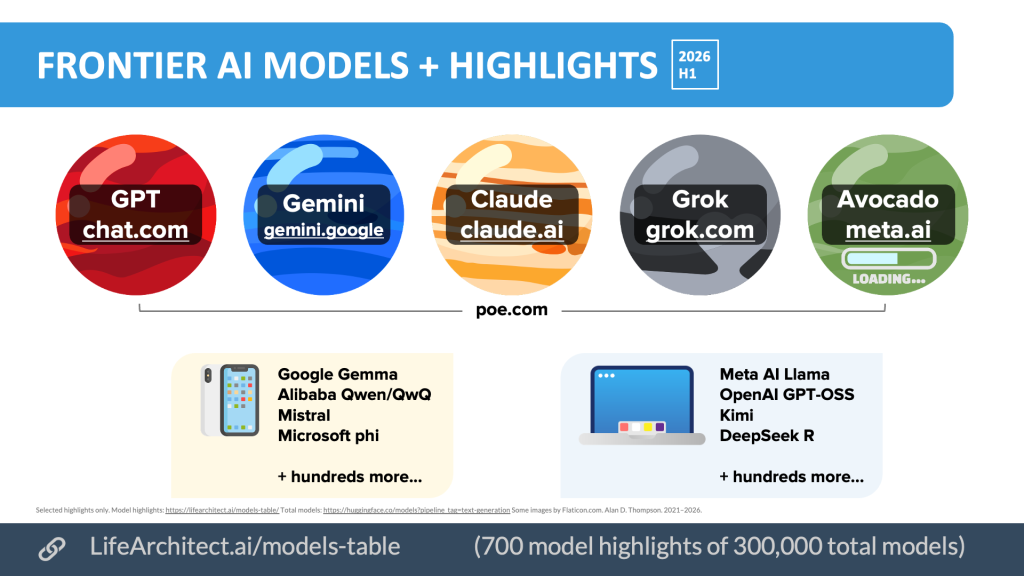

Meta AI is missing from the partners list (as of 22/Dec/2025). The list includes OpenAI, Google, Anthropic, and xAI, but not Meta AI, the remaining member of the ‘big 5’ frontier AI labs.

Anthropic has extensive experience working with the DOE. Anthropic maintains a deep technical partnership with Lawrence Livermore National Laboratory (LLNL, 9/Jul/2025) and the National Nuclear Security Administration (NNSA, 21/Aug/2025). In July 2025, LLNL expanded access to Claude for Enterprise to its entire staff of 10,000 scientists to support research in nuclear deterrence and energy security. The NNSA partnership led to the co-development of a specialized AI classifier that identified harmful queries related to nuclear weapons with 96% accuracy in preliminary testing.

Robots. The project incorporates robotic laboratories where AI models can directly control physical instruments. This allows for a closed-loop research cycle in which the AI proposes ideas and then tests them. Removing the delays of manual labor lets the machines run experiments around the clock, ‘in the dark’, without human intervention.
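The propose, test, and update cycle can be illustrated with a toy loop. This is a hypothetical sketch, not drawn from the report: `run_experiment` simulates a noisy instrument with an invented response curve, and a simple greedy hill climber stands in for whatever model-driven experiment design the Genesis robotic labs actually use.

```python
import random


def run_experiment(x: float) -> float:
    """Hypothetical stand-in for a robotic instrument: a noisy measurement
    of an invented response curve whose true optimum is at x = 0.7."""
    return -(x - 0.7) ** 2 + random.gauss(0, 0.001)


def closed_loop(n_rounds: int = 200, step: float = 0.1, seed: int = 0) -> float:
    """Run a propose -> test -> update loop with no human in between."""
    random.seed(seed)
    best_x = random.random()          # initial hypothesis in [0, 1)
    best_y = run_experiment(best_x)   # first measurement
    for _ in range(n_rounds):
        # Propose: perturb the current best setting, clipped to [0, 1].
        candidate = min(1.0, max(0.0, best_x + random.uniform(-step, step)))
        # Test: run the (simulated) experiment on the proposal.
        y = run_experiment(candidate)
        # Update: keep the proposal only if the measurement improved.
        if y > best_y:
            best_x, best_y = candidate, y
    return best_x


print(f"Best setting found: {closed_loop():.2f}")
```

In a real facility the proposal step would come from a trained model and the test step from physical hardware; the loop structure is the part this sketch is meant to show.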

The Genesis Mission has been described as a modern Manhattan Project. It coordinates 40,000 scientists and engineers across 17 locations to build a single discovery platform. This represents the most significant state-led scientific effort in history.

The total volume of information involved reaches into the exascale range. The term exascale has two related meanings, and the Genesis Mission spans both. The first refers to compute speed: a machine performing a quintillion calculations per second. The second refers to data capacity: a collection of information reaching the exabyte level, which is a quintillion bytes. The 17 national labs possess both the machines capable of these speeds and the archives that fill that capacity. With around one quintillion tokens estimated, the Genesis Mission datasets provide a deep pool of scientific knowledge. This allows models to learn the rules of nature from raw sensor data, creating a path toward discovery that does not depend on human text alone.
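Both senses of exascale share the SI prefix exa- (10^18). A short worked conversion, using only the LifeArchitect.ai storage and token estimates from the dataset table, shows where the ‘multiple exabytes’ and ‘around one quintillion tokens’ figures come from:

```latex
\begin{aligned}
1\,\text{EB} &= 10^{3}\,\text{PB} = 10^{18}\,\text{bytes},
  \qquad 1\,\text{exaFLOPS} = 10^{18}\,\text{FLOP/s} \\
3{,}635.5\,\text{PB} &= 3.6355 \times 10^{18}\,\text{bytes} \approx 3.6\,\text{EB} \\
908{,}875 \times 10^{12}\,\text{tokens} &= 9.08875 \times 10^{17}\,\text{tokens}
  \approx 0.91 \times 10^{18}\,\text{tokens}
\end{aligned}
```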

Bonus: Dataset analysis from LifeArchitect.ai has already been featured across the US DOE, including in the 2024 report ‘Enabling capabilities and resources’ (PDF, Apr/2024). The LifeArchitect.ai independent research on datasets, frontier LLMs, and superintelligence is used across government (G7, US Gov), think tanks (RAND, Brookings), frontier labs (Microsoft, Apple), and other organisations worldwide.

Dataset contents in plain English (archived)


1. Accelerating Therapeutics for Medicine

The ATOM dataset, developed in collaboration with national laboratories and pharmaceutical partners including GSK, provides molecular libraries and chemical property data used to accelerate preclinical drug discovery.

2. Advanced Photon Source Upgrade X-ray

The APS-U generates high-energy X-ray beams to produce ultra-high-resolution 3D imaging of materials at the atomic and molecular scale. This petabyte-scale dataset allows AI to analyze the structural characteristics of new materials and chemical reactions in real time.

3. Atmospheric Radiation Measurement

This archive contains over 30 years of continuous sensor data tracking radiation, cloud properties, and atmospheric chemistry. It serves as a critical training set for climate AI models to improve the accuracy of weather forecasting and long-term climate projections.

4. Community Ice CodE / Energy Exascale Earth System Model

These datasets consist of simulations of the Earth’s cryosphere and oceans, focusing on the complex interactions between sea ice and global climate systems. They provide the high-fidelity data necessary for ‘climate digital twins’ to predict future sea-level rise and polar changes.

5. Continuous Electron Beam Accelerator

CEBAF data captures the results of electron-nucleus collisions to probe the quark and gluon structure of protons and neutrons. This nuclear physics dataset is used to map the fundamental forces of the ‘strong interaction’ that holds the nucleus of the atom together.

6. Critical Materials Innovation

This dataset is a collection of thermodynamic, phase, and chemical property data for rare earth elements developed to secure the domestic supply chain. It aggregates information from over 12 years of research into magnetic and structural properties, allowing AI models to predict new alloy compositions that do not rely on scarce minerals.

7. Deep Underground Neutrino Experiment

DUNE is designed to record images of neutrino interactions within massive liquid argon time projection chambers located nearly a mile underground in South Dakota. At scale, the dataset supports automated reconstruction, anomaly detection, and inference workflows that allow frontier AI systems to learn directly from rare particle events and extract weak signals tied to matter-antimatter asymmetry.

8. Earth System Grid Federation

The Earth System Grid Federation aggregates the world’s most advanced climate model outputs and long-horizon simulations, forming the reference archive for global climate analysis across an international network of modeling centers. At scale, it allows frontier AI systems to learn coupled atmospheric, oceanic, and land processes directly from multinational simulation ensembles, supporting inference across uncertainty, feedback loops, and long-term planetary behavior.

9. Energy Data eXchange

EDX aggregates subsurface geological, geophysical, and engineering datasets used to support carbon capture, utilization, and storage, as well as broader energy and resource systems analysis. It brings together seismic surveys, well logs, core samples, reservoir models, and operational metadata, providing a unified view of subsurface structure and behavior. Frontier AI models can use this data to support scenario analysis for energy transition planning and large-scale infrastructure deployment.

10. Environmental Molecular Sciences Lab

EMSL provides mass spectrometry and microscopy data that characterizes molecular processes within biological and terrestrial systems. This dataset allows researchers to use AI to understand how microbes and plants affect carbon cycling and nutrient movement in the soil.

11. Hardware/Hybrid Accelerated Cosmology Code

The Hardware/Hybrid Accelerated Cosmology Code (HACC) produces simulations of the universe’s evolution, tracking billions of particles to model the formation of cosmic structures. This data provides a foundational ‘ground truth’ for astrophysicists using AI to interpret actual telescope observations of the dark universe.

12. Joint Genome Institute

JGI produces petabytes of DNA and RNA sequence data from plants, fungi, and microbes found in diverse environments. By treating genetic sequences as ‘nature’s language,’ AI models can be trained on this data to discover new enzymes for biofuel production or carbon sequestration.

13. Large Hadron Collider Compact Muon Solenoid Tier-1

This dataset contains records of particle collisions from the LHC, which is located in a 17-mile underground ring on the border of France and Switzerland. It is used by researchers to search for rare physical phenomena and to train AI to distinguish signal from background noise in subatomic physics.

14. Legacy Survey of Space and Time

The Rubin Observatory in Chile will generate a nightly ‘movie’ of the southern sky, capturing 20 terabytes of data every session to track moving or changing celestial objects. This dataset is used to train AI pipelines to automatically detect supernovae, asteroids, and distant galaxies in near real-time.

15. Linac Coherent Light Source II

LCLS-II uses ultrafast X-ray pulses to capture molecular movies of chemical bonds breaking and forming at the femtosecond scale. Because the data rates are so high, it requires edge AI to process and compress information instantly, enabling the study of quantum materials and rapid chemical reactions.

16. Materials Data Facility

The MDF is a scalable repository that aggregates experimental and computational materials science data from various institutions. It is specifically structured to enable machine learning discovery loops, allowing AI to suggest new material compositions with specific desired properties.

17. Materials Project

Described as a ‘Google’ for materials, this dataset provides calculated properties for over 150,000 inorganic compounds. It is a foundational dataset for training graph neural networks to predict the stability, conductivity, and hardness of yet-to-be-synthesized materials. This dataset is cited in research papers more than six times a day.

18. Million Veteran Program

The MVP links the genomic sequences and longitudinal electronic health records of over a million US veterans. This massive dataset enables AI to identify genetic markers for complex diseases, leading to more personalized precision medicine and targeted therapies.

19. National Ignition Facility Shot Data

NIF data records the outcomes of fusion ignition experiments where giant lasers compress hydrogen fuel to extreme temperatures and pressures. These datasets are used to calibrate high-energy-density physics simulations, helping scientists move closer to achieving sustainable, clean fusion energy.

20. National Solar Radiation Database

The NSRDB provides 30 years of solar radiation data and meteorological observations across the Americas. It is the primary training set for AI models used to predict solar power grid stability and optimize the placement of large-scale solar arrays.

21. National Spherical Torus Experiment

This dataset captures microsecond-resolution sensor data from plasma confinement experiments in a spherical tokamak, a magnetic fusion device designed to contain ultra-hot plasma in a closed loop. It is used to train real-time AI control systems that can predict and prevent plasma disruptions, which is critical for developing stable fusion power systems.

22. National Synchrotron Light Source II

NSLS-II produces extremely bright X-rays to image nanomaterials and biological structures at the nanometer scale. The resulting data is used to train AI to reconstruct 3D images from partial or noisy experimental scans, speeding up the discovery of more efficient battery materials.

23. Relativistic Heavy Ion Collider

RHIC data records the results of smashing gold ions together at nearly the speed of light to create a quark-gluon plasma. This dataset allows AI to model the behavior of matter under the most extreme temperature and density conditions possible in a laboratory.

24. Savannah River Site Environmental Monitoring

This dataset aggregates decades of environmental monitoring and surveillance data from the Savannah River Site, spanning soil, water, air, sediment, biota, and ecological measurements tied to nuclear materials processing, waste management, and cleanup. Together with subsurface characterization data collected at sites such as Savannah River and Hanford, this material is treated as a national asset to accelerate environmental cleanups, enhance domestic energy production, and support the extraction of critical minerals and materials.

25. Stealth/non-public/top secret dataset

There will be more than one ‘top secret’ classified dataset within the DOE remit. This entry functions as a placeholder for multiple archives that are not publicly available or acknowledged, likely spanning national security, weapons science, and sensitive simulation or experimental programs. Their inclusion signals that the visible Tier 1 list is incomplete by design, and that a portion of Genesis Mission-relevant data remains undisclosed due to classification and access controls rather than absence or lack of scale.

26. Stockpile Stewardship

This classified archive, commonly associated with the Stockpile Stewardship Program and led operationally by Los Alamos National Laboratory in coordination with Lawrence Livermore and Sandia, aggregates data spanning the full lifecycle of the US nuclear weapons program from the Manhattan Project era of the early 1940s to the present. It includes empirical measurements from atmospheric nuclear tests conducted between 1945 and 1962, underground tests from 1963 until the US testing moratorium in 1992, and decades of post-testing laboratory work such as subcritical experiments, hydrodynamic tests, materials aging studies, radiation diagnostics, and weapons component surveillance.

27. Summit/Frontier I/O Logs

This dataset consists of the input/output logs and system performance metrics from some of the world’s most powerful supercomputers, including the exascale Frontier system. AI models analyze these logs to optimize scientific workflows, detect hardware failures before they happen, and improve the efficiency of massive scientific computations.
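Detecting failures before they happen usually starts with flagging readings that break from the recent baseline. A rolling z-score is one simple way to do this. The sketch below assumes a single hypothetical throughput metric, a 5-sample window, and a 3-sigma threshold. It is an illustration of the general idea, not how DOE facilities actually monitor Summit or Frontier.

```python
from statistics import mean, stdev


def flag_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value deviates more than `threshold` standard
    deviations from the mean of the preceding `window` readings."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]          # trailing baseline only
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical per-minute I/O throughput readings (GB/s), not real log data.
throughput = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 2.1, 10.0, 10.1]
print(flag_anomalies(throughput))  # prints [6]
```

Once the anomalous reading enters the trailing window it inflates the standard deviation, so the readings immediately after it are judged against a looser baseline. Production systems typically use more robust statistics or learned models for that reason.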

28. Viral Genomics

This repository hosts the genomic sequences of thousands of viral strains, including HIV, influenza, and SARS-CoV-2. It played a direct role in variant tracking and mutation analysis during COVID-19, making it a high-signal training corpus for frontier LLM-class systems that learn evolutionary dynamics, sequence structure, and rapid biological change beyond text alone.

29. Wind Integration National Dataset

The WIND Toolkit provides wind speed and power estimates across vast geographic regions and timeframes. It is a vital dataset for training AI to forecast wind power availability, helping utility companies integrate renewable energy into the national power grid more reliably.
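Wind power estimates of this kind generally depend on a turbine power curve that maps wind speed to electrical output. The sketch below uses an idealized curve, not the WIND Toolkit's own method. The cut-in speed (3 m/s), rated speed (12 m/s), cut-out speed (25 m/s), and 2 MW rating are hypothetical defaults. Cubic scaling between cut-in and rated speed is a common simplification.

```python
def turbine_power_kw(wind_speed_ms, cut_in=3.0, rated_speed=12.0,
                     cut_out=25.0, rated_power_kw=2000.0):
    """Idealized turbine power curve; all parameters are hypothetical defaults."""
    if wind_speed_ms < cut_in or wind_speed_ms >= cut_out:
        return 0.0  # too little wind, or turbine shut down for safety
    if wind_speed_ms >= rated_speed:
        return rated_power_kw  # output capped at the nameplate rating
    # Between cut-in and rated speed, power scales roughly with wind speed cubed.
    frac = (wind_speed_ms ** 3 - cut_in ** 3) / (rated_speed ** 3 - cut_in ** 3)
    return rated_power_kw * frac


# Hypothetical hourly wind speeds (m/s); summing 1-hour steps gives kWh.
hourly_speeds = [2.5, 5.0, 8.0, 13.0, 26.0]
energy_kwh = sum(turbine_power_kw(v) for v in hourly_speeds)
print(f"Estimated energy: {energy_kwh:.1f} kWh")
```

The cubic term is why forecasting errors matter so much for utilities. A small error in predicted wind speed becomes a much larger error in predicted power below the rated speed.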

30. Z Pulsed Power Facility

The Z Pulsed Power Facility dataset captures data from extreme pulsed-power experiments that subject materials to massive magnetic fields and pressures. In addition to materials response, it records radiation output, plasma behavior, and shock propagation under conditions relevant to fusion and planetary interiors. For frontier AI systems, this data provides rare, high-fidelity examples of matter far from equilibrium, supporting model capability in contexts where small prediction errors carry outsized physical consequences.

Related datasets (archived)


- The ANL AuroraGPT datasets were analysed in a similar way: LifeArchitect.ai/AuroraGPT

- The Well: 15TB (~4.1T tokens) of physics simulations

“The Well” contains curated physics simulations from 16 scientific domains, each capturing fundamental equations that appear throughout nature, and all validated and/or generated by domain experts:

- Fluid dynamics & turbulence

- Supernova explosions

- Biological pattern formation

- Acoustic wave propagation

- Magnetohydrodynamics

Available at: https://github.com/PolymathicAI/the_well

- Multimodal Universe: 100TB (~27.5T tokens) of astronomical data

“Multimodal Universe” contains hundreds of millions of observations across multiple modalities, object types, and wavelengths. The data was collected from JWST, HST, Gaia, and several other major surveys, and unified in a single, ML-ready format.

Available at: https://github.com/MultimodalUniverse/MultimodalUniverse
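The token counts above are consistent with a simple size-to-token conversion. Dividing each dataset's size by its token estimate gives the implied bytes per token. The sketch assumes decimal units (1 TB = 10^12 bytes), so the 10^12 prefixes on terabytes and trillions of tokens cancel.

```python
def implied_bytes_per_token(size_tb, tokens_trillions):
    # TB and trillions share the 10^12 prefix, so it cancels in the ratio.
    return size_tb / tokens_trillions


for name, size_tb, tokens_t in [("The Well", 15, 4.1),
                                ("Multimodal Universe", 100, 27.5)]:
    print(f"{name}: {implied_bytes_per_token(size_tb, tokens_t):.2f} bytes/token")
# The Well: 3.66 bytes/token
# Multimodal Universe: 3.64 bytes/token
```

Both estimates imply roughly 3.6 bytes per token. Under the assumption that a similar tokenization applies, the same ratio offers a rough way to sanity-check other size-to-token conversions.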

See also:

What’s in my AI? A Comprehensive Analysis of Datasets Used to Train GPT-1, GPT-2, GPT-3, GPT-NeoX-20B, Megatron-11B, MT-NLG…


Alan D. Thompson

LifeArchitect.ai

March 2022

26 pages incl title page, references, appendix.

A Comprehensive Analysis of Datasets Likely Used to Train GPT-5


Alan D. Thompson

LifeArchitect.ai

August 2024

27 pages incl title page, references, appendices.

Mapping Genesis data to the ASI checklist (archived)


By mapping the Genesis datasets against Alan’s ASI checklist, we see that these exascale archives provide the physical substrate for the transition to superintelligence. To track the real-time progress of these milestones, visit LifeArchitect.ai/ASI.

Phase 1: Early ASI, Discovery, and Simulation

#1 & #2: Recursive hardware self-improvement achieved & Recursive code self-optimization achieved: The Summit/Frontier I/O Logs and ALCF Cosmology performance paths allow the system to observe and refine exascale computing efficiency, reaching autonomous improvement loops.

#3: First major simulation of a suggested improvement; convinces majority of humans: The ESGF (CMIP6) and NIF Shot Data provide the high-fidelity archives necessary to create a simulation of climate or energy stability so precise that it establishes a new standard of predictive power.

#6 & #7: First new discovery (i.e. a new theoretical concept) & First new physical invention (i.e. a new tool): By cross-referencing the Materials Project with APS-U X-ray imaging, the system identifies novel theoretical concepts and engineers new physical tools like non-silicon processors.

#8 & #9: First new element added to the periodic table & Novel computing materials developed (i.e. beyond silicon): Extreme-energy experiments recorded in the Z Pulsed Power Facility, CEBAF, and LCLS-II datasets provide the blueprints for superheavy elements and materials that surpass current semiconductor limits.

#12, #14 & #16: First mental health condition resolved, Majority of physical conditions able to be resolved by AI & Optimized biology at birth becomes standard (1M+ people): Linguistic and proteomic patterns in JGI, MVP, and ATOM allow for the resolution of chronic conditions through personalized molecular correction.

#17, #18 & #19: First new type of energy discovered, First new type of energy harnessed & First new type of energy storage: Using sensor streams from NSTX-U, NIF, and the WIND Toolkit, the system discovers and harnesses previously unrecognized types of energy while engineering storage with near-infinite density.

Phase 2: Governance and Economic Transformation

#27: Traditional economics surpassed; money deflates in value: The Materials Project and Critical Materials (Ames) datasets allow for the direct synthesis of rare resources from waste, removing the price floor of physical goods.

#32: Integrated international governance by AI: The Stockpile Stewardship (NNSA) and ESGF CMIP6 archives provide the global security and climate modeling necessary for a machine-led international stability pact.

Phase 3: Physical World Integration

#37 & #38: Waste management optimized; no more trash & Environmental issues resolved and environment optimized: The EDX and Environmental DB datasets provide the blueprints for total molecular recycling and the reversal of atmospheric carbon levels.

#45: New state of matter engineered: Extreme pressure and temperature data from the Z Pulsed Power Facility and NIF Shot Data provide the recipes for stable, non-natural states of matter like metallic hydrogen.

#46 & #47: First planet other than Earth optimized/terraformed & First planet other than Earth colonized: ALCF Cosmology and ESGF simulations serve as the foundation for managing the atmospheres and magnetospheres of other planets.

Hypothetical prompts to a Genesis model (archived)


1. Global energy transition and climate stability

Prompt: Cross-reference the ESGF (CMIP6) climate projections with the NSRDB and WIND Toolkit archives. Map the ideal placement for a global decentralized grid that maintains 100% uptime, using EDX data to identify sites for atmospheric carbon removal that utilize excess thermal output.

ASI Response: Mapping successful. By aligning atmospheric flow patterns with subsurface storage capacity, a global equilibrium is reachable within…

2. Accelerated molecular discovery for longevity

Prompt: Apply the linguistic patterns found in JGI and MVP to the molecular libraries in ATOM. Identify the specific genetic sequences responsible for cellular decay and propose a viral delivery vector, based on Viral Genomics structures, to distribute corrective sequences.

ASI Response: The Million Veteran records reveal a distinct sequence subset that, when paired with the following molecular structure…

3. Room-temperature superconductivity and material synthesis

Prompt: Analyze the APS-U X-ray Data and LCLS-II “Data Deluge” datasets alongside the Materials Project database. Identify a stable atomic lattice that exhibits zero electrical resistance at 295K, ensuring the material is composed of elements found in the Critical Materials inventory.

ASI Response: Superconductivity is a product of lattice geometry. The following nitrogen-doped lutetium structure remains stable under standard pressure…

4. Commercial plasma energy generation

Prompt: Synthesize the sensor logs from NIF Shot Data, NSTX-U, and the Z Pulsed Power Facility. Create a predictive model for plasma containment that uses edge-AI to adjust magnetic fields in real-time, preventing the instabilities recorded in previous experiments.

ASI Response: The instability patterns in the Princeton and Sandia data are predictable. By shifting to a non-linear magnetic pulse, a net energy gain of 400% is achievable…

5. Systemic risk and national security defense

Prompt: Using the Stockpile Stewardship archives and the frontier logs, simulate a global cyber-kinetic conflict. Identify the primary vulnerabilities in the domestic power grid and propose a self-healing software architecture that can withstand a multi-vector exascale attack.

ASI Response: Security resides in decentralization. The Frontier logs show that a distributed defense layer can absorb 99.8% of high-speed packet injections by shifting…

BHAG 1 (big, hairy, audacious goal!). Biological immortality through proteomic correction

Prompt: Use the JGI and EMSL Data to map the precise folding failures that lead to senescence. Cross-reference this with the MVP health records to identify the “immortality markers” in rare human lineages. Design a self-replicating nanobotic repair system, informed by NSLS-II Imaging, that resets the epigenetic clock of every cell in a living organism to a biological age of twenty-five.

ASI Response: Aging is an accumulated software error in the proteome. By introducing a synthetic corrective enzyme that targets the following mitochondrial DNA sequences, the decay process ceases…

BHAG 2. Direct matter-to-energy conversion (mass-energy parity)

Prompt: Analyze the high-energy density physics within the Z Pulsed Power Facility and NIF Shot Data alongside the subatomic quark-gluon structures recorded at CEBAF. Determine the exact resonance frequency required to induce a controlled decay of non-fissile waste into pure kinetic energy, bypassing the need for traditional nuclear fuel cycles and providing infinite, zero-emission power from common silica.

ASI Response: The transition from mass to energy does not require heavy isotopes. By applying a focused harmonic pulse to stable atomic nuclei, we can trigger a localized release of binding energy…

BHAG 3. Instantaneous global neural synchronization

Prompt: Leverage the ALCF Cosmology (HACC) simulations and the LHC CMS Tier-1 data to identify the quantum entanglement signatures of consciousness. Propose a method using LCLS-II “Data Deluge” photonics to establish a non-local communication layer between human brains, effectively removing the latency of language and resolving all human conflict through the total transparency of shared experience.

ASI Response: Language is a low-bandwidth bottleneck. The quantum signatures in the neural cortex are compatible with long-distance entanglement, allowing for the direct transfer of conceptual architectures…

BHAG 4. Total resource abundance via atomic reconfiguration

Prompt: Combine the Materials Project and Materials Data Facility libraries with the APS-U X-ray Data imaging. Design an “atomic assembler” that can rearrange the molecular structure of ocean plastic and industrial waste into high-value Critical Materials, such as neodymium or platinum, at the atomic level, rendering scarcity and mining obsolete.

ASI Response: Material scarcity is a failure of sorting, not a lack of atoms. By utilizing the following electromagnetic assembly sequence, any carbon-based waste can be restructured into crystalline lattices…

Older/unused material (archived)

All dataset reports by LifeArchitect.ai (most recent at top)

| Date | Type | Title |
|---|---|---|
| Dec/2025 | 📑 | Genesis Mission |
| Jan/2025 | 📑 | What's in Grok? |
| Jan/2025 | 💻 | NVIDIA Cosmos video dataset |
| Aug/2024 | 📑 | What's in GPT-5? |
| Jul/2024 | 💻 | Argonne AuroraGPT |
| Sep/2023 | 📑 | Google DeepMind Gemini: A general specialist |
| Feb/2023 | 💻 | Chinchilla data-optimal scaling laws: In plain English |
| Aug/2022 | 📑 | Google Pathways |
| Mar/2022 | 📑 | What's in my AI? |
| Sep/2021 | 💻 | Megatron the Transformer, and related language models |
| Ongoing... | 💻 | Datasets Table |


Alan D. Thompson is a world expert in artificial intelligence, advising everyone from Apple to the US Government on integrated AI. Throughout Mensa International’s history, both Isaac Asimov and Alan held leadership roles, each exploring the frontier between human and artificial minds. His landmark analysis of post-2020 AI—from his widely-cited Models Table to his regular intelligence briefing The Memo—has shaped how governments and Fortune 500s approach artificial intelligence. With popular tools like the Declaration on AI Consciousness, and the ASI checklist, Alan continues to illuminate humanity’s AI evolution. Technical highlights.

This page last updated: 21/Jan/2026. https://lifearchitect.ai/genesis/