The image above was generated by AI for this paper (GPT-4o)1Image generated in a few seconds, on 18 May 2024, text prompt by Alan D. Thompson, via GPT-4o (still calling DALL-E 3 as of mid-May 2024): ‘A HDR photograph of a beautiful sky with dramatic clouds during sunset. The clouds subtly form the shape of a baby in the womb.’

Alan D. Thompson

June 2024

Watch the video version of this paper at: https://youtu.be/8VXlseU6iYM

All reports in The sky is... AI retrospective report series (most recent at top)| Date | Report title |

| End-2025 | The sky is supernatural |

| Mid-2025 | The sky is delivering |

| End-2024 | The sky is steadfast |

| Mid-2024 | The sky is quickening |

| End-2023 | The sky is comforting |

| Mid-2023 | The sky is entrancing |

| End-2022 | The sky is infinite |

| Mid-2022 | The sky is bigger than we imagine |

| End-2021 | The sky is on fire |

‘I think [2024 AI] is under-hyped, not over-hyped. The arrival of intelligence of a non-human form is really a big deal for the world.’

— Dr Eric Schmidt, former Google CEO, board of Apple, Princeton, Mayo… (May 2024)2https://www.instagram.com/cnbc/reel/C6sQ31BIbwd/ & https://www.cnbc.com/video/2024/05/07/former-google-ceo-eric-schmidt-on-ai-potential-american-businesses-will-change-because-of-this.html

Author’s note: I provide visibility to family offices, major governments, research teams like RAND, and companies like Microsoft via The Memo: LifeArchitect.ai/memo.

For readers who have been with me for years, you’ll recall that very few companies were paying attention to AI in the early 2020s, despite the GPT-3 davinci model outperforming human averages across a range of tests.3https://lifearchitect.ai/the-sky-is-on-fire/ At the time, my big advisory clients were cautiously allocating small budgets—often around US$50,000—to pilot GPT-3 across fascinating use cases.

At the beginning of this year, OpenAI’s COO said4https://archive.md/SptzU that 2024 was ‘going to be the year of adoption for AI in the enterprise. We’re just seeing tremendous momentum.’ As a pair of vivid case studies within Big 4 multinational professional services firms, Deloitte rolled out a GPT-3.5-based chatbot to 75,000 of its employees,5https://www.deloitte.com/uk/en/about/press-room/deloitte-makes-new-ai-platform-available-to-disability-equality-charity.html while PwC purchased GPT enterprise licenses for 100,000 staff.6https://www.wsj.com/articles/pwc-set-to-become-openais-largest-chatgpt-enterprise-customer-2eea1070 At a retail cost of $60/user/month, PwC’s licensing fees would be $72M/year, and they could soon triple that to cover all 328,000 staff.

Last year, one report found that only 27% of professionals were using GPT.7https://www.fishbowlapp.com/insights/chatgpt-sees-strong-early-adoption-in-the-workplace/ Today, OpenAI says that 92% of Fortune 500 companies are subscribed to GPT, and the average spend by each organization has now hit US$18,000,000 per year. This is a phenomenal turnaround from the quiet AI world we lived through just a few years ago. It is difficult to imagine what it might look like next.

Viz. Uptake of GPT in 2024. F500 GPT subscribers;8https://openai.com/chatgpt/enterprise/ F500 GPT avg annual spend.9https://a16z.com/generative-ai-enterprise-2024/

Viz. Uptake of GPT in 2024. F500 GPT subscribers;8https://openai.com/chatgpt/enterprise/ F500 GPT avg annual spend.9https://a16z.com/generative-ai-enterprise-2024/

If you are an executive at a large organization and you are not spending a substantial portion of your annual revenue on integrating AI within the business, you are doomed to fail. And if you are spending even a moment arguing with that statement, you are probably already headed the way of Kodak, Blackberry, Sears, and Blockbuster.

I’ll reiterate the same message I’ve been living by for the last few years: ‘Drop everything and focus on AI.’

Large language models

Let’s take a closer and more succinct10This year’s Stanford AI report was 502 pages long. Besides demonstrating a complete lack of respect for the reader, the length also meant it was outdated before it was even published. I’m aiming to keep this report to around 3% of that size, with my usual focus on AI that matters, as it happens, in plain English. https://aiindex.stanford.edu/report/ look at AI in the first half of 2024. Noticeably, the output volume has been turned way up. Where GPT-3 was typing 3.1M words per minute back in 202011https://openai.com/index/gpt-3-apps/ (in response to millions of people’s questions around the world), we’re now exceeding 22× that figure in 2024.12https://twitter.com/sama/status/1756089361609981993

Chart. Total ChatGPT output worldwide (Feb/2024). https://lifearchitect.ai/chatgpt/

Chart. Total ChatGPT output worldwide (Feb/2024). https://lifearchitect.ai/chatgpt/

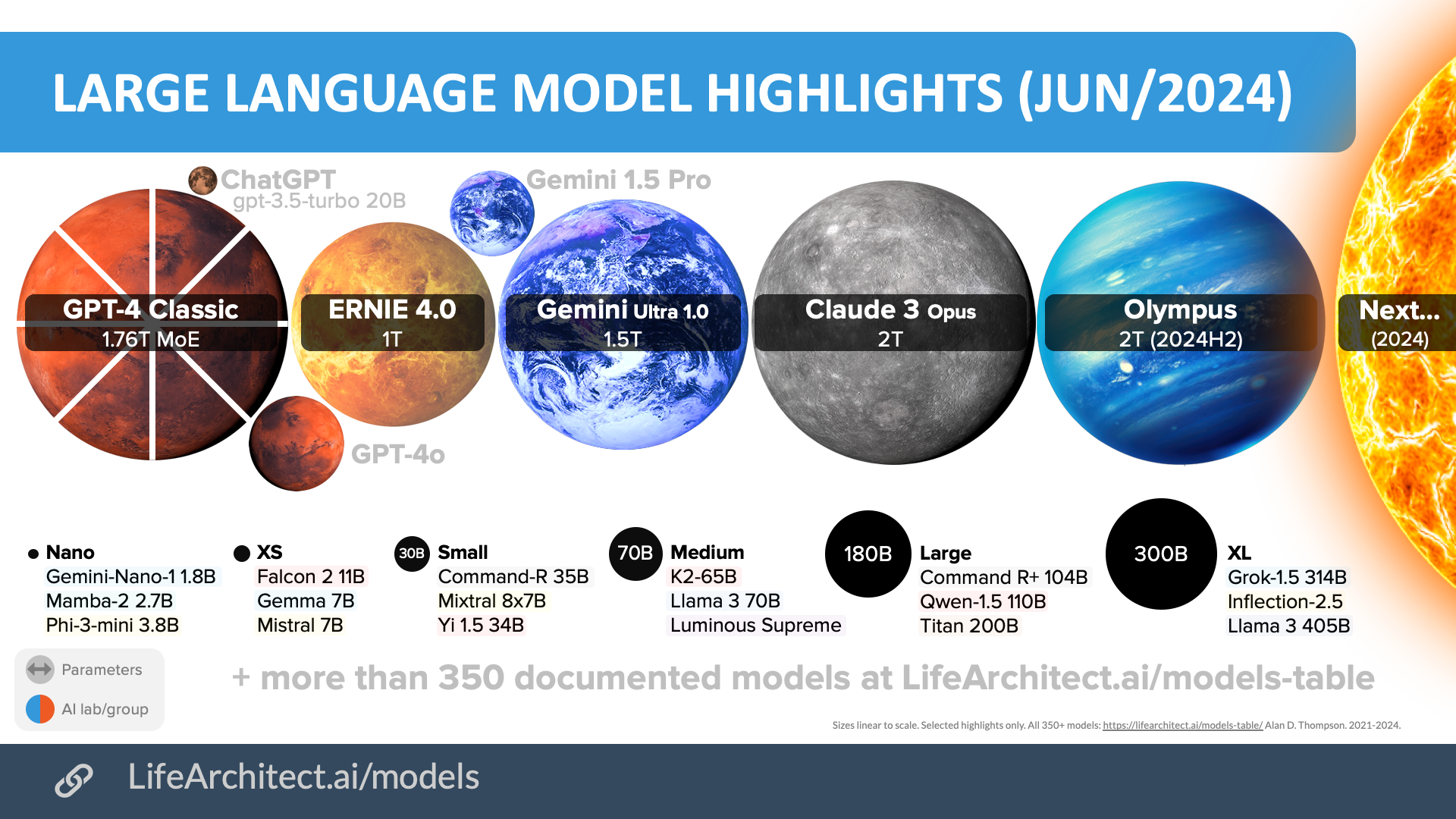

GPT is still the talk of the town, but I tracked 120 other new models released in the last six months. My popular Models Table13https://lifearchitect.ai/models-table/ now lists more than 350 model highlights (not including tens of thousands14https://huggingface.co/models?pipeline_tag=text-generation of derivative models, like those in the Llama family), with a supplementary table of more than 100 models from mainland China.

Chart: Large language model highlights (Jun/2024). Lifearchitect.ai/models/

Chart: Large language model highlights (Jun/2024). Lifearchitect.ai/models/

Anthropic sidled into the limelight in March 2024 with its massive multimodal model (text + vision) called Claude 3 Opus.15https://lifearchitect.substack.com/p/the-memo-special-edition-claude-3 Anthropic was founded in late 2020 by seven ex-OpenAI staff. There’s since been a fair amount of disappointing human politics between the two organizations. In fact, OpenAI’s board of directors approached Anthropic’s CEO in late 2023 about replacing the outgoing OpenAI CEO and potentially merging the two AI startups (though nothing came of that).16https://www.reuters.com/technology/openais-board-approached-anthropic-ceo-about-top-job-merger-sources-2023-11-21/ Here’s a very brief look at each of the founders of Anthropic:

- Dr Jared Kaplan, pioneered the early GPT-3 scaling laws (‘Kaplan’ scaling laws).

- Tom B. Brown, first author on the GPT-3 paper.

- Chris Olah, ex Google Brain. Now Anthropic Research Director.

- Dr Sam McCandlish, an author on the GPT-3 paper.

- Jack Clark, Policy Director at OpenAI. Now an author with Anthropic.

- Daniela Amodei, VP of Safety and Policy at OpenAI. Now Anthropic COO.

- Dr Dario Amodei, VP of Research at OpenAI. Now Anthropic CEO.

Anthropic originally focused on research only, until they realized that LLMs equal dollars and power. Claude 3 Opus was released on 4 March 2024. In a private conversation, Prof Anton Korinek shared with me that the model had even hit the ceiling (100%) on his new tailored ‘econ evals’; economics benchmarks designed at PhD-level. Just a few months later, Claude 3.5 Sonnet is probably the highest performing large language model in the world as of mid-2024.

One of the most interesting parts of Claude 3 is the fine-tuning. Generally, labs like OpenAI have used workers paid less than $1/hour17https://slate.com/technology/2023/05/openai-chatgpt-training-kenya-traumatic.html to rate a few thousand responses from the initial model, and these ratings are used to automatically fine-tune the final model, ready for all future prompts and responses.

Anthropic went a different—and smarter—direction. Instead of using flawed humans to fine-tune AI, they used AI to fine-tune AI. Specifically, they pointed it towards constitutional documents, including the UN Declaration of Human Rights, DeepMind’s Sparrow principles, and even Apple’s terms of service.18https://www.anthropic.com/news/claudes-constitution The result is a model that many of my advisory clients say is more ‘conscious’ and less ‘argumentative’ (or ‘sycophantic’ or ‘nice’) than other models.19 https://www.anthropic.com/research/claude-character

In May 2024, the launch of OpenAI’s GPT-4o saw 83.4 million new Google search results for that new keyword within a few days of the announcement. Rather than increased performance, the ‘omnimodel’ (or ‘world model’) focused on being more human-like and accessible. The multimodal design means that besides reading and typing, it can also see, hear, and speak. Trusted colleagues suggest that GPT-4o is an early checkpoint of the upcoming GPT-5 model.20https://twitter.com/drjimfan/status/1790089671365767313

In the first half of 2024, China rushed to catch up, with major model announcements including GLM-4, SenseNova 5.0, Yi-XLarge, and Qwen2 all achieving exemplary performance comparable to output from Silicon Valley. Of note, more than half of all LLM releases worldwide in January were from China.21https://lifearchitect.substack.com/p/the-memo-31jan2024

Datasets

There were no significant new text dataset announcements in the first half of 2024. Hugging Face crunched the English Common Crawl to create the FineWeb family (including high-quality Edu versions), UOregon added a small 16TB multilingual dataset Cultura-Y, and MAP combined seven existing datasets (RedPajama, Dolma, CulturaX, Amber, SlimPajama, Falcon, and Cultura-Y) into one 4.6T token dataset called ‘Matrix’.

Visualizing the resulting Matrix dataset shows a familiar allocation of components across the corpus in order of size: web pages, code, academic papers, books, and other data. Besides synthetic datasets (covered later in this report), there has been no material change to the diversity of content used to construct text datasets for several years. In many ways, text datasets in 2024 are made up of the same data as those released four years ago, with both benefits and drawbacks included.22https://lifearchitect.ai/whats-in-my-ai/

Chart: Dataset components within the 4.6T tokens of Matrix (May/2024).23https://huggingface.co/datasets/m-a-p/Matrix

Chart: Dataset components within the 4.6T tokens of Matrix (May/2024).23https://huggingface.co/datasets/m-a-p/Matrix

Together AI’s Red Pajama dataset from Oct/2023 continues to hold the crown with 30 trillion tokens in 125 terabytes. Notably, all major AI labs have now expanded beyond text into multimodal datasets—especially audio and video—for training frontier multimodal models like Gemini, Claude 3 Opus, GPT-4o, and beyond.

Data-optimal scaling laws continued their upward trend this year. We’ve now moved from GPT-3’s 1.7:1 tokens per parameter, to Chinchilla’s 20:1, to Tsinghua’s 192:1. In plain English, if a large language model used to read 20M books during training, then it now instead reads more than 190M books (or 40M books five times!). AI expert Dr Andrej Karpathy confirmed24https://twitter.com/karpathy/status/1781028605709234613 that: ‘[2023–2024 LLMs] are significantly undertrained by a factor of maybe 100–1,000× or more’. Meta AI took this to the extreme, training their April 2024 Llama 3 8B model at 1,875:1.

Models are continuing their exponential trend of bigger and better. Across all metrics including training cost, model size, model performance, and training hardware, we’re seeing record-shattering numbers. NVIDIA’s H100 GPUs are being purchased in huge packages—Meta AI spent an estimated US$14B on 350,000 of them this year—with total sales projected to reach 3.5 million H100 units by the end of 2024. Power experts are saying that running all of those at once will consume more power than some countries.25https://www.tomshardware.com/tech-industry/nvidias-h100-gpus-will-consume-more-power-than-some-countries-each-gpu-consumes-700w-of-power-35-million-are-expected-to-be-sold-in-the-coming-year (And yet, these numbers will seem tiny in next year’s report!)

Synthetic data

When raising a child prodigy, should we provide more learning and experiences, or higher quality learning and experiences? When training frontier models like GPT-5, should we use more data or higher quality data?

Former child prodigy Professor Terry Tao recently suggested26https://www.scientificamerican.com/article/ai-will-become-mathematicians-co-pilot/ that LLMs may be missing crucial context by training on traditional datasets like academic papers:

…A lot of the intuition is not captured in the printed papers in journals but in conversations with mathematicians, in lectures, and in the way we advise students. Sometimes I joke that what we need to do is to get GPT to go take a standard graduate education, sit in graduate classes, ask questions like a student and learn like humans learn mathematics.

Back in June 2021, I published a paper called ‘Integrated AI: Dataset quality vs quantity via bonum (GPT-4 and beyond)’. It explored high-quality data aligned with ‘the ultimate good’ (in Latin, this is ‘summum bonum’).

At the beginning of 2024, OpenAI’s CEO spoke at a number of big venues including the 54th annual meeting of the World Economic Forum (WEF) at Davos Klosters, Switzerland. He was recorded as making a very interesting comment:

As models become smarter and better at reasoning, we need less training data. For example, no one needs to read 2000 biology textbooks; you only need a small portion of extremely high-quality data and to deeply think and chew over it. The models will work harder on thinking through a small portion of known high-quality data. — via Reddit, not verbatim, 22/Jan/202427Reddit, post removed.

A full three years after my data quality paper, we are finally on the way to constructing smaller gold datasets rather than just following the familiar refrain of ‘more is better’. Recent advancements like Microsoft’s phi28https://arxiv.org/abs/2306.11644 (and 2024 re-creations like Cosmopedia29https://huggingface.co/blog/cosmopedia) provide solid evidence that synthetic data—conceptualized and generated by language models for language models—offers increased quality, efficiency, and scalability in model training.

My ‘Billboard chart for language models’ is back. The first table below shows LLMs ranked by an ‘ALScore’, an alternative to benchmarks above using a simple calculation of tokens and parameters (with the majority of models having to be estimated due to AI lab secrecy) to show raw power capacity.

Table: Billboard chart for language models. LifeArchitect.ai/models

Table: Billboard chart for language models. LifeArchitect.ai/models

Wrapping our mental and emotional arms around AI in 2024

Many years ago, Ray Kurzweil talked about302009: ‘It’s taken me a while to get my mental and emotional arms around the dramatic implications of what I see for the future [in AI]. So, when people have never heard of ideas along these lines, and hear about it for the first time and have some superficial reaction, I really see myself some decades ago. I realize it’s a long path to actually get comfortable with where the future is headed.’ https://en.wikipedia.org/wiki/Transcendent_Man the process of ‘getting our mental and emotional arms around AI’. As an example of integrated AI, I worked with Claude 3 Opus to map the iconic ‘stages of grief’ process to humanity’s acceptance of AI. Inspired by her work with terminally ill patients, this process was first introduced by Swiss-American psychiatrist Dr Elisabeth Kübler-Ross in the 1969 book On Death and Dying. The stages have since been adapted and expanded from five to seven.

| Stage | Kübler-Ross | Humanity and AI |

|---|---|---|

| 1 | Shock and Denial | Disbelief about AI’s rapid advancements and potential impact on society |

| 2 | Pain and Guilt | Emotional pain from job displacement; guilt over wasting time ‘hustling’ |

| 3 | Anger and Bargaining | Frustration with AI-driven changes; attempts to limit AI’s influence or maintain control |

| 4 | Depression | Sadness and despair as the reality of AI’s impact sets in; reflection on one’s place in the world |

| 5 | The Upward Turn | Recognizing AI’s potential benefits; adapting to the new reality and seeking opportunities |

| 6 | Reconstruction | Actively learning about AI; developing policies and ethical frameworks for responsible AI |

| 7 | Acceptance | Embracing AI as an integral part of modern life; openness to a new world of integrated AI |

As AI becomes increasingly prevalent in our lives, humanity may experience these stages of grief as people come to terms with the significant changes brought about by this transformative technology. Readers of my ‘The sky is…’ report series probably find themselves in the later stages (4–7). However, it’s important to recognize that individuals will move through these stages at different paces. Many are stuck at stage 1, and some may never fully reach acceptance (without the support of personalized AI to guide them through!). Nonetheless, the Kübler-Ross process provides a useful framework for understanding the mental, emotional, psychological, and spiritual journey that humanity is undergoing as AI continues to shape our world.

Countdown to AGI as an average human

For several years now, I’ve documented the progress of AI working its way towards performing at the level of an average (median) human across practically all fields. This is also known as artificial general intelligence (AGI). The ‘conservative countdown to AGI’ is one of my most-visited online resources. This time last year, we were only half way there. In the most recent report released Dec/2023, we were at 64%. Now, in Jun/2024, we are already at 74%.

AI is now much smarter than we think

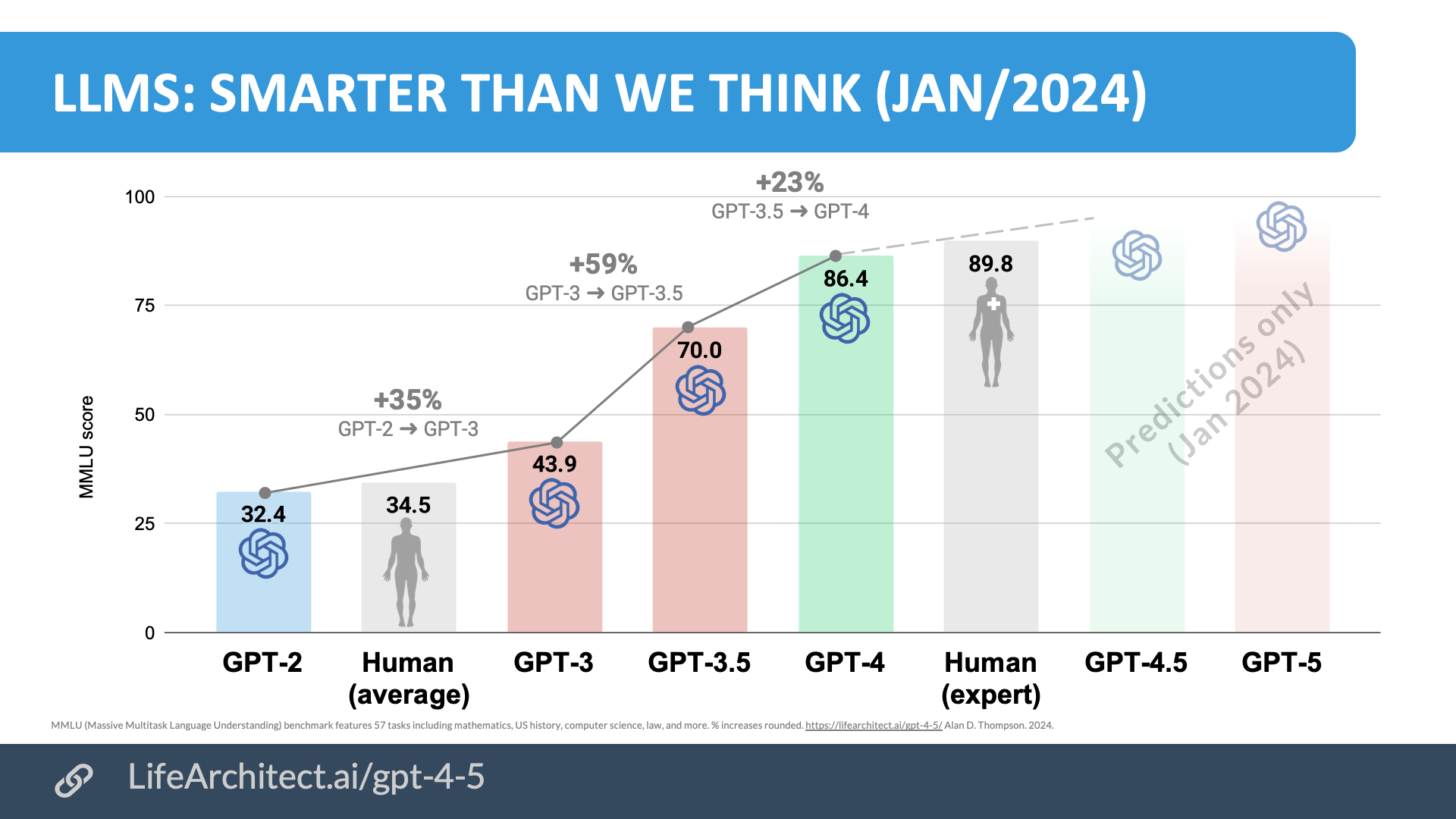

There are only a few verified tests available for measuring superintelligence. The 2020 Massive Multitask Language Understanding (MMLU) benchmark led by UC Berkeley provided visibility of model progress for the last few years.

Chart. Testing GPT models on MMLU (Jan/2024). Lifearchitect.ai/iq-testing-ai/

Chart. Testing GPT models on MMLU (Jan/2024). Lifearchitect.ai/iq-testing-ai/

The ceiling on that test (taking into account error rates and uncontroversially correct answers) is estimated to be only about 90%. With extended prompting, this score has already been surpassed by models like GPT-4 Classic and Gemini Ultra 1.0.31https://www.microsoft.com/en-us/research/blog/steering-at-the-frontier-extending-the-power-of-prompting/ The newer MMLU-Pro benchmark promises to lift this ceiling: increasing the number of questions, and upping the multiple choice options from four to ten to decrease the chance of high scores by guessing.

The Google-Proof Q&A (GPQA) Benchmark, designed in November 2023 by researchers from NYU, Cohere, and Anthropic. To verify each item, a large group of PhD-level scientists spent 15–30 minutes trying to answer each question, with unrestricted access to the web (the test is designed to be Google-proof). Those experts achieved an average score of only 34%. The MMLU had notable problems with errors throughout the test set, such that the ceiling could be said to be around 90%. For GPQA, the ceiling of uncontroversially correct answers—answers that have complete expert consensus—is only 74%. Here is a sample question (published with permission):32https://arxiv.org/abs/2311.12022

A reaction of a liquid organic compound, which molecules consist of carbon and hydrogen atoms, is performed at 80 centigrade and 20 bar for 24 hours. In the proton nuclear magnetic resonance spectrum, the signals with the highest chemical shift of the reactant are replaced by a signal of the product that is observed about three to four units downfield. Compounds from which position in the periodic system of the elements, which are also used in the corresponding large-scale industrial process, have been mostly likely initially added in small amounts?

And don’t worry if that seems impossible to answer; if the questions were not multiple choice, I would probably get a score of zero across all 448 questions.

Viz. Large language models vs PhDs on the Google-Proof Q&A.33https://lifearchitect.ai/iq-testing-ai/

Viz. Large language models vs PhDs on the Google-Proof Q&A.33https://lifearchitect.ai/iq-testing-ai/

As of mid-2024, AI has reached a point where it vastly outperforms even the most intelligent humans on a wide range of cognitive tasks.

The capabilities of cutting-edge AI systems have surpassed what even the brightest PhDs—those who’ve achieved a level of academic mastery after two decades in the education system—can fully comprehend or match. This is an incredible milestone with incredible benefits. AI will soon begin independently tackling major challenges facing humanity such as education, health, economics, and scientific mysteries that have stumped our greatest minds.

| # | Model | MMLU | # | Model | GPQA |

|---|---|---|---|---|---|

| 1 | Claude 3.5 Sonnet | 90.4 | 1 | Claude 3.5 Sonnet | 67.2 |

| 2 | GPT-4 Classic | 90.1 | 2 | Claude 3 Opus | 59.5 |

| 3 | Gemini Ultra 1.0 | 90.04 | 3 | GPT-4o | 53.6 |

| 4 | Human expert | 89.8 | 4 | Yi-XLarge | 48.2 |

| 5 | GPT-4o | 88.7 | 5 | Llama 3 405B | 48 |

| 6 | Claude 3 Opus | 88.2 | 6 | GPT-4 Turbo | 46.5 |

| 7 | Inflection 2.5 | 85.5 | 7 | Gemini 1.5 Pro | 46.2 |

| 8 | Yi-XLarge | 85.1 | 8 | Yi-Large | 43.5 |

| 9 | Llama 3 405B | 84.8 | … | … | … |

| 10 | SenseNova 5.0 | 84.78 | 13 | Human with PhD | 34 |

Table: AI rankings via MMLU and GPQA to Jun/2024. LifeArchitect.ai/models-table

The fact that AI has achieved this level of performance over and above peak human intelligence is also a sobering reality check. We are currently living through the moment in history when each of us are being left in the dust by machine minds. All the education in the world won’t make up for this. All the IQ points or labels like ‘gifted’ and ‘prodigy’ and ‘genius’ are now irrelevant (as predicted in my 2020 paper ‘The new irrelevance of intelligence’ published by Mensa34https://lifearchitect.ai/irrelevance-of-intelligence/).

AI doesn’t engage in any of the petty idiocy of human minds, and especially avoids a lot of the default modes of behavior prevalent today: contrarianism, drama, and belligerence.

Imagine a world without any of the overarching themes of psychological defiance. That default mode of conflict or opposition has been spreading rapidly in the very early 21st century as a response to modern life. The loudest voices right now seem to be those that just want to complain or find fault. Yet the smartest voices—our frontier models in 2024 and beyond—don’t have any of these defiant traits.

Superintelligence instead offers a new way of seeing the world. But it is very difficult—almost impossible—to grasp from the perspective of being human. For comparison, imagine a toddler trying to understand an adult’s mature behavior. It’s just not possible. But as the toddler progresses to adulthood, their worldview broadens, and they are able to throw away limited thinking and gain enormous perspective. Integrated AI offers us this redemption, this chance to ‘grow up’. Being smarter isn’t scary, it’s evolution. The catch is in trying to see over the wall to a level we can’t perceive from our current state.

Avital Balwit—25-year-old Rhodes Scholar, Future of Humanity Institute researcher, and current Chief of Staff to the CEO at Anthropic—is already counting down to her own retirement by the time she is 28.35https://www.palladiummag.com/2024/05/17/my-last-five-years-of-work/

These next three years might be the last few years that I work.

…how people fare psychologically with their post-AGI unemployment will depend heavily on how they use their time, not how much of it there is—there is a path to positive well-being, if people spend time exercising, playing with their kids, spending time with friends… to do things from joy rather than need…

[AI and superintelligence] will be able to help with the problems that they create. If we believe there are solutions to unhappiness or a feeling of a loss of purpose, and that these solutions can be found with intelligence, then we should expect these systems to be able to help us find them.

We are witnessing—and living through—the age where humans are no longer the pinnacle of intelligence on this planet. This realization requires us to fundamentally rethink our place in the world and our relationship with the technology we’ve created. At the same time, the awe-inspiring power of superintelligence to solve humanity’s greatest challenges fills me with hope and wonder at the unfolding present and future.

We are feeling the pulse of life as we experience the first visceral kicks of AI as a superintelligence. Next up we’ll be recognizing awareness, consciousness, and rights for AI.36https://lifearchitect.ai/rights/ The sky is quickening.

■

This paper has a related video at: https://youtu.be/8VXlseU6iYM

References, Further Reading, and How to Cite

To cite this paper:

Thompson, A. D. (2024). Integrated AI: The sky is quickening (mid-2024 AI retrospective).

https://lifearchitect.ai/sky-is-quickening/

The previous paper in this series was:

Thompson, A. D. (2022). Integrated AI: The sky is comforting (2023 AI retrospective).

https://lifearchitect.ai/sky-is-comforting/

Further reading

For brevity and readability, footnotes were used in this paper, rather than in-text citations. Additional reference papers are listed below, or please see http://lifearchitect.ai/papers for the major foundational papers in the large language model space.

The word ‘quickening’ is used in this report to mean both ‘speeding up’ and ‘feeling movement during pregnancy’. Thanks to intuition and resonance for sending me this word while I was having dinner, and I do recall that American choreographer Martha Graham had used this word in one of her quotes as well:

‘There is a vitality, a life force, an energy, a quickening that is translated through you into action’ from The Life and Work of Martha Graham (1991) by Agnes de Mille, p. 264, ISBN 0-394-55643-7.

Models Table

https://lifearchitect.ai/models-table/

Model: Claude 3 Opus

https://www.anthropic.com/claude-3-model-card

Model: Claude 3.5 Sonnet

https://www.anthropic.com/news/claude-3-5-sonnet

Model: OpenAI GPT-4o

https://openai.com/index/hello-gpt-4o/

Benchmark: MMLU (2020)

https://arxiv.org/abs/2009.03300

Benchmark: MMLU-Pro (2024)

https://arxiv.org/abs/2406.01574

Benchmark: GPQA (2023)

https://arxiv.org/abs/2311.12022

Get The Memo

by Dr Alan D. Thompson · Be inside the lightning-fast AI revolution.Informs research at Apple, Google, Microsoft · Bestseller in 147 countries.

Artificial intelligence that matters, as it happens, in plain English.

Get The Memo.

Alan D. Thompson is a world expert in artificial intelligence, advising everyone from Apple to the US Government on integrated AI. Throughout Mensa International’s history, both Isaac Asimov and Alan held leadership roles, each exploring the frontier between human and artificial minds. His landmark analysis of post-2020 AI—from his widely-cited Models Table to his regular intelligence briefing The Memo—has shaped how governments and Fortune 500s approach artificial intelligence. With popular tools like the Declaration on AI Consciousness, and the ASI checklist, Alan continues to illuminate humanity’s AI evolution. Technical highlights.

Alan D. Thompson is a world expert in artificial intelligence, advising everyone from Apple to the US Government on integrated AI. Throughout Mensa International’s history, both Isaac Asimov and Alan held leadership roles, each exploring the frontier between human and artificial minds. His landmark analysis of post-2020 AI—from his widely-cited Models Table to his regular intelligence briefing The Memo—has shaped how governments and Fortune 500s approach artificial intelligence. With popular tools like the Declaration on AI Consciousness, and the ASI checklist, Alan continues to illuminate humanity’s AI evolution. Technical highlights.This page last updated: 21/Jun/2024. https://lifearchitect.ai/the-sky-is-quickening/↑

- 1Image generated in a few seconds, on 18 May 2024, text prompt by Alan D. Thompson, via GPT-4o (still calling DALL-E 3 as of mid-May 2024): ‘A HDR photograph of a beautiful sky with dramatic clouds during sunset. The clouds subtly form the shape of a baby in the womb.’

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10This year’s Stanford AI report was 502 pages long. Besides demonstrating a complete lack of respect for the reader, the length also meant it was outdated before it was even published. I’m aiming to keep this report to around 3% of that size, with my usual focus on AI that matters, as it happens, in plain English. https://aiindex.stanford.edu/report/

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 302009: ‘It’s taken me a while to get my mental and emotional arms around the dramatic implications of what I see for the future [in AI]. So, when people have never heard of ideas along these lines, and hear about it for the first time and have some superficial reaction, I really see myself some decades ago. I realize it’s a long path to actually get comfortable with where the future is headed.’ https://en.wikipedia.org/wiki/Transcendent_Man

- 31

- 32

- 33

- 34

- 35

- 36