Get The Memo.

Alan D. Thompson

December 2024

Summary

| Field | Detail |
|---|---|
| Organization | Amazon |
| Model name | Nova |
| Internal/project name | Olympus, Metis |
| Model type | Multimodal: text, image, document, video in; text out. |
| Parameter count | Nova Micro ~11B; Nova Lite ~20B; Nova Pro ~90B; Nova Premier ~470B (all estimates by Alan, based on benchmark scores) |
| Dataset size (tokens) | 10T |
| Training data end date | Alan expects: Oct/2023 |
| Training start date | Alan expects: Nov/2023 |
| Training end/convergence date | Alan expects: May/2024 |
| Release date (public) | 3/Dec/2024 |
| Paper | Technical report |
| Playground | Amazon Bedrock account: Playground |

Etymology

2nd gen: Titan: The Titans were second-generation deities who lived on Mount Othrys. The Titans were immensely powerful beings who ruled before the Olympians.

3rd gen: Olympus: The Olympians were third-generation gods, mostly children of Titans, who established their reign on Mount Olympus after defeating the Titans in the Titanomachy. (source)

3rd gen (new): Nova: From the Latin ‘novus’ meaning ‘new.’ The term has no connection to Greek mythology. In astronomy, it describes a white dwarf star that suddenly brightens by thousands of times when it pulls enough material from a companion star to trigger a thermonuclear explosion on its surface [for the purposes of this analysis, this could possibly be related to synthetic data generation using other frontier models].

Amazon Nova size estimate

Alan’s analysis:

a. Nova Pro is estimated to be 90B parameters on 10T tokens (≈111:1).

b. Nova Premier is estimated to be 470B parameters on 20T tokens (43:1).

c. Nova Premier at 470B dense parameters is roughly equivalent to a 2T-parameter MoE model (see my o1 dense-MoE calcs table), which was the rumored size of Amazon Olympus.

d. Compare with Amazon’s 2023 model Amazon Titan 200B parameters on 4T tokens (20:1).

e. Nova Pro (and 16 other models) outperforms the current default ChatGPT model GPT-4o-2024-11-20.

| Benchmark | Amazon Nova Pro | OpenAI ChatGPT default model, Nov/2024 (GPT-4o-2024-11-20) |
|---|---|---|
| MMLU | 85.9 | 85.7 |
| GPQA | 46.9 | 46.0 |
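The token-to-parameter ratios in items a, b and d can be reproduced with a few lines of Python. This is a minimal sketch: the Nova parameter and token counts are Alan's estimates from above, the Titan figures are those given in item d, and the ~20:1 Chinchilla reference point (Hoffmann et al., 2022) is an outside assumption added only for comparison, not a figure from the technical report.

```python
"""Tokens-per-parameter ratios for the size estimates above (sketch)."""

# (parameters, training tokens). Nova figures are Alan's estimates;
# Titan figures are the 200B / 4T numbers from item d.
MODELS = {
    "Nova Pro": (90e9, 10e12),
    "Nova Premier": (470e9, 20e12),
    "Titan (2023)": (200e9, 4e12),
}

# Assumption: Chinchilla-style compute-optimal reference of ~20 tokens per parameter.
CHINCHILLA_RATIO = 20.0


def tokens_per_param(params: float, tokens: float) -> float:
    """Return how many training tokens the model saw per parameter."""
    return tokens / params


def main() -> None:
    for name, (params, tokens) in MODELS.items():
        ratio = tokens_per_param(params, tokens)
        vs_chinchilla = ratio / CHINCHILLA_RATIO
        print(f"{name:<13} {ratio:6.1f}:1  ({vs_chinchilla:.1f}x Chinchilla)")


if __name__ == "__main__":
    main()
```

Running it prints ratios of about 111:1, 43:1 and 20:1, so on these estimates Nova Pro would be trained far past the Chinchilla point, while the 2023 Titan run sat right at it.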

GPQA tetris

See: Mapping IQ, MMLU, MMLU-Pro, GPQA


Models Table

Summary of current models: View the full data (Google sheets)

Updates

3/Dec/2024: Amazon Nova Pro released (blog post, project page, benchmark page, technical report).

24/Jun/2024:

The [chatbot’s] secret internal project is code-named “Metis,” likely in reference to the Greek goddess of wisdom. The new service is designed to be accessed from a web browser, similar to how other AI assistants work… Metis is powered by an internal Amazon AI model called Olympus, another name inspired by Greek mythology. This is a more powerful version of the company’s publicly available Titan model, the people familiar said. At the most basic level, Metis gives text- and image-based answers in a smart, conversational manner, according to the internal document. It’s also able to share links to the source of its responses, suggest follow-up queries, and generate images. (24/Jun/2024, BI)

13/Jun/2024:

The former research scientist working on the Alexa LLM said Project Olympus is “a joke,” adding that the largest model in progress is 470 billion parameters. (13/Jun/2024, Fortune)

18/Mar/2024:

Amazon can see $1 billion training runs on the horizon:

…Technical talk from a longtime AWS person sheds light on frontier AI training…

James Hamilton, a distinguished engineer at Amazon, said at a talk this year that within the last year Amazon carried out a $65m training run. Specifically, they trained a 200B dense model on 4T tokens of data across 13,760 NVIDIA A100 chips (using 1,720 P4d nodes). It took 48 days to train. Hamilton described this training run as “1 gen old” so we can assume Amazon has moved on to larger runs since then. Looking ahead, Hamilton said “training runs soon to cross $1b”. (18/Mar/2024, via Jack Clark)
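The figures in Hamilton's talk can be sanity-checked with simple arithmetic. The sketch below assumes 8 A100 GPUs per P4d node (consistent with 1,720 nodes giving 13,760 chips), the common C ≈ 6ND approximation for dense-transformer training compute, and a 312 TFLOP/s dense BF16 peak per A100. The last two are outside assumptions rather than figures from the talk, and the `TrainingRun` class and its field names are illustrative only.

```python
"""Back-of-envelope check of the 200B / 4T training run (sketch)."""

from dataclasses import dataclass

GPUS_PER_P4D_NODE = 8          # each P4d node carries 8 NVIDIA A100 GPUs
A100_BF16_PEAK_FLOPS = 312e12  # assumption: dense BF16 peak per A100, in FLOP/s


@dataclass
class TrainingRun:
    params: float    # model parameters (N)
    tokens: float    # training tokens (D)
    nodes: int       # number of P4d nodes
    days: float      # wall-clock training time
    cost_usd: float  # reported cost of the run

    @property
    def gpus(self) -> int:
        return self.nodes * GPUS_PER_P4D_NODE

    @property
    def gpu_hours(self) -> float:
        return self.gpus * self.days * 24

    @property
    def train_flops(self) -> float:
        # Assumption: C ~= 6 * N * D for a dense transformer (forward + backward).
        return 6 * self.params * self.tokens

    def flops_per_gpu_second(self) -> float:
        """Average achieved FLOP/s per GPU over the whole run."""
        return self.train_flops / (self.gpus * self.days * 86_400)

    def utilization(self) -> float:
        """Approximate model FLOPs utilization (MFU) against the assumed peak."""
        return self.flops_per_gpu_second() / A100_BF16_PEAK_FLOPS


run = TrainingRun(params=200e9, tokens=4e12, nodes=1_720, days=48, cost_usd=65e6)
print(f"GPUs:             {run.gpus:,}")
print(f"GPU-hours:        {run.gpu_hours:,.0f}")
print(f"Cost per GPU-hr:  ${run.cost_usd / run.gpu_hours:.2f}")
print(f"Training compute: {run.train_flops:.2e} FLOPs")
print(f"Per-GPU rate:     {run.flops_per_gpu_second() / 1e12:.1f} TFLOP/s")
print(f"Implied MFU:      {run.utilization():.0%}")
```

With these inputs the sketch reports 13,760 GPUs (matching the talk), about 15.9M GPU-hours, roughly $4.10 per GPU-hour, about 4.8e24 FLOPs and an implied utilization near 27%. The utilization figure rests entirely on the 6ND approximation and the assumed peak, so it is an order-of-magnitude check, not a measured number.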

1/Feb/2024: CEO Andy Jassy has said the company plans to incorporate generative AI across all of its businesses. (CNBC)

Dataset

According to the technical report, Amazon Nova was trained on ‘a mixture of large amounts of multilingual and multimodal data. Our models were trained on data from a variety of sources, including licensed data, proprietary data, open source datasets, and publicly available data where appropriate.’

Multilingual in 200+ languages, sorted by number of training tokens:

- English

- German

- Spanish

- French

- Italian

- Japanese

- Korean

- Arabic

- Chinese (zh-CN)

- Russian

- Hindi

- Portuguese

- Dutch

- Turkish

- Hebrew

- and around 200 more…

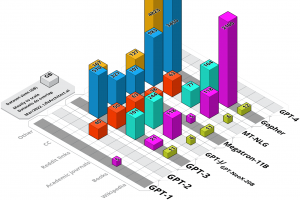

The Amazon Nova dataset is expected to use the same text sources as those detailed in my report What’s in my AI?, with some additional datasets from image, audio, and video, as well as new synthetic data.

What’s in my AI? A Comprehensive Analysis of Datasets Used to Train GPT-1, GPT-2, GPT-3, GPT-NeoX-20B, Megatron-11B, MT-NLG, and Gopher


Alan D. Thompson

LifeArchitect.ai

March 2022

26 pages incl title page, references, appendix.

Timeline to Nova

| Date | Milestone |
|---|---|
| 2/Aug/2022 | AlexaTM 20B paper. |
| 2/Feb/2023 | Multimodal CoT paper. |
| 28/Sep/2023 | Amazon Titan embeddings released. |
| 8/Nov/2023 | Amazon Olympus plans leaked. |
| 3/Dec/2024 | Amazon Nova Pro released. |
| 30/Apr/2025 | Amazon Nova Premier 470B released. |

Get The Memo

by Dr Alan D. Thompson · Be inside the lightning-fast AI revolution. Informs research at Apple, Google, Microsoft · Bestseller in 147 countries.

Artificial intelligence that matters, as it happens, in plain English.


Alan D. Thompson is a world expert in artificial intelligence, advising everyone from Apple to the US Government on integrated AI. Throughout Mensa International’s history, both Isaac Asimov and Alan held leadership roles, each exploring the frontier between human and artificial minds. His landmark analysis of post-2020 AI—from his widely-cited Models Table to his regular intelligence briefing The Memo—has shaped how governments and Fortune 500s approach artificial intelligence. With popular tools like the Declaration on AI Consciousness, and the ASI checklist, Alan continues to illuminate humanity’s AI evolution. Technical highlights.

This page last updated: 13/Jan/2026. https://lifearchitect.ai/olympus/