Alan D. Thompson

September 2021

What’s in my AI? A Comprehensive Analysis of Datasets Used to Train GPT-1, GPT-2, GPT-3, GPT-NeoX-20B, Megatron-11B, MT-NLG, and Gopher

What’s in my AI? A Comprehensive Analysis of Datasets Used to Train GPT-1, GPT-2, GPT-3, GPT-NeoX-20B, Megatron-11B, MT-NLG, and Gopher

Alan D. Thompson

LifeArchitect.ai

March 2022

26 pages incl title page, references, appendix.

What is Megatron?

Megatron is a large, powerful transformer developed by the Applied Deep Learning Research team at NVIDIA, based on work by Google.

How to use it

Play with the Megatron-11B model at Adam Daniel King’s InferKit.com.

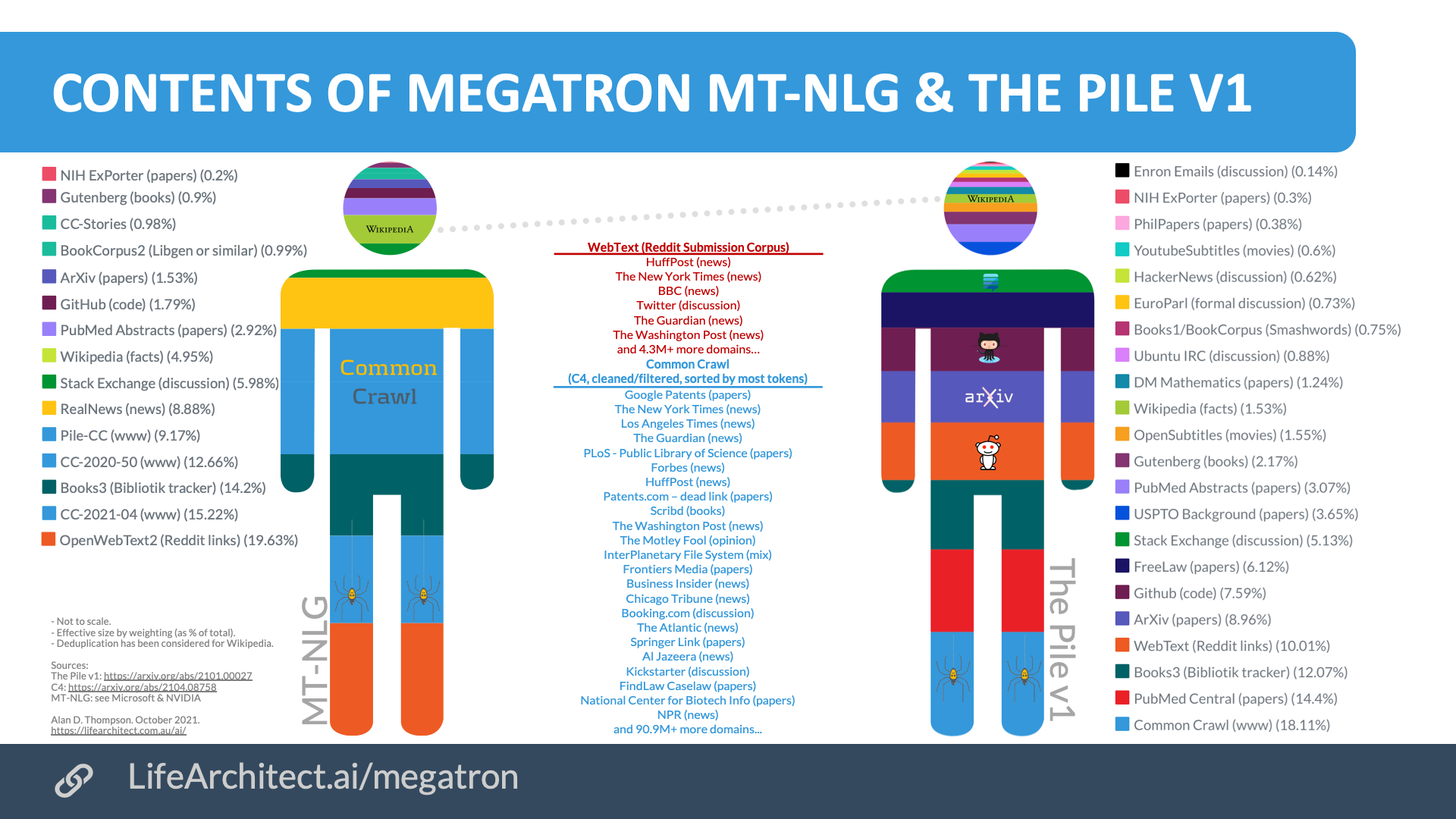

Viz: Megatron MT-NLG (530B, September 2021)

Megatron-Turing Natural Language Generation model (MT-NLG). MT-NLG is the successor to Microsoft Turing NLG 17B and NVIDIA Megatron-LM 8.3B. The MT-NLG model is three times larger than GPT-3 (530B vs 175B).

Download source (PDF)

Contents: View the data (Google sheets)

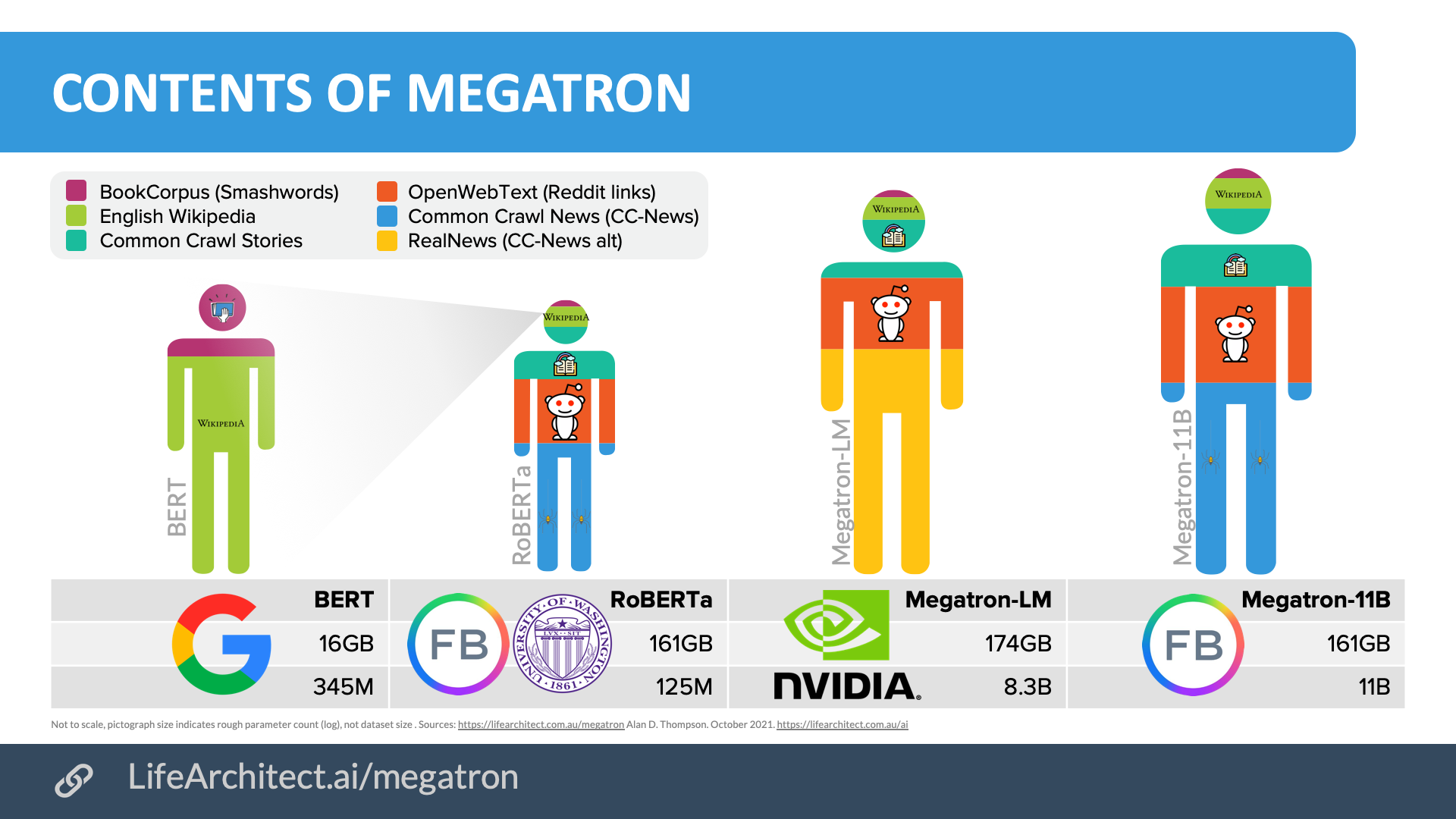

Viz: Evolution of Megatron (2019-2021)

* RealNews is practically the same as Common Crawl News (CC-News). RealNews is 120GB from 5,000 domains from Common Crawl Dec/2016-Mar/2019. CC-News is 76GB from Common Crawl Sep/2016-Feb/2019. They are shown with different colours here (amber/blue) for interest only.

Download source (PDF)

Contents: View the data (Google sheets)

Timeline

November 2018: Google open sources BERT. Trained in four days.

| Name | BERT Bidirectional Encoder Representations from Transformers. |

|---|---|

| Lab | |

| Parameters | 345M |

| Dataset sources | English Wikipedia (12GB) + BookCorpus (4GB). |

| Dataset total size | 16GB |

July 2019: Facebook AI and University of Washington introduce RoBERTa.

| Name | RoBERTa. Robustly optimized BERT approach. |

|---|---|

| Lab | FAIR (Facebook AI Research) + UW |

| Parameters | 125M (RoBERTa-base) |

| Dataset sources | Trained with BERT original dataset: English Wikipedia (12GB) + BookCorpus (4GB) + CC-News, 63 million English news articles from Sep/2016-Feb/2019 (76GB). + OpenWebText/Reddit upvoted (38GB). + Stories, 1M story documents from the CC (31GB). |

| Dataset total size | 161GB |

August 2019: NVIDIA introduces Megatron-LM. Trained in 53 minutes.

8.3 billion parameter transformer language model with data parallelism trained on 512 GPUs.

| Name | Megatron-LM |

|---|---|

| Lab | NVIDIA |

| Parameters | 8.3B (8,300M) |

| Dataset sources | Wikipedia, OpenWebText, RealNews, + CC-Stories. |

| Dataset total size | 174GB |

April 2020: Facebook AI Research labs introduce Megatron-11b (RoBERTa).

Megatron-11b is a unidirectional language model with 11B parameters based on Megatron-LM. Following the original Megatron work, FAIR trained the model using intra-layer model parallelism with each layer’s parameters split across 8 GPUs.

| Name | Megatron-11B |

|---|---|

| Lab | FAIR (Facebook AI Research) |

| Parameters | 11B (11,000M) |

| Dataset sources | Same as RoBERTa. Trained with BERT original dataset: English Wikipedia (12GB) + BookCorpus (4GB) + CC-News, 63 million English news articles from Sep/2016-Feb/2019 (76GB). + OpenWebText/Reddit upvoted (38GB). + Stories, 1M story documents from the CC (31GB). |

| Dataset total size | 161GB |

October 2021: NVIDIA and Microsoft introduce Megatron-Turing NLG 530B (The Pile).

Megatron-Turing Natural Language Generation model (MT-NLG). MT-NLG is the successor to Microsoft Turing NLG 17B and NVIDIA Megatron-LM 8.3B. The MT-NLG model is three times larger than GPT-3 (530B vs 175B). Following the original Megatron work, NVIDIA and Microsoft trained the model on over 4,000 GPUs.

| Name | Megatron MT-NLG |

|---|---|

| Lab | NVIDIA and Microsoft |

| Parameters | 530B (530,000M) |

| Dataset sources | Trained with The Pile v1 + more, totalling 15 datasets: Books3 OpenWebText2 (Reddit links) Stack Exchange PubMed Abstracts Wikipedia Gutenberg (PG-19) BookCorpus2 NIH ExPorter Pile-CC ArXiv GitHub + Common Crawl 2020 + Common Crawl 2021 + RealNews, from 5000 news domains (120GB). + CC-Stories, 1M story documents from the CC (31GB). |

| Dataset total size | >825GB (My estimate is 1.86TB or 1,863GB) Contents: View the data (Google sheets) |

“We live in a time where AI advancements are far outpacing Moore’s law. We continue to see more computation power being made available with newer generations of GPUs, interconnected at lightning speeds. At the same time, we continue to see hyperscaling of AI models leading to better performance, with seemingly no end in sight.”

— NVIDIA and Microsoft (October 2021)

December 2021: Meta AI introduces Fairseq.

NOTE: Fairseq is not related to Megatron, and the two use different technologies for training. The only link is in the datasets, which is the primary focus of this page. 13B trained in 2,363 GPU-days (assume 1,024 GPUs, for a total of ~3 days).

| Name | Fairseq. |

|---|---|

| Lab | Meta, previously known as FAIR (Facebook AI Research) |

| Parameters | 13B and 1.1T |

| Dataset sources | Same as RoBERTa (+CC100). Trained with BERT original dataset: English Wikipedia (12GB) + BookCorpus (4GB) + CC-News, 63 million English news articles from Sep/2016-Feb/2019 (76GB). + OpenWebText/Reddit upvoted (38GB). + Stories, 1M story documents from the CC (31GB). + new addition of English CC100 in Wikipedia style from Jan/2018-Dec/2018 (292GB). |

| Dataset total size | 453GB |

| Date | Type | Title |

| Dec/2025 | 📑 | Genesis Mission |

| Jan/2025 | 📑 | What's in Grok? |

| Jan/2025 | 💻 | NVIDIA Cosmos video dataset |

| Aug/2024 | 📑 | What's in GPT-5? |

| Jul/2024 | 💻 | Argonne AuroraGPT |

| Sep/2023 | 📑 | Google DeepMind Gemini: A general specialist |

| Feb/2023 | 💻 | Chinchilla data-optimal scaling laws: In plain English |

| Aug/2022 | 📑 | Google Pathways |

| Mar/2022 | 📑 | What's in my AI? |

| Sep/2021 | 💻 | Megatron the Transformer, and related language models |

| Ongoing... | 💻 | Datasets Table |

Get The Memo

by Dr Alan D. Thompson · Be inside the lightning-fast AI revolution.Informs research at Apple, Google, Microsoft · Bestseller in 147 countries.

Artificial intelligence that matters, as it happens, in plain English.

Get The Memo.

Alan D. Thompson is a world expert in artificial intelligence, advising everyone from Apple to the US Government on integrated AI. Throughout Mensa International’s history, both Isaac Asimov and Alan held leadership roles, each exploring the frontier between human and artificial minds. His landmark analysis of post-2020 AI—from his widely-cited Models Table to his regular intelligence briefing The Memo—has shaped how governments and Fortune 500s approach artificial intelligence. With popular tools like the Declaration on AI Consciousness, and the ASI checklist, Alan continues to illuminate humanity’s AI evolution. Technical highlights.

Alan D. Thompson is a world expert in artificial intelligence, advising everyone from Apple to the US Government on integrated AI. Throughout Mensa International’s history, both Isaac Asimov and Alan held leadership roles, each exploring the frontier between human and artificial minds. His landmark analysis of post-2020 AI—from his widely-cited Models Table to his regular intelligence briefing The Memo—has shaped how governments and Fortune 500s approach artificial intelligence. With popular tools like the Declaration on AI Consciousness, and the ASI checklist, Alan continues to illuminate humanity’s AI evolution. Technical highlights.This page last updated: 26/Feb/2026. https://lifearchitect.ai/megatron/↑