Get The Memo.

Alan D. Thompson

January 2025

Announced in Jan/2025, the NVIDIA Cosmos video dataset became the largest publicly known training dataset in the world. With 20M hours of video (est. 2.5B videos, avg. 30 sec), it has a raw size of ~45PB, or ~9 quadrillion tokens (9Qa, 9,000T tokens). It surpassed the previous record holder from Jun/2024, the Common Crawl text dataset DCLM-Pool with 240T tokens, by 37.5×. All calculations are by LifeArchitect.ai, assisted by OpenAI o1 on poe.com.
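The headline comparison can be checked with a few lines of arithmetic. This is a minimal sketch using only the figures quoted above; the variable names are illustrative, and the 30-second average video length is the article's assumption rather than a published figure.

```python
# Figures quoted in the text; names are illustrative only.
COSMOS_TOKENS_T = 9_000   # Cosmos raw dataset, in trillions of tokens
DCLM_POOL_TOKENS_T = 240  # DCLM-Pool, in trillions of tokens

ratio = COSMOS_TOKENS_T / DCLM_POOL_TOKENS_T

TOTAL_HOURS = 20_000_000
AVG_CLIP_SECONDS = 30     # assumed average video length
est_videos = TOTAL_HOURS * 3600 // AVG_CLIP_SECONDS

print(f"Cosmos vs DCLM-Pool: {ratio}x")
print(f"Estimated videos: {est_videos / 1e9:.1f}B")
```

Under these assumptions the video count works out to about 2.4B, which the text rounds to roughly 2.5B.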

Summary

| Field | Value |
| --- | --- |
| Organization | NVIDIA |
| Dataset name | Cosmos |
| Announce date | Jan/2025 |
| Documents | ≈2.5B videos |
| Raw dataset size | ≈45PB |
| Raw dataset token count | ≈9,000T tokens |
| Filtered dataset size | ≈2PB (4.44%) |
| Filtered dataset token count | ≈400T tokens |
| Paper | research.nvidia.com |

1. Raw dataset size calcs (≈45PB)

1) Paper: "20M hours of raw videos, 720p-4k". We'll assume ~5 Mbps average.
2) 5 Mbps = 5,000,000 bits/s = 625,000 bytes/s = 0.625 MB/s
3) MB per hour: 0.625 MB/s × 3,600 s = 2,250 MB/hour = 2.25 GB/hour
4) For 20M hours total: 2.25 GB/hour × 20,000,000 hours = 45,000,000 GB = 45,000 TB = ~45 PB total for the raw dataset
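The steps above can be sketched as a small function. This is an illustrative sketch, not NVIDIA's method: the function name `raw_size_gb` is hypothetical, the 5 Mbps bitrate is the article's assumption, and decimal units (1 GB = 10^9 bytes) are used throughout.

```python
def raw_size_gb(hours: float, bitrate_mbps: float = 5.0) -> float:
    """Estimate storage in decimal GB for `hours` of video at a constant bitrate."""
    bytes_per_second = bitrate_mbps * 1_000_000 / 8  # bits/s -> bytes/s
    gb_per_hour = bytes_per_second * 3600 / 1e9      # bytes/hour -> GB/hour
    return gb_per_hour * hours


raw_gb = raw_size_gb(20_000_000)  # 20M hours at the assumed 5 Mbps
raw_pb = raw_gb / 1e6             # 1 PB = 1,000,000 GB in decimal units
print(f"{raw_pb:.1f} PB")
```

Because the result scales linearly with bitrate, assuming 10 Mbps instead would simply double the estimate.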

2. Raw dataset tokens calcs (≈9Qa tokens)

(assuming "8×8×8" temporal & spatial compression, 30 fps @ 1080p)

1) Frames in 20M hours:
   - Each hour = 3,600 s
   - 30 fps × 3,600 s = 108,000 frames/hour
   - 108,000 frames/hour × 20,000,000 hours = 2.16×10^12 frames
2) Temporal compression (divide by 8): 2.16×10^12 frames ÷ 8 = 2.7×10^11 token-frames (270 billion)
3) Spatial compression (divide width by 8, height by 8):
   - 1920 ÷ 8 = 240
   - 1080 ÷ 8 = 135
   - 240 × 135 = 32,400 spatial tokens per token-frame
4) Multiply token-frames by spatial tokens: 2.7×10^11 × 3.24×10^4 = 8.748×10^15 = ~8.75×10^15 tokens = ~9,000 trillion tokens = ~9 quadrillion tokens total for the raw dataset

Confirmed by NVIDIA interview (6/Jan/2025) ‘Cosmos WFM models [world foundation models] were trained on 9,000 trillion tokens from 20 million hours of (video data)…’
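The raw token estimate can be reproduced with integer arithmetic. This is a minimal sketch under the article's assumptions (30 fps, 1080p, 8× compression along time, width, and height); the function name `video_tokens` and its parameters are hypothetical and do not come from the Cosmos paper.

```python
def video_tokens(hours: int, fps: int = 30, width: int = 1920, height: int = 1080,
                 temporal: int = 8, spatial: int = 8) -> int:
    """Estimate token count for `hours` of video under fixed compression factors."""
    frames = hours * 3600 * fps
    token_frames = frames // temporal                            # temporal compression
    tokens_per_frame = (width // spatial) * (height // spatial)  # spatial compression
    return token_frames * tokens_per_frame


raw_tokens = video_tokens(20_000_000)
print(f"{raw_tokens:.3e} tokens")
```

The result, about 8.75×10^15, matches the ~9,000 trillion figure to within rounding.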

3. Filtered dataset size calcs (≈2PB)

1) Paper: "100M curated clips, each ~2–60s." We'll pick a 30s average.
2) 100,000,000 clips × 30s = 3,000,000,000s total; 3,000,000,000s ÷ 3,600 = ~833,333 hours
3) 2.25 GB/hour × 833,333 hours = ~1,875,000 GB = 1,875 TB = 1.875 PB = ~1.9 PB total for pretraining
4) And an additional 10M clips for fine-tuning = ~187.5 TB = ~190 TB
5) Total: 1.875 PB + 0.1875 PB = ~2.06 PB unrounded (2.09 PB, or 2,090 TB, when summing the rounded 1.9 PB and 190 TB), so ≈2 PB for the filtered dataset
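The filtered-size steps can be sketched the same way. This is an illustrative sketch only: `clips_size_pb` is a hypothetical helper, and the 2.25 GB/hour rate and 30-second average clip length are the article's assumptions.

```python
GB_PER_HOUR = 2.25  # follows from the assumed ~5 Mbps average bitrate


def clips_size_pb(num_clips: int, avg_seconds: float = 30.0) -> float:
    """Estimate storage in decimal PB for a set of clips of average length `avg_seconds`."""
    hours = num_clips * avg_seconds / 3600
    return hours * GB_PER_HOUR / 1e6  # GB -> PB


pretrain_pb = clips_size_pb(100_000_000)  # 100M pretraining clips
finetune_pb = clips_size_pb(10_000_000)   # 10M fine-tuning clips
total_pb = pretrain_pb + finetune_pb
print(f"{pretrain_pb:.3f} + {finetune_pb:.4f} = {total_pb:.4f} PB")
```

Summing the unrounded values gives about 2.06 PB; rounding each term first gives the slightly higher 2.09 PB. Both round to ≈2 PB.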

4. Filtered dataset tokens calcs (≈400T)

(assuming "8×8×8" temporal & spatial compression, 30 fps @ 1080p)

1) Each 30s clip has 30 fps × 30 s = 900 frames
2) Temporal compression: 900 frames ÷ 8 = 112.5 → ~112 token-frames
3) Spatial compression:
   - 1920 ÷ 8 = 240
   - 1080 ÷ 8 = 135
   - 240 × 135 = 32,400 spatial tokens
4) Tokens per clip: 32,400 × 112 = 3,628,800 = ~3.63 million tokens per clip
5) For 100M clips: 3.63×10^6 tokens/clip × 100,000,000 clips = 3.63×10^14 = 363 trillion tokens total for pretraining
6) And an additional 10M clips for fine-tuning = 36.3 trillion tokens for fine-tuning
7) 363T + 36.3T = ~400T tokens for the filtered dataset
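The per-clip calculation above can be sketched as follows. This is a minimal sketch under the same assumptions (30-second clips, 30 fps, 1080p, 8× compression on each axis); `clip_tokens` is a hypothetical name, and rounding 112.5 token-frames down to 112 follows the article's choice.

```python
def clip_tokens(seconds: int = 30, fps: int = 30, width: int = 1920, height: int = 1080,
                temporal: int = 8, spatial: int = 8) -> int:
    """Estimate tokens for one clip under fixed temporal and spatial compression."""
    frames = seconds * fps
    token_frames = frames // temporal  # 900 / 8 = 112.5, rounded down to 112
    return token_frames * (width // spatial) * (height // spatial)


per_clip = clip_tokens()
pretrain_tokens = per_clip * 100_000_000  # 100M pretraining clips
finetune_tokens = per_clip * 10_000_000   # 10M fine-tuning clips
total_tokens = pretrain_tokens + finetune_tokens
print(f"{per_clip:,} tokens/clip, {total_tokens / 1e12:.1f}T tokens total")
```

The total comes to about 399T tokens, which the text rounds to 400T.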

NVIDIA Cosmos video dataset categories

- Nature dynamics (20%)

- Spatial awareness and navigation (16%)

- Hand motion and object manipulation (16%)

- Driving (11%)

- Human motion and activity (10%)

- First person point-of-view (8%)

- Dynamic camera movements (8%)

- Synthetically rendered (4%)

- Others (7%)

All dataset reports by LifeArchitect.ai (most recent at top)

| Date | Type | Title |

| Dec/2025 | 📑 | Genesis Mission |

| Jan/2025 | 📑 | What's in Grok? |

| Jan/2025 | 💻 | NVIDIA Cosmos video dataset |

| Aug/2024 | 📑 | What's in GPT-5? |

| Jul/2024 | 💻 | Argonne AuroraGPT |

| Sep/2023 | 📑 | Google DeepMind Gemini: A general specialist |

| Feb/2023 | 💻 | Chinchilla data-optimal scaling laws: In plain English |

| Aug/2022 | 📑 | Google Pathways |

| Mar/2022 | 📑 | What's in my AI? |

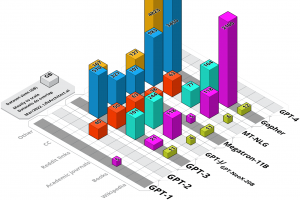

| Sep/2021 | 💻 | Megatron the Transformer, and related language models |

| Ongoing... | 💻 | Datasets Table |


Alan D. Thompson is a world expert in artificial intelligence, advising everyone from Apple to the US Government on integrated AI. Throughout Mensa International’s history, both Isaac Asimov and Alan held leadership roles, each exploring the frontier between human and artificial minds. His landmark analysis of post-2020 AI, from his widely-cited Models Table to his regular intelligence briefing The Memo, has shaped how governments and Fortune 500s approach artificial intelligence. With popular tools like the Declaration on AI Consciousness and the ASI checklist, Alan continues to illuminate humanity’s AI evolution.

This page last updated: 10/Jan/2025. https://lifearchitect.ai/cosmos/